Statistics for Decision Makers - 14.02 - Regression - r squared

<slideshow style="nobleprog" headingmark="。" incmark="…" scaled="false" font="Trebuchet MS" footer="www.NobleProg.co.uk" subfooter="Training Courses Worldwide">

- title

- 14.02 - Regression - r squared

- author

- Bernard Szlachta (NobleProg Ltd) bs@nobleprog.co.uk

</slideshow>

Dividing Variation。

Regression can divide the variation in Y into two parts:

- The variation of the predicted scores (Y')

- The variation in the errors of prediction (E)

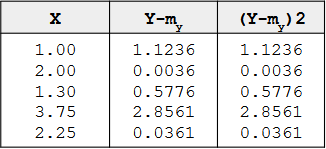

The variation of Y

- The sum of squares Y (SSY) or Total Sum of Squares (TSS)

- The sum of the squared deviations of Y from the mean of Y

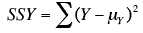

where:

- SSY is the sum of squares Y

- Y is an individual value of Y

- μy is the mean of Y

Example。

The mean of Y is 2.06. SSY is the sum of the values in the third column (the squared deviations from the mean) and is equal to 4.597.

When computed using a sample, you should use the sample mean, M, in place of the population mean.
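The calculation above can be sketched in a few lines of Python. The table of Y values is not reproduced in the text, so the five values below are an assumption, chosen because they are consistent with the stated mean (2.06) and SSY (4.597).

```python
# Assumed Y values (not given in the text); they reproduce the quoted mean and SSY.
y = [1.00, 2.00, 1.30, 3.75, 2.25]

mean_y = sum(y) / len(y)                        # sample mean M
squared_devs = [(v - mean_y) ** 2 for v in y]   # squared deviations from the mean
ssy = sum(squared_devs)                         # sum of squares Y (SSY / TSS)

print(round(mean_y, 2))  # 2.06
print(round(ssy, 3))     # 4.597
```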

Sum of the squared deviations from the mean。

SSY = SSY' + SSE

SSY can be partitioned into two parts:

- 1. The sum of squares predicted (SSY') or Explained Sum of Squares (ESS)

- The sum of squares predicted is the sum of the squared deviations of the predicted scores from the mean predicted score (M')

- 2. The sum of squares error (SSE) or Residual Sum of Squares (RSS)

- The sum of squares error is the sum of the squared errors of prediction
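A minimal sketch of this partition, assuming a simple least-squares line fitted to five illustrative points. The X and Y values are assumptions (the data table is not reproduced in the text), chosen to be consistent with the figures quoted in these slides.

```python
# Assumed data, not taken from the text.
x = [1, 2, 3, 4, 5]
y = [1.00, 2.00, 1.30, 3.75, 2.25]
n = len(y)

mean_x = sum(x) / n
mean_y = sum(y) / n

# Least-squares slope and intercept for Y' = intercept + slope * X
slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
         / sum((a - mean_x) ** 2 for a in x))
intercept = mean_y - slope * mean_x

predicted = [intercept + slope * a for a in x]   # predicted scores Y'
mean_pred = sum(predicted) / n                   # M' (equals the mean of Y for this fit)

ssy = sum((b - mean_y) ** 2 for b in y)                  # total
ss_pred = sum((p - mean_pred) ** 2 for p in predicted)   # SSY' (explained)
sse = sum((b - p) ** 2 for b, p in zip(y, predicted))    # SSE (error)

print(round(ss_pred, 3), round(sse, 3), round(ssy, 3))   # 1.806 2.791 4.597
```

Up to floating-point rounding, `ss_pred + sse` equals `ssy`, which is the identity SSY = SSY' + SSE.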

Proportion of variation explained。

- SSY is the total variation

- SSY' is the variation explained

- SSE is the variation unexplained

Therefore, the proportion of variation explained can be computed as:

Proportion explained = SSY'/SSY

Similarly, the proportion not explained is:

Proportion not explained = SSE/SSY
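As a quick worked check, the two proportions can be computed from the sums of squares used in this section's example (SSY = 4.597, SSY' = 1.806, SSE = 2.791). The variable names are illustrative only.

```python
# Sums of squares from the example in this section
ssy = 4.597       # total variation
ss_pred = 1.806   # variation explained (SSY')
sse = 2.791       # variation unexplained (SSE)

prop_explained = ss_pred / ssy
prop_unexplained = sse / ssy

print(round(prop_explained, 3))    # 0.393
print(round(prop_unexplained, 3))  # 0.607
```

The two proportions sum to 1 because SSY' and SSE add up to SSY.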

r2 and Pearson correlation。

There is an important relationship between the proportion of variation explained and Pearson's correlation:

r2 = SSY'/SSY, the proportion of variation explained

Therefore,

- if r = 1, then the proportion of variation explained is 1

- if r = 0, then the proportion of variation explained is 0

- if r = 0.4, then the proportion of variation explained is 0.16
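The relationship can be checked numerically. The sketch below computes Pearson's r directly from the data and, separately, the proportion of variation explained by a least-squares line; the X and Y values are assumed for illustration and are not taken from the text.

```python
import math

# Assumed data, not taken from the text.
x = [1, 2, 3, 4, 5]
y = [1.00, 2.00, 1.30, 3.75, 2.25]
n = len(y)
mean_x, mean_y = sum(x) / n, sum(y) / n

sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
sxx = sum((a - mean_x) ** 2 for a in x)
ssy = sum((b - mean_y) ** 2 for b in y)

# Pearson's correlation, computed without fitting a line
r = sxy / math.sqrt(sxx * ssy)

# Proportion of variation explained, from the least-squares predictions.
# For a least-squares fit the mean of the predictions equals the mean of Y.
slope = sxy / sxx
predicted = [mean_y + slope * (a - mean_x) for a in x]
ss_pred = sum((p - mean_y) ** 2 for p in predicted)
proportion_explained = ss_pred / ssy

print(round(r, 3))                     # 0.627
print(round(r ** 2, 3))                # 0.393
print(round(proportion_explained, 3))  # 0.393
```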

Sum of Squares and Variances。

Variance is computed by dividing the variation (Sum of Squares) by N (for a population) or N-1 (for a sample). The relationships spelled out above in terms of variation also hold for variance.

variance total = variance of prediction + variance of errors of prediction

- r2 is the proportion of variance explained, and equally the proportion of variation explained
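A short sketch of why the ratio is unchanged: every sum of squares is divided by the same number, so the divisor cancels. It uses the sums of squares from this section's example with N = 5 and treats the data as a sample (divisor N - 1); the variable names are illustrative.

```python
n = 5
ssy, ss_pred, sse = 4.597, 1.806, 2.791   # sums of squares from the example

# Sample variances: divide each sum of squares by N - 1
var_total = ssy / (n - 1)
var_pred = ss_pred / (n - 1)
var_error = sse / (n - 1)

print(round(var_pred + var_error, 5), round(var_total, 5))  # 1.14925 1.14925
print(round(var_pred / var_total, 3))   # 0.393
print(round(ss_pred / ssy, 3))          # 0.393
```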

Summary Table。

It is often convenient to summarize the partitioning of the data in a table.

- The degrees of freedom column (df) shows the degrees of freedom for each source of variation

- The degrees of freedom for the sum of squares explained is equal to the number of predictor variables

- This will always be 1 in simple regression

- The error degrees of freedom is equal to the total number of observations minus 2

- In this example, it is 5 - 2 = 3

- The total degrees of freedom is the total number of observations minus 1

| Source | Sum of Squares | df | Mean Square |
|---|---|---|---|
| Explained | 1.806 | 1 | 1.806 |
| Error | 2.791 | 3 | 0.930 |
| Total | 4.597 | 4 | |
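The degrees of freedom and mean squares in the table can be reproduced as follows. This is a sketch: the error degrees of freedom are written as N - k - 1 for k predictors, which reduces to N - 2 in simple regression, and each mean square is taken to be the sum of squares divided by its degrees of freedom.

```python
n_obs = 5          # number of observations in the example
n_predictors = 1   # simple regression has one predictor

ss_explained, ss_error = 1.806, 2.791
ss_total = ss_explained + ss_error

df_explained = n_predictors
df_error = n_obs - n_predictors - 1   # 5 - 2 = 3 in simple regression
df_total = n_obs - 1

ms_explained = ss_explained / df_explained
ms_error = ss_error / df_error

print(f"Explained  {ss_explained:.3f}  {df_explained}  {ms_explained:.3f}")
print(f"Error      {ss_error:.3f}  {df_error}  {ms_error:.3f}")
print(f"Total      {ss_total:.3f}  {df_total}")
```

Note that the degrees of freedom add up in the same way as the sums of squares: 1 + 3 = 4.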

Understanding r2。

- AKA Coefficient of determination

- Goodness of fit of a model

- Measure of how well the regression line approximates the real data points

- In multiple regression it never decreases when predictors are added, even uninformative ones (see adjusted R2)

- Example

r2 = 0.7

- 70% of the variation in the response variable can be explained by the explanatory variable

- 30% can be attributed to unknown, lurking variables or inherent variability

Quiz。

Quiz