Statistics for Decision Makers - 06.02 - Research Design - Measurement

<slideshow style="nobleprog" headingmark="。" incmark="…" scaled="false" font="Trebuchet MS" footer="www.NobleProg.co.uk" subfooter="Training Courses Worldwide">

- title

- 06.02 - Research Design - Measurement

- author

- Bernard Szlachta (NobleProg Ltd) bs@nobleprog.co.uk

</slideshow>

Goals。

- Determine which way of measuring things is most reliable

- Define reliability

- Compute reliability from the true score and error variance

- Define standard error of measurement

- State the effect of test length on reliability

- Distinguish between reliability and validity

- Categorize validity

- Define the relationship between reliability, validity and power

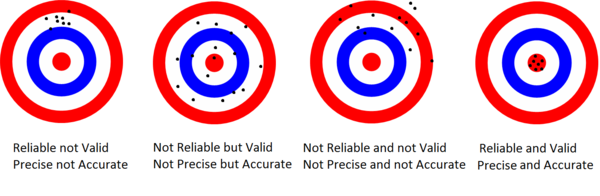

Validity and Reliability。

Example Use Cases。

Customer satisfaction is measured using two methods: online surveys and telephone interviews.

- Which method is more consistent (i.e. produces similar results under consistent conditions)?

- How can we increase reliability to a specific level?

Measurement 。

- Weight and height are easy to measure.

- Human satisfaction is less so.

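Reliability and validity are two concepts commonly used in psychometrics to assess the quality of measurement: reliability is about the consistency of a measurement, validity about whether it measures what it is supposed to measure.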

Types of Reliability。

- Inter-rater

- Test-retest (intra-rater)

- Inter-method (parallel-forms)

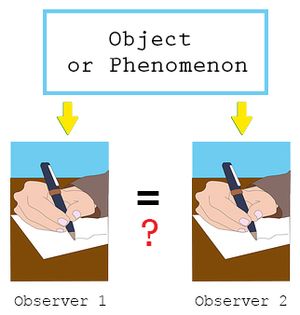

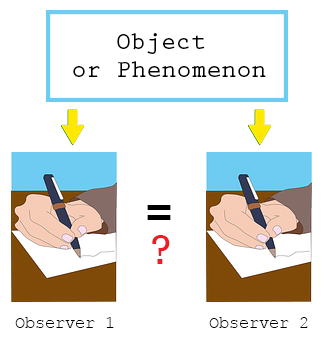

Inter-rater 。

- Assesses the degree of agreement between two or more raters (judges) in their appraisals.

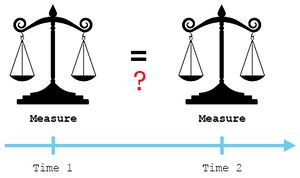

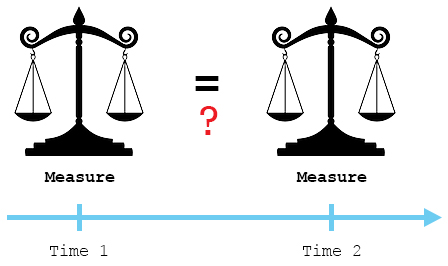

Test-retest (intra-rater)。

- Assesses the degree to which test scores are consistent from one test administration to the next.

- A single rater uses the same methods or instruments under the same testing conditions.

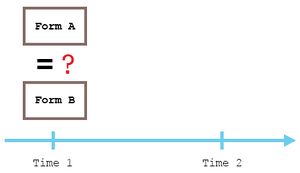

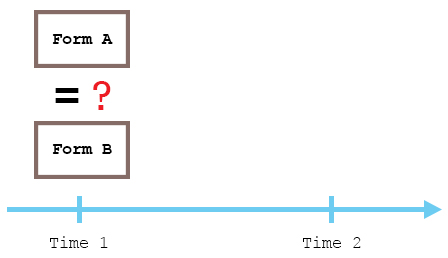

Inter-method (parallel-forms)。

- Assesses the degree to which test scores are consistent when there is a variation in the methods or instruments used.

- This allows inter-rater reliability to be ruled out

Inter-method Reliability。

- It is simply the correlation between parallel forms of a test.

- A parallel form is an alternate test form that is equivalent in terms of content, response processes and statistical characteristics.

- E.g. multiple ways of measuring IQ.

- Example

We send two kinds of survey to the same group of people.

- We compare the results on both of them and calculate how the results are correlated.

- Suppose the correlation (the reliability estimate) is 0.60.

- We can conclude that the inter-method reliability is low, so the two surveys do not measure satisfaction very consistently.
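To make the example concrete, here is a minimal Python sketch of estimating inter-method reliability as the Pearson correlation between the two survey forms. The scores and the helper name `pearson_r` are hypothetical, chosen only for illustration; they are not the data behind the example above.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical satisfaction scores (1-10) from the same 8 customers
online_survey = [7, 5, 9, 6, 8, 4, 7, 6]
phone_interview = [6, 6, 8, 7, 9, 5, 5, 6]

r = pearson_r(online_survey, phone_interview)
print(f"Estimated inter-method reliability: {r:.2f}")
```

Each customer must appear in the same position in both lists, since the correlation pairs the two forms person by person.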

The True Score。

Every measurement can be considered the sum of two components:

- The True Score, i.e. what we really want to measure.

- The Error score, noise.

<math>X = T + e</math>

- X: test result

- T: true score

- e: error

- Example

- An exam candidate got a score of X = 90.

- Let us assume that the candidate really knew the answers to T = 80 questions and got another e = 10 right by guessing.

- If we measure the same person using two different (parallel) tests, then X1 and X2 will differ, but T1 and T2 should be the same.

- X1 and X2 are different due to random error and bias.

Reliability Definition。

- Formula

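Intuitively, reliability is the share of the test result that reflects the true score:

<math>r_{test,test} = \frac{\text{True Score } (T)}{\text{Test Result } (X)} = \frac{T}{X}</math>

- We want the reliability of the test over many test takers, so we work with variances. Because the true score and the error are assumed to be uncorrelated, the variance sum law gives <math>\mathrm{var}(X) = \mathrm{var}(T) + \mathrm{var}(e)</math>, so we can state:

<math>r_{test,test} = \frac{\mathrm{var}(T)}{\mathrm{var}(X)} = \frac{\mathrm{var}(T)}{\mathrm{var}(T) + \mathrm{var}(e)}</math>

- We do not know the true score, so its variance (and hence the reliability) has to be estimated.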
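A small simulation can make the definition tangible. The sketch below assumes normally distributed true scores (SD 10) and errors (SD 5), so var(T) / (var(T) + var(e)) = 100 / 125 = 0.8. Because the true scores are simulated, the variance ratio can be computed directly; with real data T is unknown, and the correlation between two parallel forms (each X = T + e with independent errors) serves as the estimate. All numbers and names are assumptions for illustration.

```python
import random
from math import sqrt
from statistics import mean, pvariance


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


rng = random.Random(42)        # fixed seed so the run is repeatable
N = 20_000                     # simulated test takers
SD_TRUE, SD_ERROR = 10.0, 5.0  # assumed spread of true scores and of errors

true_scores = [rng.gauss(100, SD_TRUE) for _ in range(N)]
# Two parallel forms: same true score T, independent errors e (X = T + e)
form1 = [t + rng.gauss(0, SD_ERROR) for t in true_scores]
form2 = [t + rng.gauss(0, SD_ERROR) for t in true_scores]

# var(T) / (var(T) + var(e)) from the assumed population values: 100 / 125
theoretical = SD_TRUE**2 / (SD_TRUE**2 + SD_ERROR**2)
# var(T) / var(X) from the simulated scores (possible only because T is simulated)
from_variances = pvariance(true_scores) / pvariance(form1)
# What we can do with real data: correlate two parallel forms
from_parallel_forms = pearson_r(form1, form2)

print(f"theoretical={theoretical:.3f}  "
      f"var(T)/var(X)={from_variances:.3f}  "
      f"parallel-forms r={from_parallel_forms:.3f}")
```

All three numbers should land close to 0.8, which is why the parallel-forms correlation can stand in for the unobservable variance ratio.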

Estimating Reliability 。

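Reliability cannot be computed directly; it has to be estimated, for example from the correlation between parallel forms of a test or between repeated administrations of it.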

Reliability and Power。

- The higher the reliability, the higher the power of the experiment.

- Power is the probability of detecting an effect when one really exists, i.e. of correctly rejecting a false null hypothesis.
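One way to see the link is through attenuation: if the outcome is measured with error, the observed standardized effect shrinks by roughly the square root of the reliability, and power drops with it. The sketch below assumes a two-group comparison, a true effect of d = 0.5, 50 people per group, and a normal approximation to the two-sided test; the function names are illustrative, not from a particular library.

```python
from math import sqrt
from statistics import NormalDist


def observed_effect_size(true_d, reliability):
    """Standardized effect after measurement error inflates the outcome's SD."""
    return true_d * sqrt(reliability)


def approx_power(d, n_per_group, alpha=0.05):
    """Normal-approximation power of a two-sided two-sample test.

    The tiny probability of rejecting in the wrong direction is ignored.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    return std_normal.cdf(d * sqrt(n_per_group / 2) - z_crit)


TRUE_D, N_PER_GROUP = 0.5, 50  # assumed true effect and sample size
for reliability in (1.0, 0.8, 0.6):
    d_obs = observed_effect_size(TRUE_D, reliability)
    print(f"reliability={reliability:.1f}  observed d={d_obs:.2f}  "
          f"power={approx_power(d_obs, N_PER_GROUP):.2f}")
```

Under these assumptions, dropping the reliability from 1.0 to 0.6 lowers the power from about 0.70 to about 0.49, with no change in the underlying effect.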

Increasing Reliability。

- Improve the quality of the items.

- Increase the number of items.

- Improve the quality of the items

- Items that are so easy that almost everyone gets them right, or so difficult that almost no one does, are poor items: they provide very little information.

- In most contexts, items which about half the people get correct are the best (other things being equal).

- Items that do not correlate with other items can usually be improved.

- Remove confusing and/or ambiguous items.

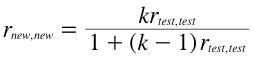
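A basic item analysis covers the first three points above: item difficulty (the proportion of people answering correctly) and the correlation between each item and the total of the remaining items. The sketch below uses hypothetical 0/1 responses, and the flagging thresholds (difficulty outside 0.2-0.8, item-rest correlation below 0.2) are illustrative assumptions rather than fixed rules.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 if either list is constant."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # e.g. an item everyone answered correctly carries no information
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sqrt(sxx * syy)


def item_report(responses, low=0.2, high=0.8, min_r=0.2):
    """For each item return (index, difficulty, item-rest r, flagged).

    Thresholds are illustrative assumptions, not fixed rules.
    """
    report = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        rest = [sum(row) - row[j] for row in responses]  # total without this item
        p = mean(item)               # difficulty: proportion answering correctly
        r = pearson_r(item, rest)    # does the item agree with the rest of the test?
        flagged = p < low or p > high or r < min_r
        report.append((j, p, r, flagged))
    return report


# Hypothetical 0/1 answers: rows are respondents, columns are items
responses = [
    [1, 1, 0, 1, 0],
    [1, 0, 0, 1, 1],
    [1, 1, 1, 0, 0],
    [1, 0, 0, 1, 1],
    [1, 1, 0, 0, 0],
    [1, 0, 1, 1, 1],
]

for j, p, r, flagged in item_report(responses):
    note = "  <- review" if flagged else ""
    print(f"item {j}: difficulty={p:.2f}  item-rest r={r:.2f}{note}")
```

Item 0, which everyone answered correctly, is flagged: it cannot distinguish between respondents. The item is correlated with the rest of the test rather than the full total so that it is not partly correlated with itself.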

Increasing Reliability。

- Increase the number of items

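Increasing the number of items increases reliability, as predicted by the Spearman-Brown formula:

<math>r_{new,new} = \frac{k \, r_{test,test}}{1 + (k - 1) \, r_{test,test}}</math>

- <math>k</math>: the factor by which the test length is increased

- <math>r_{new,new}</math>: the reliability of the new, longer test

- <math>r_{test,test}</math>: the current reliability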

- Example

- Increasing the number of items from 50 to 75 (k=1.5) would increase the reliability from 0.70 to 0.78.
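The Spearman-Brown prophecy formula, r_new = k * r / (1 + (k - 1) * r), and its inverse are easy to script, which also answers the earlier question of how to reach a specific reliability. This sketch reproduces the 50-to-75-item example and then solves the formula for k to find the test length needed to reach a target reliability of 0.90 (the target is an assumed value).

```python
import math


def spearman_brown(r, k):
    """Predicted reliability when test length is multiplied by k."""
    return k * r / (1 + (k - 1) * r)


def length_factor_for(r_current, r_target):
    """Length factor k needed to move reliability from r_current to r_target."""
    if not (0 < r_current < 1 and 0 < r_target < 1):
        raise ValueError("reliabilities must lie strictly between 0 and 1")
    return r_target * (1 - r_current) / (r_current * (1 - r_target))


print(round(spearman_brown(0.70, 1.5), 2))  # 50 -> 75 items: 0.78

k = length_factor_for(0.70, 0.90)  # assumed target reliability of 0.90
print(f"k = {k:.2f}, i.e. about {math.ceil(50 * k)} items instead of 50")
```

The formula assumes the added items are of the same quality as the existing ones; adding weak items will not deliver the predicted gain.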

Validity。

- The validity of a test refers to whether the test measures what it is supposed to measure.

- "The degree to which evidence and theory support the interpretations of test scores, as entailed by proposed uses of tests."

- "Whether a study is able to scientifically answer the questions it is intended to answer."

Types of Validity。

- Test validity

- Construct validity

- Convergent validity

- Discriminant validity

- Content validity

- Representation validity

- Face validity

- Criterion validity

- Concurrent validity

- Predictive validity

- Experimental validity

- Statistical conclusion validity

- Internal validity

- External validity

- Ecological validity

- Diagnostic validity

Face Validity。

- Does the test "on its face" appear to measure what it is supposed to measure?

- Example

- An Asian history test consisting of a series of questions about Asian history would have high face validity.

- If the test included primarily questions about American history then it would have little or no face validity as a test of Asian history.

Predictive Validity (Empirical Validity)。

- A test's ability to predict the relevant behaviour.

- Example

- The main way in which the SAT (UK: A level) tests are validated is by their ability to predict college grades.

- Thus, to the extent these tests are successful at predicting college grades they are said to possess predictive validity.

Construct Validity。

- A test has construct validity if its pattern of correlations with other measures is in line with the construct it is purporting to measure.

- Construct validity can be established by showing a test has both convergent and divergent validity.

- A test has convergent validity if it correlates with other tests that are also measures of the construct in question.

- Divergent validity is established by showing the test does not correlate highly with tests of other constructs.

- Example

- Suppose one wished to establish the construct validity of a new test of spatial ability.

- Convergent and divergent validity could be established by showing the test correlates relatively highly with other measures of spatial ability but less highly with tests of verbal ability or social intelligence.
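The check described in this example can be sketched as a comparison of correlations. The scores below are hypothetical: a new spatial test, an established spatial test and a verbal test taken by the same six people. Convergent validity shows up as a high correlation with the other spatial measure, divergent validity as a much weaker one with the verbal measure.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for the same six people
new_spatial = [12, 15, 9, 18, 11, 14]     # the new test being validated
other_spatial = [11, 16, 10, 17, 12, 13]  # established measure of the same construct
verbal = [14, 10, 15, 12, 9, 13]          # measure of a different construct

convergent = pearson_r(new_spatial, other_spatial)
divergent = pearson_r(new_spatial, verbal)
print(f"convergent r = {convergent:.2f}, divergent r = {divergent:.2f}")
# Expected pattern: clearly stronger correlation with the same construct
print("pattern consistent with construct validity:", convergent > abs(divergent))
```

With six people this is only an illustration; a real validation study would need a much larger sample before the pattern could be trusted.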

Reliability and correlation between the test and other measures。

- The reliability of a test limits the size of the correlation between the test and other measures.

- In general, the correlation of a test with another measure will be lower than the test's reliability.

- A test should not correlate with something else as highly as it correlates with a parallel form of itself.

- Theoretically, the highest correlation a test can have with another measure is the square root of its reliability (reached only if the other measure is perfectly reliable).

- Example

- If a test has a reliability of 0.81 then it could correlate as highly as 0.90 with another measure.

- Detecting reliability problems

- A correlation above the upper limit set by reliabilities can act as a red flag.

- Example

- Vul, Harris, Winkielman, and Pashler (2009) found that in many studies the correlations between fMRI (functional magnetic resonance imaging) activation patterns and personality measures were higher than the reliabilities of those measures would allow.

- A careful examination of these studies revealed serious flaws in the way the data were analysed.
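The upper limit can be computed directly. Under classical test theory, the observed correlation between two measures cannot exceed the square root of the product of their reliabilities; when the other measure is assumed to be perfectly reliable this reduces to the square root of the test's own reliability, as in the 0.81 to 0.90 example. The reliabilities and correlation in the red-flag check are hypothetical values, not figures from Vul et al.

```python
from math import sqrt


def max_correlation(rel_x, rel_y=1.0):
    """Highest correlation two measures can show, given their reliabilities."""
    return sqrt(rel_x * rel_y)


def exceeds_limit(observed_r, rel_x, rel_y=1.0):
    """Red flag: an observed correlation larger than the reliabilities allow."""
    return abs(observed_r) > max_correlation(rel_x, rel_y)


# Slide example: reliability 0.81, other measure assumed perfectly reliable
print(f"upper limit: {max_correlation(0.81):.2f}")

# Hypothetical study: reliabilities 0.7 and 0.8, reported correlation 0.85
print("red flag:", exceeds_limit(0.85, 0.7, 0.8))
```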

Quiz。

Quiz

<quiz display=simple >

{ A brand preference survey seems to be inconsistent with similar surveys conducted by a different organization. As the company that owns the brand, what would you try to estimate to determine whether the survey is up to the job?
|type="()"}
- Predictive Validity
- Intra-rater Reliability
+ Inter-method Reliability
- Power

{

Answer >>

Inter-method Reliability

}

{ Imagine a situation where you can make a test either more accurate or more precise, but not both. Which would you choose?
|type="()"}
+ Accuracy
- Precision

{

Answer >>

Accuracy

}

{ It turns out that the reliability of a survey is only 0.6, so you want to make it higher. What can you do?
|type="[]"}
- Change the significance level
+ Increase the number of questions in the survey
- Increase the number of respondents
+ Remove confusing questions

{

Answer >>

Increase the number of questions in the survey

Remove confusing questions

}