EX210


Certified System Administrator for OpenStack

Course Overview⌘

What is OpenStack?⌘

Outline⌘

- Day I:

- Session I:

- Introduction

- Session II:

- OpenStack Administration

- Session III:

- OpenStack Administration

- Session IV:

- Basic Environment


Outline #2⌘

- Day II:

- Session I:

- Keystone

- Session II:

- Glance

- Session III:

- Nova

- Session IV:

- Neutron


Outline #3⌘

- Day III:

- Session I:

- Neutron

- Session II:

- Horizon

- Session III:

- Cinder

- Session IV:

- Swift


Outline #4⌘

- Day IV:

- Session I:

- Heat

- Session II:

- Ceilometer

- Session III:

- Adding Compute Node

- Session IV:

- Case Studies, Certification and Surveys


Day I - Session I⌘

"I think there is a world market for maybe five computers"⌘

What is OpenStack?⌘

- Open source software for creating private and public clouds

- IaaS (Infrastructure as a Service) solution

- Launched as a joint project between Rackspace and NASA

- Managed by the OpenStack Foundation

- Widely regarded as the leading open source private cloud platform in 2014

- Provides APIs compatible with Amazon EC2 and Amazon S3

- Written in Python

Foundations of cloud computing⌘

Base features of the cloud:

- it is completely transparent to users

- it delivers value to users in the form of services

- its storage and computing resources appear infinite from the users' perspective

- it is geographically distributed

- it is highly available

- it runs on commodity hardware

- it leverages computer network technologies

- it leverages virtualization and clustering technologies

- it is easily scalable (it scales out)

- it operates on the basis of the distributed computing paradigm

- it implements "pay-as-you-go" billing

- it is multi-tenant

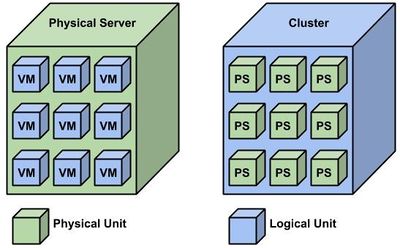

Virtualization vs clustering⌘

OpenStack evolution⌘

- 1990 - origins of the grid computing paradigm

- 1997 - distributed.net volunteer computing platform

- 2000 - origins of the cloud computing paradigm

- 2006 - Amazon EC2 (Elastic Compute Cloud) released

- 2010 - OpenStack project launched

- 2012 - OpenStack Foundation founded

- 2013 - Red Hat introduces commercial support for OpenStack Grizzly

- 2015 - OpenStack Kilo released

OpenStack distributions⌘

- RHEL OSP (Red Hat Enterprise Linux OpenStack Platform)

- RDO (Red Hat Distribution for OpenStack) (https://www.rdoproject.org/Main_Page)

- Ubuntu OpenStack (http://www.ubuntu.com/cloud/openstack)

- SUSE Cloud (https://www.suse.com/products/suse-cloud/)

- Mirantis OpenStack (https://www.mirantis.com/)

- Rackspace Private Cloud Software (http://www.rackspace.com/cloud/private)

- and more

OpenStack releases⌘

| OpenStack Release | RHEL OSP Release | Status | Release Date (DD-MM-YYYY) | Components | Release Notes |
|---|---|---|---|---|---|
| Liberty | 8 | Development | 15-10-2015 | Nova, Glance, Swift, Horizon, Keystone, Neutron, Cinder, Heat, Ceilometer, Trove, Sahara, Ironic, Zaqar, Manila, Designate, Barbican | ??? |
| Kilo | 7 | Stable | 30-04-2015 | Nova, Glance, Swift, Horizon, Keystone, Neutron, Cinder, Heat, Ceilometer, Trove, Sahara, Ironic | Kilo |
| Juno | 6 | Old Stable | 16-10-2014 | Nova, Glance, Swift, Horizon, Keystone, Neutron, Cinder, Heat, Ceilometer, Trove, Sahara | Juno |
| Icehouse | 5 | EOL | 17-04-2014 | Nova, Glance, Swift, Horizon, Keystone, Neutron, Cinder, Heat, Ceilometer, Trove | Icehouse |
| Havana | 4 | EOL | 17-10-2013 | Nova, Glance, Swift, Horizon, Keystone, Neutron, Cinder, Heat, Ceilometer | Havana |
| Grizzly | 3 | EOL | 04-04-2013 | Nova, Glance, Swift, Horizon, Keystone, Quantum, Cinder | Grizzly |
| Folsom | --- | EOL | 27-09-2012 | Nova, Glance, Swift, Horizon, Keystone, Quantum, Cinder | Folsom |
| Essex | --- | EOL | 05-04-2012 | Nova, Glance, Swift, Horizon, Keystone | Essex |
| Diablo | --- | EOL | 22-09-2011 | Nova, Glance, Swift | Diablo |
| Cactus | --- | EOL | 15-04-2011 | Nova, Glance, Swift | Cactus |
| Bexar | --- | EOL | 03-02-2011 | Nova, Glance, Swift | Bexar |
| Austin | --- | EOL | 21-10-2010 | Nova, Swift | Austin |

OpenStack deployment solutions⌘

- RHEL OSP Installer (replaced by OSP Director in OSP 7)

- RDO Manager (https://www.rdoproject.org/RDO-Manager)

- Rackspace Private Cloud (http://www.rackspace.com/cloud/private)

- PackStack (https://wiki.openstack.org/wiki/Packstack)

- DevStack (http://docs.openstack.org/developer/devstack/)

- Puppet (https://forge.puppetlabs.com/puppetlabs/openstack)

- Manual

OpenStack services⌘

- Core services:

- Keystone - Identity Service; authentication and authorization

- Swift - Object Storage Service; cloud storage

- Cinder - Block Storage Service; non-volatile volumes

- Glance - Image Service; instance images and snapshots

- Neutron - Networking Service; network virtualization

- Nova - Compute Service; cloud computing fabric controller

- Horizon - Dashboard Service; web GUI

- Heat - Orchestration Service; template-based deployment of instances and other resources

- Ceilometer - Metering Service; telemetry and billing

OpenStack services #2⌘

- Additional services:

- Trove - Database Service; DBaaS (DataBase as a Service)

- Sahara - Data Processing Service (elastic MapReduce); Hadoop cluster provisioning

- Ironic - Bare Metal Provisioning Service; bare metal instances

- Zaqar - Multi-tenant Cloud Messaging Service; cross-cloud communication

- Manila - Shared Filesystem Service; shared file systems as a service

- Designate - DNS Service; DNSaaS (DNS as a Service)

- Barbican - Key Manager Service; secure storage of secrets, keys and certificates

- Underpinning services:

- AMQP (Advanced Message Queuing Protocol) - messaging service

- Hypervisor - compute service; compute and storage virtualization

- MariaDB - SQL database

- MongoDB - NoSQL database

- Apache - web server

OpenStack competitors⌘

- Private cloud vendors:

- VMware (http://www.vmware.com/)

- Apache CloudStack (http://cloudstack.apache.org/)

- Public cloud vendors:

- Amazon EC2 (on Xen) (http://aws.amazon.com/ec2/)

- Microsoft Azure (on Hyper-V) (https://azure.microsoft.com/en-us/)

- Google Compute Engine (on KVM) (https://cloud.google.com/compute/)

EX210 exam⌘

- Objectives: http://www.redhat.com/en/services/training/ex210-red-hat-certified-system-administrator-red-hat-openstack-exam

- Certification Authority: Red Hat (http://www.pearsonvue.com/)

- Cost: 705 EUR (prices in local currency are updated daily)

- Duration: 180 minutes

- Number of Tasks: 15

- Passing Score: 70%

- Questions Type: lab

- Goal: Install and configure OpenStack using PackStack

References⌘

- Books:

- K. Pepple, "Deploying OpenStack", O'Reilly

- T. Fifield et al., "OpenStack Operations Guide", O'Reilly

- K. Jackson and C. Bunch, "OpenStack Cloud Computing Cookbook", Packt Publishing

- Websites:

- OpenStack documentation: http://docs.openstack.org

- OpenStack wiki: http://wiki.openstack.org

- Red Hat website: http://access.redhat.com

- Manuals

- Internet

OpenStack lab⌘

- Lab components:

- Laptop with Windows:

- PuTTY - SSH client for Windows

- VMware environment

- Credentials: root / terminal

- Lab Exercise 1.1

- Snapshots


Day I - Session II and III⌘

Basic terms⌘

- tenant - group of users

- instance - VM (Virtual Machine)

- image - uploaded VM template

- snapshot - created VM template

- ephemeral storage - volatile storage

- block storage - non-volatile storage

- security group - virtual firewall

IaaS model⌘

- IaaS (Infrastructure as a Service)

- Business model of provisioning virtual machines in the cloud

- Leverages compute, storage and network virtualization techniques

- VMs run on top of hypervisors managed by the CSP (Cloud Service Provider)

- Users are responsible for the VM OS (aka Guest OS) management

Supported hypervisors⌘

- KVM - standard hypervisor for Linux systems

- QEMU - emulator; used for Linux guests when hardware-assisted KVM is not available

- Hyper-V - Microsoft hypervisor for Windows systems

- ESXi - standard hypervisor for VMware systems

- XenServer - Xen-based hypervisor for Linux systems

- Ironic - no hypervisor (bare metal provisioning)

- LXC - Linux containers; OS-level virtualization rather than a full hypervisor

Supported image formats⌘

- raw - unstructured format

- vhd - Microsoft Virtual Hard Disk format (also used by Xen and VirtualBox)

- vmdk - VMware format

- vdi - VirtualBox format

- iso - optical disc image format

- qcow2 - QEMU format

- aki - Amazon kernel format

- ari - Amazon ramdisk format

- ami - Amazon machine format

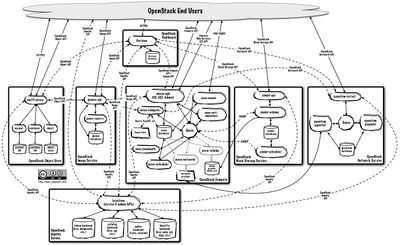

Basic architecture⌘

Design concerns⌘

- Considerations:

- hardware - performance, resilience, volume

- network - performance, resilience

- distribution - supported releases, architecture and operating system

- release - supported components and features

- architecture - performance, resilience

- operating system - performance, support, integration

- Selection criteria:

- business requirements - What needs to be provided?

- technical requirements - What needs to be built?

- customizability - How much influence will I have?

- integration - How easily will it fit into my existing IT infrastructure?

- support - Can I get professional support?

- price - How much will it cost?

Installation concerns⌘

- Installation modes:

- manual:

- based on RDO, Ubuntu OpenStack, SUSE Cloud, etc.

- best suited for medium-size development clusters

- manual installation is the proper way to learn how an OpenStack cluster works

- automatic:

- based on RHEL OSP Installer, PackStack, DevStack, etc.

- best suited for small development and production clusters

- automatic installation is the proper way to administer an OpenStack cluster


Configuration concerns⌘

- Configuration sources:

- files - administrator's settings

- databases - users' settings

- Configuration files:

[section] [key]=[value]

- Configuration tools:

- crudini - updates values in configuration files:

crudini --set [file] [section] [key] [value]

- openstack-db - initializes databases

openstack-db --init --service [service]
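To make the effect of `crudini --set [file] [section] [key] [value]` concrete, here is a minimal Python sketch built on the standard `configparser` module. It is not crudini's implementation: crudini is designed to keep the rest of the file intact, whereas `configparser` drops comments when it rewrites the file. The helper name `ini_set`, the file name and the values are hypothetical.

```python
import configparser
import os
import tempfile


def ini_set(path: str, section: str, key: str, value: str) -> None:
    """Set key=value under [section] in an INI file, similar in effect to `crudini --set`."""
    parser = configparser.ConfigParser(interpolation=None)  # keep '%' in values literal
    parser.optionxform = str  # preserve key case (configparser lower-cases keys by default)
    parser.read(path)  # a missing file is treated as empty
    # [DEFAULT] always exists implicitly; any other section may need creating.
    if section != configparser.DEFAULTSECT and not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, key, value)
    with open(path, "w") as fh:
        parser.write(fh)


if __name__ == "__main__":
    # Hypothetical file and values, used only for illustration.
    conf = os.path.join(tempfile.mkdtemp(), "example.conf")
    ini_set(conf, "DEFAULT", "debug", "False")
    ini_set(conf, "database", "connection", "mysql://user:password@controller/example")
    with open(conf) as fh:
        print(fh.read())
```

Running `ini_set` twice with the same section and key simply overwrites the value, which mirrors how `crudini --set` is typically used in idempotent setup scripts.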

Administration concerns⌘

- Interfaces:

- CLI - provided by OpenStack services

- web GUI - provided by the Horizon dashboard service

- API - provided by OpenStack services

- Basic commands:

- openstack - general CLI client

- openstack-status - shows status overview of installed OpenStack services

- openstack-service - controls enabled OpenStack services

- RC file:

- sets up required environment variables

- can be downloaded from the OpenStack Horizon dashboard service

- must be sourced in the shell before using the CLI clients

Automation concerns⌘

- Automation environments:

- Puppet:

- the most popular automation environment for OpenStack

- favored mostly by developers

- Ansible:

- emerging, universal automation environment

- favored mostly by administrators


- Container technologies:

- Docker - mature container engine

- Kubernetes - emerging container orchestration engine

Growth planning⌘

- Scalability:

- controller and networker nodes scale up (vertically)

- compute nodes scale out (horizontally)

- Considerations:

- compute resources:

- VCPUs - total number of VCPUs utilized by instances

- RAM - total amount of RAM utilized by instances

- storage resources:

- ephemeral storage - total amount of volatile volumes utilized by instances

- Cinder volumes - total amount of non-volatile volumes utilized by instances

- Glance images and snapshots - total amount of storage utilized by instances' images

- backup volumes - total amount of storage utilized by instances' backups


- Monitoring software:

- SNMP (Simple Network Management Protocol) - basic monitoring solution

- Nagios - enhanced monitoring solution
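The compute and ephemeral-storage considerations above are simple sums over the flavors of running instances. The Python sketch below illustrates that arithmetic under stated assumptions: the flavor table is assumed to match Nova's classic default `m1.*` flavors, image-backed instances are assumed to keep their root and ephemeral disks on the compute node, and Cinder volumes, Glance images and backups are not modeled. The names `Flavor`, `FLAVORS` and `footprint` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flavor:
    vcpus: int
    ram_mb: int
    root_gb: int
    ephemeral_gb: int = 0


# Assumed flavor definitions, matching Nova's classic default m1.* flavors.
FLAVORS = {
    "m1.tiny": Flavor(vcpus=1, ram_mb=512, root_gb=1),
    "m1.small": Flavor(vcpus=1, ram_mb=2048, root_gb=20),
    "m1.medium": Flavor(vcpus=2, ram_mb=4096, root_gb=40),
}


def footprint(instance_flavors):
    """Total VCPUs, RAM and local (ephemeral) disk consumed by a list of instances."""
    totals = {"vcpus": 0, "ram_mb": 0, "ephemeral_gb": 0}
    for name in instance_flavors:
        flavor = FLAVORS[name]
        totals["vcpus"] += flavor.vcpus
        totals["ram_mb"] += flavor.ram_mb
        # Root and ephemeral disks of image-backed instances live on the compute node.
        totals["ephemeral_gb"] += flavor.root_gb + flavor.ephemeral_gb
    return totals


if __name__ == "__main__":
    print(footprint(["m1.small", "m1.small", "m1.medium"]))
    # {'vcpus': 4, 'ram_mb': 8192, 'ephemeral_gb': 80}
```

Comparing these totals with the physical capacity of the compute nodes gives a first estimate of when to scale out.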

High Availability concerns⌘

- Native solutions:

- Infrastructure layer:

- HAProxy - front-end (application) load balancing system

- MariaDB Galera cluster - back-end (database) replication system

- Pacemaker - cluster resource management system

- Corosync - cluster messaging system

- Application layer:

- Neutron LBaaS service - front-end to LB solutions

- Object storage backends - built-in replication mechanism

- Multiple backends - support for backup backends


- Other solutions:

- RAID (Redundant Array of Independent Disks)

- LAG (Link Aggregation Group)

Day I - Session IV⌘

Prerequisites⌘

- OpenStack repositories

- Underpinning software

- NTP (Network Time Protocol)

- SQL database - MariaDB, MySQL, PostgreSQL and others

- AMQP - RabbitMQ, Qpid, ZeroMQ

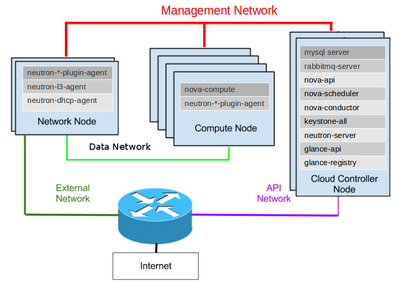

Nodes and networks⌘

- Nodes in the OpenStack lab

- Controller - controller / networker node

- Compute - compute node

- Networks in the OpenStack lab

- 10.0.0.0/24 (eth0) - OOB Management, External, API

- 172.16.0.0/24 (eth1) - Management, Data

AMQP⌘

- AMQP - application layer protocol for message-oriented middleware

- Roles:

- Publisher - publishes the message

- Broker - routes the message

- Consumer - processes the message

- The publisher and consumer roles are played by the OpenStack services

- OpenStack services exchange RPC (Remote Procedure Call) messages over AMQP

- RPC message types:

- Cast - asynchronous; the publisher does not wait for a result

- Call - synchronous; the publisher waits for the result
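The difference between cast and call can be shown with a toy in-process model. This Python sketch is an assumption-laden illustration, not oslo.messaging or RabbitMQ: the "broker" is just one `queue.Queue` per topic, a consumer thread plays the OpenStack service, and the topic `compute` and the methods `reboot` and `get_state` are hypothetical.

```python
import queue
import threading


class Broker:
    """Toy in-process broker: routes each message to a queue named after its topic."""

    def __init__(self):
        self._topics = {}
        self._lock = threading.Lock()

    def queue_for(self, topic):
        with self._lock:
            return self._topics.setdefault(topic, queue.Queue())


def consumer(broker, topic, handlers):
    """Process messages for one topic until a None sentinel arrives."""
    q = broker.queue_for(topic)
    while True:
        msg = q.get()
        if msg is None:  # shutdown sentinel
            break
        result = handlers[msg["method"]](**msg["args"])
        if msg["reply_to"] is not None:  # only a call expects an answer
            msg["reply_to"].put(result)


def cast(broker, topic, method, **args):
    """Publish and return immediately; nobody waits for a result."""
    broker.queue_for(topic).put({"method": method, "args": args, "reply_to": None})


def call(broker, topic, method, timeout=5.0, **args):
    """Publish with a private reply queue and block until the consumer answers."""
    reply = queue.Queue(maxsize=1)
    broker.queue_for(topic).put({"method": method, "args": args, "reply_to": reply})
    return reply.get(timeout=timeout)  # raises queue.Empty if no reply arrives in time


if __name__ == "__main__":
    broker = Broker()
    rebooted = []
    handlers = {
        "reboot": lambda name: rebooted.append(name),
        "get_state": lambda name: f"{name}: ACTIVE",
    }
    worker = threading.Thread(target=consumer, args=(broker, "compute", handlers), daemon=True)
    worker.start()
    cast(broker, "compute", "reboot", name="vm1")            # returns at once
    print(call(broker, "compute", "get_state", name="vm1"))  # prints "vm1: ACTIVE"
    broker.queue_for("compute").put(None)
    worker.join()
```

Because a single consumer reads the topic queue in FIFO order, the cast is processed before the call's answer comes back; with real brokers and multiple consumers no such ordering should be assumed.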

Day II - Session I⌘

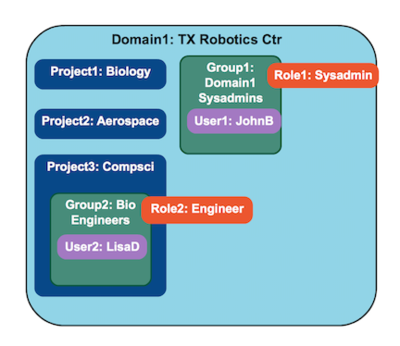

Objects⌘

- User - human or system user

- Group - group of users

- Project - unit of ownership of resources (formerly called tenant)

- Domain - container for projects, users and groups

- Role - authorization level

- Token - identifying credential

- Extras - object metadata

- Service - OpenStack service

- Endpoint - Service URL

APIv3

Components⌘

- Keystone Client - any application that uses Keystone service

- Keystone API Service - handles JSON requests

- Keystone Identity Service - provides data about users and groups, and authenticates them

- Keystone Resource Service - provides data about projects and domains

- Keystone Assignment Service - provides data about roles and authorizes users and groups

- Keystone Token Service - manages and validates tokens

- Keystone Catalog Service - provides data about endpoints

Backends⌘

- Keystone is not responsible for storing objects

- Instead, it leverages the following backends:

- SQL - local or remote SQL database

- LDAP (Lightweight Directory Access Protocol)

- Templated - endpoint templates

Tokens⌘

- Authentication process varies depending on a token type in use

- UUID - random UUID4 values; issued, stored and validated by Keystone

- PKI - based on x509 certificates; issued by Keystone, validated by OpenStack services

- PKIZ - PKI tokens with zlib compression

- Fernet - based on the Fernet symmetric encryption scheme; issued and validated by Keystone, not stored in a backend

- Token binding - embeds information from an external authentication mechanism inside a token

- Supported external authentication mechanisms: Kerberos, x509

Authentication process for UUID tokens⌘

- A user sends their credentials to the Keystone API service

- Keystone authenticates and authorizes the user

- Keystone generates a token, saves it in a backend, and sends it back to the user

- The user sends an API call, including the token, to a service's API service

- The service sends the token to the Keystone API service

- Keystone validates the token and sends a reply to the service

- The service authenticates and authorizes the user
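The UUID token flow above can be sketched in a few lines of Python. This is a hypothetical model, not Keystone's code or API: `ToyKeystone` keeps tokens in a dict that stands in for the token backend, `ToyService` stands in for any OpenStack service that asks Keystone to validate each token, and token expiry, projects and the HTTP layer are deliberately left out. The user name, password and role are illustrative.

```python
import hmac
import uuid


class ToyKeystone:
    """Hypothetical stand-in for Keystone issuing UUID tokens; not the real service."""

    def __init__(self, users):
        self._users = users   # username -> (password, set of role names)
        self._tokens = {}     # token backend: token id -> (username, roles)

    def issue_token(self, username, password):
        record = self._users.get(username)
        # compare_digest avoids leaking information through comparison timing
        if record is None or not hmac.compare_digest(record[0], password):
            raise PermissionError("authentication failed")
        token = uuid.uuid4().hex  # 32 hexadecimal characters
        self._tokens[token] = (username, frozenset(record[1]))
        return token

    def validate_token(self, token):
        """Return (username, roles) for a known token, or None if it is unknown."""
        return self._tokens.get(token)


class ToyService:
    """Hypothetical OpenStack service that asks Keystone to validate every token."""

    def __init__(self, keystone, required_role):
        self.keystone = keystone
        self.required_role = required_role

    def handle(self, token, request):
        info = self.keystone.validate_token(token)  # round trip to Keystone
        if info is None:
            return "401 Unauthorized"
        username, roles = info
        if self.required_role not in roles:
            return "403 Forbidden"
        return f"200 OK: {request} for {username}"


if __name__ == "__main__":
    keystone = ToyKeystone({"demo": ("secret", {"_member_"})})
    compute = ToyService(keystone, required_role="_member_")
    token = keystone.issue_token("demo", "secret")
    print(compute.handle(token, "list servers"))  # 200 OK: list servers for demo
```

The per-request round trip in `handle` is the cost that PKI and Fernet tokens try to reduce: PKI tokens can be checked by the service itself, and Fernet tokens need no backend storage.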

Configuration & Administration⌘

- Configuration files:

- /etc/keystone/keystone.conf - main Keystone service configuration file

- Services:

- openstack-keystone - Keystone service

- httpd - HTTP daemon

- Management tools:

- keystone - Keystone client

- keystone-manage - Keystone management tool

Day II - Session II⌘

Components⌘

- Glance Client - any application that uses Glance service

- Glance API Service - handles JSON requests

- Glance DAL (Database Abstraction Layer) - API for Glance <-> database communication

- Glance Store - middleware for Glance <-> backend communication

- Glance Domain Controller Service - middleware implementing main Glance functionalities

Backends⌘

- Glance is not responsible for physical data placement

- Instead, it leverages the following backends:

- Local - local directory / filesystem

- Swift - Swift object storage

- S3 - S3 object storage

- Ceph - Ceph block / object storage

- Sheepdog - Sheepdog object storage

- Cinder - Cinder volumes

- vSphere - VMware vSphere storage

- Glance cache

Configuration & Administration⌘

- Configuration files:

- /etc/glance/glance-api.conf - Glance API service configuration file

- /etc/glance/glance-registry.conf - Glance Registry Service configuration file

- Services:

- openstack-glance-api - Glance API Service

- openstack-glance-registry - Glance Registry Service

- Management tools:

- glance - Glance client

- glance-manage - Glance management tool

Day II - Session III⌘

Components⌘

- Nova Client - any application that uses Nova service

- Nova API Service - handles JSON requests

- Nova Cert Service - manages X509 certificates for euca-bundle-image images

- Nova Compute Service - manages instances

- Nova Conductor Service - provides database-access support for compute nodes

- Nova Console Authentication Service - manages token authentication for VNC proxy

- Nova Scheduler Service - decides which compute node receives which instance

- Nova VNC Proxy Service - provides VNC proxy to instances

Flavors⌘

- Flavors are virtual hardware templates

- Flavors' parameters:

- VCPUs - virtual CPUs

- RAM - memory

- Swap - swap space

- Primary disk - root disk size

- Ephemeral disk - secondary ephemeral disk size

- OpenStack supports resizing instances to a different flavor

Instances launching and termination process⌘

- Instance launching process:

- A user sends a request to the Nova API service to launch an instance

- The Nova Scheduler service allocates a compute node for this request

- The Glance Store service streams the Glance image from the backend to the compute node

- The Nova Compute service creates ephemeral volumes

- The Cinder service creates block storage volumes if needed

- The Nova Compute service launches the instance

- Instance termination process:

- The Nova Compute service terminates the instance

- The Nova Compute service removes ephemeral volumes

- The Cinder service leaves the block storage volumes untouched

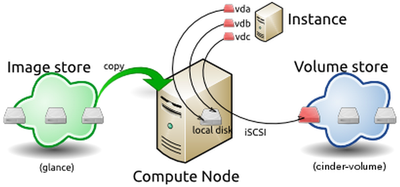

Instances launching and termination process #2⌘

Schedulers awareness⌘

- Nova Scheduler service performs its functions based on a scheduler in use

- Available schedulers:

- Filter scheduler:

- Is this host in the requested availability zone?

- Does it have a sufficient amount of RAM available?

- Is it capable of serving requests (does it pass the filtering process)?

- If so, the host with the best weight is selected

- Chance scheduler:

- A random host that passes the filtering process is selected

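The two schedulers can be contrasted with a short Python sketch. It only loosely imitates Nova: the three checks correspond to the questions above (in the spirit of Nova's AvailabilityZoneFilter, RamFilter and ComputeFilter), RAM overcommit is ignored, and the weighing step simply prefers the most free RAM, as Nova's default RAM weigher does. Host names and capacities are hypothetical; `nova` is used as the availability zone name.

```python
import random
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    availability_zone: str
    free_ram_mb: int
    enabled: bool = True  # False models a disabled or down nova-compute service


def passes_filters(host, ram_mb, az=None):
    """Availability zone, RAM and 'can serve requests' checks, loosely modeled on Nova filters."""
    if az is not None and host.availability_zone != az:
        return False
    if host.free_ram_mb < ram_mb:  # no RAM overcommit in this sketch
        return False
    return host.enabled


def filter_scheduler(hosts, ram_mb, az=None):
    candidates = [h for h in hosts if passes_filters(h, ram_mb, az)]
    if not candidates:
        raise RuntimeError("No valid host was found")
    # Weighing step: like the default RAM weigher, prefer the host with the most free RAM.
    return max(candidates, key=lambda h: h.free_ram_mb)


def chance_scheduler(hosts, ram_mb, az=None, rng=random):
    candidates = [h for h in hosts if passes_filters(h, ram_mb, az)]
    if not candidates:
        raise RuntimeError("No valid host was found")
    return rng.choice(candidates)  # no weighing: any passing host will do


if __name__ == "__main__":
    hosts = [
        Host("compute1", "nova", free_ram_mb=2048),
        Host("compute2", "nova", free_ram_mb=8192),
        Host("compute3", "nova", free_ram_mb=16384, enabled=False),
    ]
    print(filter_scheduler(hosts, ram_mb=4096, az="nova").name)  # compute2
```

Here compute1 fails the RAM check and compute3 fails the "can serve requests" check, so both schedulers can only pick compute2.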

Remote access⌘

- There are three ways to access instances:

- VNC:

- via the VNC proxy

- available at the Horizon dashboard

- SPICE:

- successor of the VNC proxy

- available at the Horizon dashboard (requires additional configuration)

- SSH:

- directly over network

- SSH key injection


Migrations⌘

- Move an instance from one compute node to another

- Available only to users with the admin role

- Migration types:

- Non-live migration - the instance is shut down and moved to another compute node

- Live migration:

- Shared storage-based live migration - leverages shared storage solution

- Block live migration - copies the instance from one compute node to another

- Volume-backed live migration - leverages block storage volumes

- Evacuations

Host Aggregates and Availability Zones⌘

- Host aggregate:

- segregates compute nodes into logical groups

- commonly used to provide different classes of hardware

- can be bound with flavors (via metadata)

- invisible to users, but can be exposed as Availability Zones

- Availability zone:

- commonly used for high availability purposes

- selected by users at instance launch time

- Compute nodes inside a host aggregate should be homogeneous

Configuration & Administration⌘

- Configuration files:

- /etc/nova/nova.conf - main Nova configuration file

- /etc/nova/api-paste.ini - Nova API Service configuration file

- Services:

- openstack-nova-api - Nova API Service

- openstack-nova-cert - Nova Cert Service

- openstack-nova-compute - Nova Compute Service

- openstack-nova-conductor - Nova Conductor Service

- openstack-nova-consoleauth - Nova Console Authentication Service

- openstack-nova-scheduler - Nova Scheduler Service

- openstack-nova-novncproxy - Nova VNC Proxy Service

- libvirtd - libvirt API service

- Management tools:

- nova - Nova client

- nova-manage - Nova management tool

Day II - Session IV & Day III - Session I⌘

Components⌘

- Neutron Client - any application that uses Neutron service

- Neutron Server - Neutron API service

- L2 Agent - interconnects L2 devices

- L3 Agent - interconnects L2 networks

- DHCP Agent - automatically assigns IP addresses to instances

- Metadata Agent - proxies instance metadata requests to the Nova metadata API

- LBaaS Agent - creates and manages LB pools

Network virtualization⌘

- Linux namespaces:

- multiple namespaces on a single Linux host

- the same IP addresses can be used in different namespaces (overlapping IPs)

- virtual devices which exist in one namespace are not visible in other namespaces

- network operations are performed within a particular namespace

- Tunneling mechanisms:

- Flat - no tunneling

- VLAN (Virtual LAN) - IEEE 802.1Q standard

- GRE (Generic Routing Encapsulation) - RFC 2784 standard

- VXLAN (Virtual Extensible LAN) - RFC 7348 standard

- MTU, Jumbo Frames and GRO
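The header formats behind VLAN and VXLAN explain both their scale limits and the MTU concern. The Python sketch below packs an IEEE 802.1Q tag (12-bit VLAN ID, so 4094 usable VLANs) and an RFC 7348 VXLAN header (24-bit VNI, roughly 16 million segments), and computes how much the instance MTU must shrink for VXLAN over IPv4. It assumes an untagged outer Ethernet link and an IPv4 header without options; the function names are hypothetical and GRE is not covered.

```python
import struct

# Header sizes in bytes (IPv4 without options, untagged Ethernet)
ETH_HEADER = 14
IPV4_HEADER = 20
UDP_HEADER = 8
VXLAN_HEADER = 8


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """IEEE 802.1Q tag: TPID 0x8100, then PCP (3 bits) | DEI (1 bit) | VID (12 bits)."""
    if not 1 <= vid <= 4094:  # VIDs 0 and 4095 are reserved
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0..7 and DEI 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", 0x8100, tci)


def vxlan_header(vni: int) -> bytes:
    """RFC 7348 header: flags byte with the I bit (0x08), 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


def vxlan_instance_mtu(physical_mtu: int = 1500) -> int:
    """Largest instance MTU that still fits after VXLAN-over-IPv4 encapsulation."""
    # The instance's whole Ethernet frame becomes the outer packet's payload,
    # so the inner Ethernet header counts against the physical MTU too.
    overhead = IPV4_HEADER + UDP_HEADER + VXLAN_HEADER + ETH_HEADER  # 50 bytes
    return physical_mtu - overhead


if __name__ == "__main__":
    print(vlan_tag(100).hex())        # 81000064
    print(vxlan_header(5000).hex())   # 0800000000138800
    print(vxlan_instance_mtu())       # 1450
    print(vxlan_instance_mtu(9000))   # 8950
```

This 50-byte overhead is why VXLAN tenant networks are commonly given an MTU of 1450 on a standard 1500-byte physical network, or why jumbo frames are enabled on the physical network instead.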

Virtual network devices⌘

- eth - physical NIC

- tap - virtual NIC

- veth pair - pair of directly connected virtual NICs

- qbrXYZ - Linux Bridge

- br-int - OVS integration bridge

- br-ex - OVS external bridge

- br-ethX - OVS internal bridge

- qr-XYZ - OVS port

L2 agent⌘

- Runs on networker and compute nodes

- Interconnects L2 devices

- Backends:

- OVS (Open vSwitch)

- Linux Bridge

- Cisco plugin

- Brocade plugin

- And more

OVS⌘

- OVS (Open vSwitch) - virtual switch / bridge:

- interconnects physical devices with virtual devices

- the most common L2 backend for OpenStack deployments

- support for NetFlow and SPAN/RSPAN

- OpenFlow - protocol used to program OVS flow tables:

- enables defining network flow rules (flow forwarding)

- support for VLAN and GRE tunnels

- OVS vs Linux Bridge

ML2⌘

- ML2 (Modular Layer 2) framework

- Allows Neutron to simultaneously utilize different L2 backends

- Central and unified management of Neutron plugins

- Heterogeneous deployments

- Support for OVS, Linux Bridge and Hyper-V plugins

- Architecture:

- ML2 plugin

- API extension

- Type Manager

- Mechanism Manager

- The ML2 plugin communicates with the L2 agents via RPC

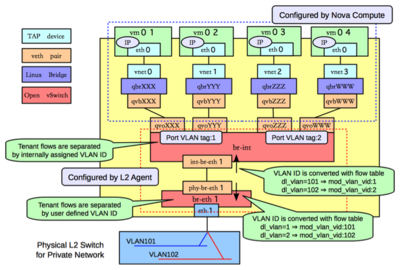

Bringing it all together - Compute⌘

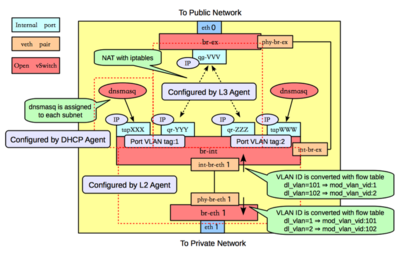

Bringing it all together - Networker⌘

Virtual networks⌘

- Neutron network types

- External:

- usually one per cluster

- usually attached to corporate network

- usually uses flat tunneling mechanism

- Tenant:

- usually many per cluster

- attached to compute nodes in the cluster

- uses flat, vlan, gre or vxlan tunneling mechanisms


- Floating IPs:

- allocated to projects from a pool configured in the external network's subnet

- associated with private IPs from a pool configured in the tenant network's subnet
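Floating IP handling boils down to two bookkeeping steps: allocating an address from the external pool to a project, then mapping it 1:1 to a fixed IP in a tenant subnet. The Python sketch below models just that, using the standard `ipaddress` module. It is a toy, not Neutron's code: it supports a single tenant subnet, the allocation pool range and the tenant subnet `192.168.1.0/24` are hypothetical, and only the external network `10.0.0.0/24` comes from the lab layout.

```python
import ipaddress


class FloatingIPs:
    """Toy model of floating IP allocation and association (1:1 NAT), not Neutron's code."""

    def __init__(self, external_cidr, pool_start, pool_end, tenant_cidr):
        external = ipaddress.IPv4Network(external_cidr)
        first = ipaddress.IPv4Address(pool_start)
        last = ipaddress.IPv4Address(pool_end)
        if first not in external or last not in external or first > last:
            raise ValueError("allocation pool must be a range inside the external subnet")
        self._free = [ipaddress.IPv4Address(i) for i in range(int(first), int(last) + 1)]
        self._tenant = ipaddress.IPv4Network(tenant_cidr)
        self.owner = {}  # floating IP -> project it is allocated to
        self.nat = {}    # floating IP -> fixed (private) IP it is associated with

    def allocate(self, project):
        if not self._free:
            raise RuntimeError("no more floating IPs in the pool")
        fip = self._free.pop(0)
        self.owner[fip] = project
        return fip

    def associate(self, fip, fixed_ip):
        fip, fixed = ipaddress.IPv4Address(fip), ipaddress.IPv4Address(fixed_ip)
        if fip not in self.owner:
            raise ValueError(f"{fip} is not allocated to any project")
        if fixed not in self._tenant:
            raise ValueError(f"{fixed} is not in tenant subnet {self._tenant}")
        self.nat[fip] = fixed  # the L3 agent would turn this into DNAT/SNAT rules


if __name__ == "__main__":
    fips = FloatingIPs("10.0.0.0/24", "10.0.0.100", "10.0.0.110", "192.168.1.0/24")
    fip = fips.allocate("demo")
    fips.associate(fip, "192.168.1.5")
    print(fip, "->", fips.nat[fip])  # 10.0.0.100 -> 192.168.1.5
```

Keeping allocation and association as separate steps mirrors the two bullets above: a project can hold a floating IP without using it, and can later move it to a different instance.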

L3 agent⌘

- Runs on networker node

- Interconnects L2 networks

- Isolated IP stacks

- Forwarding enabled

- Backend: Linux IP forwarding, static routes and iptables (NAT for floating IPs)

DHCP agent⌘

- Runs on networker node

- Automatically assigns IP addresses to instances

- Isolated IP stacks

- Backend: dnsmasq

- Additional options (e.g. DNS servers)

LBaaS agent⌘

- Runs on networker node

- Creates and manages LB pools

- Exposes LB pools at unique VIP (Virtual IP)

- Backends:

- HAProxy

- F5

- AVI

Configuration⌘

- Configuration files:

- /etc/neutron/neutron.conf - main Neutron configuration file

- /etc/neutron/dhcp_agent.ini - DHCP Agent configuration file

- /etc/neutron/l3_agent.ini - L3 Agent configuration file

- /etc/neutron/metadata_agent.ini - Metadata Agent configuration file

- /etc/neutron/lbaas_agent.ini - LBaaS Agent configuration file

- /etc/neutron/plugins/ml2/ml2_conf.ini - ML2 configuration file

- /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini - OVS plugin configuration file

- /etc/neutron/plugin.ini - symbolic link pointing to selected plugin's configuration file

Administration⌘

- Services:

- neutron-server - Neutron Server

- neutron-openvswitch-agent - Neutron OVS Agent

- neutron-dhcp-agent - Neutron DHCP Agent

- neutron-l3-agent - Neutron L3 Agent

- neutron-metadata-agent - Neutron Metadata Agent

- neutron-lbaas-agent - Neutron LBaaS Agent

- openvswitch - OVS service

- Management tools:

- neutron - Neutron client

Day III - Session II⌘

Customization⌘

- The following settings can be easily customized:

- Site colors - theme colors

- Logo - company logo

- HTML title - service title

- Site branding link - company page

- Help URL - custom help page

- The HTTP daemon must be restarted for the changes to take effect

Backends⌘

- Horizon is not responsible for handling user session data

- Instead, it leverages the following backends:

- Local - local memory cache

- Memcached - external distributed memory caching system

- Redis - external key-value store

- MariaDB - external SQL database

- Cookies - browser's cookies

- Cached database: Local + MariaDB

Configuration & Administration⌘

- Configuration files:

- /etc/openstack-dashboard/local_settings - main Horizon configuration file

- /usr/share/openstack-dashboard/openstack_dashboard/templates/_stylesheets.html - stylesheets template

- /usr/share/openstack-dashboard/openstack_dashboard/static/dashboard/img/ - images directory

- /usr/share/openstack-dashboard/openstack_dashboard/static/dashboard/css/ - CSS files directory

- Services:

- openstack-dashboard - Horizon service

- httpd - HTTP daemon

Day III - Session III⌘

Volumes⌘

- Cinder Volumes:

- provide access to block storage devices that can be attached to instances

- can be attached to only one instance at a time

- Volume Backups:

- not available from the Horizon dashboard

- Cinder volumes can be backed up (full + incremental)

- new volumes can be created from backups on demand

- usually stored on a different backend than volumes

- Volume Snapshots:

- available from the Horizon dashboard

- a snapshot can be taken from Cinder volumes (differential backup)

- new volumes can be created from snapshots on demand

- always stored on the same backend as volumes

Components⌘

- Cinder Client - any application that uses Cinder service

- Cinder API Service - handles JSON requests

- Cinder Scheduler Service - decides which host gets each volume

- Cinder Volume Service - manages volumes

- Cinder Backup Service - performs backup and restore operations

Backends⌘

- Cinder is not responsible for physical data placement

- Instead, it leverages the following backends:

- LVM - local LVM volume group

- Ceph - Ceph block / object storage

- NFS - remote NFS share

- GlusterFS - distributed file system (Red Hat Gluster Storage)

- Cinder is capable of dealing with multiple backends

Configuration & Administration⌘

- Configuration files:

- /etc/cinder/cinder.conf - main Cinder configuration file

- Services:

- openstack-cinder-api - Cinder API Service

- openstack-cinder-scheduler - Cinder Scheduler Service

- openstack-cinder-volume - Cinder Volume Service

- openstack-cinder-backup - Cinder Backup Service

- Management tools:

- cinder - Cinder client

- cinder-manage - Cinder management tool

Day III - Session IV⌘

What is object storage?⌘

- Object storage is a storage architecture that manages data as objects

- Other types of storage:

- File storage - manages data as a file hierarchy

- Block storage - manages data as blocks within sectors and tracks

- Object storage characteristics:

- It is accessible only via an API

- It has a flat structure

- Objects' metadata live within the objects

- It ensures data durability thanks to replication

- It implements an eventual consistency model

- It is easily scalable

- Object storage is NOT a filesystem!

Replication⌘

- Replication unit: Swift partition

- Replication factor: 3 (configurable)

- Replication areas:

- zones - e.g. geographically-distributed data centers

- nodes - servers within the same zone

- disks - disks within the same node

- partitions - virtual units within the same disk

- Swift does NOT store 2 or more replicas on the same disk

- Swift is an ideal solution for cloud storage purposes

Structure⌘

- Swift has a flat structure

- Data hierarchy:

- accounts - store information on containers of authorized users

- containers - store information on objects inside the container

- objects - store the actual data

- Virtual directories

Data addressing⌘

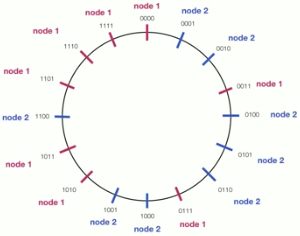

- Swift uses a modified consistent hashing ring for storing and retrieving the data

- Modified consistent hashing ring:

- 128-bit output space (MD5 hashing)

- the output space is represented as a circle

- partitions are placed on the circle at fixed offsets

- the top N bits of the Swift hash are taken, where N is the part power

- the object is stored on a partition addressed by the N bits

- Separate rings for accounts, containers and objects

- The amount of data moved when adding a new node is small

Modified consistent hashing ring⌘

Data placement⌘

- Swift hash function:

hash(path) = md5(path + per-cluster_suffix)

- Data are stored under a name defined by the Swift hash function

- Top N bits from the Swift hash function are taken to locate a partition for the data
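The hash function and partition lookup above translate directly into Python. As far as we understand Swift's ring code, it reads the first 4 bytes of the MD5 digest as a big-endian integer and shifts right by `32 - part_power`; this sketch follows that approach. Swift additionally supports a hash path prefix, which is omitted here to match the formula above. The suffix value and the account, container and object names are hypothetical.

```python
import hashlib
import struct

# Hypothetical value; a real cluster sets swift_hash_path_suffix in /etc/swift/swift.conf
# and must keep it secret and unchanged for the cluster's lifetime.
HASH_PATH_SUFFIX = b"example-suffix"


def swift_hash(account, container=None, obj=None) -> bytes:
    """hash(path) = md5(path + per-cluster suffix) for /account[/container[/object]]."""
    parts = [account] + [p for p in (container, obj) if p is not None]
    path = "/" + "/".join(parts)
    return hashlib.md5(path.encode("utf-8") + HASH_PATH_SUFFIX).digest()


def partition(digest: bytes, part_power: int) -> int:
    """Keep the top part_power bits of the 128-bit hash (read from its first 4 bytes)."""
    return struct.unpack_from(">I", digest)[0] >> (32 - part_power)


if __name__ == "__main__":
    digest = swift_hash("AUTH_demo", "photos", "cat.jpg")
    # The hex digest is the name the data is stored under; the partition locates it.
    print(digest.hex(), partition(digest, part_power=10))
```

Because only the top bits are used, doubling the number of partitions would split each partition cleanly in two, which is the property the fixed-offset ring relies on.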

Metadata placement⌘

- Swift stores objects' metadata within objects

- Metadata are stored in the underlying file's extended attributes

- Example:

- Name: X-Container-Meta-Web-Error

- Value: error.html

Part power⌘

- Part power is the exponent that defines the number of partitions in the Swift cluster:

2^part_power = number_of_partitions

- Defined at ring creation time

- Immutable once chosen

- Rule of thumb: at least 100 partitions per disk

- Can be derived using the following formula:

part_power = ⌈log2(number_of_disks * 100)⌉

- A bigger part power means:

- higher RAM utilization

- faster data lookups
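The part power formula can be evaluated without floating-point rounding by using integer bit lengths. This Python sketch implements `part_power = ⌈log2(number_of_disks * 100)⌉` exactly; the function name and the `partitions_per_disk` parameter are hypothetical conveniences. Since the part power is immutable once chosen, it seems sensible to plug in the number of disks the cluster is expected to grow to, not just today's count.

```python
def part_power(number_of_disks: int, partitions_per_disk: int = 100) -> int:
    """part_power = ceil(log2(number_of_disks * partitions_per_disk)), computed with integers."""
    if number_of_disks < 1 or partitions_per_disk < 1:
        raise ValueError("need at least one disk and one partition per disk")
    target = number_of_disks * partitions_per_disk
    # For target >= 1, (target - 1).bit_length() is the smallest n with 2**n >= target,
    # i.e. ceil(log2(target)) without floating-point rounding issues.
    return (target - 1).bit_length()


if __name__ == "__main__":
    for disks in (6, 100, 1000):
        p = part_power(disks)
        print(disks, p, 2 ** p)
```

For example, 6 disks need at least 600 partitions, giving a part power of 10 (1024 partitions); 100 disks give a part power of 14 (16384 partitions).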

Ring internals⌘

- devs:

- array of devices

- provides information on the device ID, zone, node and disk

{'id': 1, 'zone': 1, 'ip': 'X.Y.Z.O', 'device': 'sdb'}

- replica2part2dev

- array of arrays mapping a replica and partition to the device ID

_replica2part2dev[0][1] = 1

- Swift provides an external interface to both of these structures
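The two ring structures can be combined into a replica lookup. This Python sketch uses a hypothetical three-node ring with 3 replicas and part power 2 (4 partitions); the device addresses are made up from the lab's 172.16.0.0/24 network. The table is stored as arrays of unsigned shorts (`array('H')`), similar to how Swift keeps `replica2part2dev`, and the lookup assumes that `devs[i]["id"] == i`.

```python
from array import array

# Hypothetical three-node ring; devs[i]["id"] == i, as the lookup below assumes.
devs = [
    {"id": 0, "zone": 1, "ip": "172.16.0.11", "device": "sdb"},
    {"id": 1, "zone": 2, "ip": "172.16.0.12", "device": "sdb"},
    {"id": 2, "zone": 3, "ip": "172.16.0.13", "device": "sdb"},
]

# replica2part2dev[replica][partition] -> device id.
# 3 replicas x 4 partitions (part_power = 2); each partition uses three distinct devices.
replica2part2dev = [
    array("H", [0, 1, 2, 0]),
    array("H", [1, 2, 0, 1]),
    array("H", [2, 0, 1, 2]),
]


def get_part_nodes(part, devs=devs, table=replica2part2dev):
    """Return the device dicts holding every replica of one partition."""
    return [devs[replica[part]] for replica in table]


if __name__ == "__main__":
    for node in get_part_nodes(1):
        print(node["zone"], node["ip"], node["device"])
```

Combined with the partition computed from an object's hash, this lookup tells the proxy which devices to contact for each replica.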

Ring builder⌘

- Creates and updates the ring internals

- Partitions placement:

- the ring builder automatically tries to balance the partitions across the cluster

- a cluster admin can manually rebalance the partitions

- Partitions rebalancing:

- only one replica of a given partition can be moved at a time

- other replicas are locked for a number of hours specified by a min_part_hours parameter

- Handoffs in case of failures

Components⌘

- Swift Account Service - provides information on containers inside the account

- Swift Container Service - provides information on objects inside the container

- Swift Object Service - stores, retrieves and deletes objects

- Swift Proxy Service - binds together other Swift services

- Swift Rings - provide mapping between names of entities and their physical location

- Swift Updaters - queue and execute metadata update requests

- Swift Auditors - monitor and recover integrity of accounts, containers and objects

Backends⌘

- Swift is not responsible for physical data placement

- Instead, it leverages the following backends / filesystems:

- XFS - recommended by Rackspace

- EXT4 - popular Linux filesystem

- Any other filesystem with xattr support

- Swift file types:

- account files - SQLite database files

- container files - SQLite database files

- object files - binary files

Configuration⌘

- Configuration files:

- /etc/swift/swift.conf - main Swift configuration file

- /etc/swift/proxy-server.conf - Swift Proxy Service configuration file

- /etc/swift/account-server.conf - Swift Account Service configuration file

- /etc/swift/container-server.conf - Swift Container Service configuration file

- /etc/swift/object-server.conf - Swift Object Service configuration file

- /etc/swift/account.builder - Swift account ring builder's file

- /etc/swift/container.builder - Swift container ring builder's file

- /etc/swift/object.builder - Swift object ring builder's file

- /etc/swift/account.ring.gz - Swift account ring

- /etc/swift/container.ring.gz - Swift container ring

- /etc/swift/object.ring.gz - Swift object ring
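A key part of /etc/swift/swift.conf is the `[swift-hash]` section. Its prefix and suffix values are mixed into every hash used for ring lookups, so they must be identical on all nodes and must not change once data is stored. The Python sketch below reads them with the standard `configparser` module. The sample file contents are placeholders, and the helper names are hypothetical.

```python
import configparser
from typing import List, Tuple

# Placeholder values; real deployments use long random secrets.
SAMPLE_SWIFT_CONF = """
[swift-hash]
swift_hash_path_prefix = changeme-prefix
swift_hash_path_suffix = changeme-suffix

[storage-policy:0]
name = Policy-0
default = yes
"""


def read_hash_settings(text: str) -> Tuple[str, str]:
    """Return (prefix, suffix) from the [swift-hash] section of swift.conf."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser["swift-hash"]
    prefix = section.get("swift_hash_path_prefix", "")
    suffix = section.get("swift_hash_path_suffix", "")
    if not (prefix or suffix):
        raise ValueError("set swift_hash_path_prefix and/or swift_hash_path_suffix")
    return prefix, suffix


def storage_policies(text: str) -> List[str]:
    """Return the names of all [storage-policy:N] sections."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return [parser[s]["name"] for s in parser.sections() if s.startswith("storage-policy:")]


if __name__ == "__main__":
    print(read_hash_settings(SAMPLE_SWIFT_CONF))
    print(storage_policies(SAMPLE_SWIFT_CONF))
```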

Administration⌘

- Services:

- openstack-swift-proxy - Swift Proxy Service

- openstack-swift-account - Swift Account Service

- openstack-swift-container - Swift Container Service

- openstack-swift-object - Swift Object Service

- Management tools:

- swift - Swift client

- swift-ring-builder - Swift ring builder tool

Day IV - Session I⌘

Use Cases⌘

- Orchestration - automatic creation of OpenStack resources based on predefined templates:

- users

- tenants

- networks

- subnets

- routers

- floating IPs

- security groups

- key pairs

- instances

- volumes

- High Availability

- Nested Stacks

Components⌘

- Heat Client - any application that uses the Heat service

- Heat API Service - handles JSON requests

- Heat CloudFormation API Service - CloudFormation-compatible API for exposing Heat functionality via REST

- Heat CloudWatch API Service - CloudWatch-like API for exposing Heat functionality via REST

- Heat Engine Service - performs actual orchestration work

Templates⌘

- Templates - used to deploy Heat stacks

- Template types:

- HOT (Heat Orchestration Template):

- KeyName (required)

- InstanceType (required)

- ImageId (required)

- CFN (AWS CloudFormation Template):

- KeyName (required)

- InstanceType (optional)

- DBName (optional)

- DBUserName (optional)

- DBPassword (optional)

- DBRootPassword (optional)

- LinuxDistribution (optional)

"Hello World" template⌘

heat_template_version: 2015-04-30

description: Simple template to deploy a single compute instance

resources:
  my_instance:
    type: OS::Nova::Server
    properties:
      key_name: tytus
      image: CirrOS
      flavor: m1.small
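The same template can also be generated from Python as JSON. Heat parses templates with a loader that accepts both JSON and YAML. In this sketch the key pair name becomes a template parameter, read through the HOT `get_param` function. This makes it a required input at stack creation, like KeyName in the template types above. The function name is hypothetical, and the version string should be matched to the Heat release in use.

```python
import json


def hello_world_template(image: str = "CirrOS", flavor: str = "m1.small") -> dict:
    """Build the single-instance HOT template as a Python dict."""
    return {
        # Kilo-era HOT version string; use one your Heat release supports.
        "heat_template_version": "2015-04-30",
        "description": "Simple template to deploy a single compute instance",
        "parameters": {
            # A parameter without a default must be supplied by the user.
            "key_name": {"type": "string", "description": "Name of an existing key pair"},
        },
        "resources": {
            "my_instance": {
                "type": "OS::Nova::Server",
                "properties": {
                    "key_name": {"get_param": "key_name"},
                    "image": image,
                    "flavor": flavor,
                },
            },
        },
    }


if __name__ == "__main__":
    # Save this output to a file (e.g. hello.json) and pass it to Heat.
    print(json.dumps(hello_world_template(), indent=2))
```

With the `heat` client, a command along the lines of `heat stack-create -f hello.json -P key_name=tytus hello_world` should launch the stack.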

Configuration & Administration⌘

- Configuration files:

- /etc/heat/heat.conf - main Heat configuration file

- /etc/heat/templates/* - Heat templates

- Services:

- openstack-heat-api - Heat API Service

- openstack-heat-api-cfn - Heat CloudFormation API Service

- openstack-heat-api-cloudwatch - Heat CloudWatch API Service

- openstack-heat-engine - Heat Engine Service

- Management tools:

- heat - Heat client

- heat-manage - Heat management tool

Day IV - Session II⌘

Use cases⌘

- Billing - pay-as-you-go approach

- Metering - collecting information

- Rating - analyzing the information

- Billing - turning the rating reports into charges for the users

- Autoscaling - automatically adding or removing instances as load changes

- Planning - analyzing trend lines
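The metering, rating and billing chain can be sketched in a few lines of Python. Ceilometer covers the metering step; the rating and billing functions below are hypothetical illustrations. The meter names and prices are made up, and `Decimal` is used so that money is not rounded by binary floating point.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical price list (currency units per unit of each meter).
RATES = {
    "instance_hours": Decimal("0.05"),
    "storage_gb_hours": Decimal("0.0002"),
}

# Hypothetical metering output.
SAMPLES = [
    {"tenant": "demo", "meter": "instance_hours", "volume": 24},
    {"tenant": "demo", "meter": "storage_gb_hours", "volume": 1000},
    {"tenant": "ops", "meter": "instance_hours", "volume": 10},
]


def rate(samples, rates):
    """Rating: multiply each metered volume by its price, summed per tenant."""
    charges = defaultdict(Decimal)
    for sample in samples:
        charges[sample["tenant"]] += rates[sample["meter"]] * sample["volume"]
    return dict(charges)


def bill(charges):
    """Billing: round each tenant's charge to cents for the invoice."""
    return {tenant: amount.quantize(Decimal("0.01")) for tenant, amount in charges.items()}


if __name__ == "__main__":
    print(bill(rate(SAMPLES, RATES)))
```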

Basic concepts⌘

- Meters - measure a particular aspect of resource usage

- Samples - individual datapoints associated with a particular meter

- Statistics - sets of samples aggregated over a time period

- Pipelines - sets of transformers applied to meters

- Alarms - sets of rules defining a monitor
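Statistics are aggregates (count, min, max, sum, average) computed over the samples in each time period. Here is a plain-Python sketch that uses a hypothetical `Sample` type rather than Ceilometer's own classes:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    """One datapoint of a meter (timestamp in seconds from an arbitrary epoch)."""
    timestamp: int
    volume: float


def statistics(samples: List[Sample], period: int) -> Dict[int, Dict[str, float]]:
    """Aggregate samples into fixed `period`-second buckets keyed by bucket start."""
    buckets: Dict[int, List[float]] = {}
    for s in samples:
        start = s.timestamp // period * period
        buckets.setdefault(start, []).append(s.volume)
    return {
        start: {
            "count": len(vols),
            "min": min(vols),
            "max": max(vols),
            "sum": sum(vols),
            "avg": sum(vols) / len(vols),
        }
        for start, vols in sorted(buckets.items())
    }


if __name__ == "__main__":
    cpu_util = [Sample(0, 10.0), Sample(300, 30.0), Sample(600, 50.0), Sample(900, 70.0)]
    for start, stats in statistics(cpu_util, period=600).items():
        print(start, stats)
```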

Components⌘

- Ceilometer Client - any software that uses Ceilometer service

- Ceilometer API Service - handles JSON requests

- Polling agents - poll OpenStack services and build meters

- Collector Service - gathers and records events and metering data

- Alarm Evaluation Agent - determines when to fire alarms

- Alarm Notification Agent - initiates alarm actions
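An alarm evaluator compares recent statistics against a threshold. The sketch below borrows the parameter names of Ceilometer threshold alarms: `threshold`, `comparison_operator` with values lt/le/eq/ne/ge/gt, and `evaluation_periods`. The evaluation logic is a simplified assumption, not Ceilometer's exact implementation.

```python
import operator
from typing import Sequence

# Comparison operator names as used by Ceilometer threshold alarms.
COMPARISONS = {
    "lt": operator.lt, "le": operator.le, "eq": operator.eq,
    "ne": operator.ne, "ge": operator.ge, "gt": operator.gt,
}


def evaluate(statistic_values: Sequence[float], threshold: float,
             comparison_operator: str, evaluation_periods: int,
             current_state: str = "insufficient data") -> str:
    """Simplified threshold evaluation over the most recent periods."""
    if evaluation_periods < 1:
        raise ValueError("evaluation_periods must be at least 1")
    if len(statistic_values) < evaluation_periods:
        return "insufficient data"
    recent = statistic_values[-evaluation_periods:]
    compare = COMPARISONS[comparison_operator]
    breaches = [compare(value, threshold) for value in recent]
    if all(breaches):
        return "alarm"
    if not any(breaches):
        return "ok"
    # Mixed results: keep the previous state instead of flapping.
    return current_state


if __name__ == "__main__":
    print(evaluate([50, 85, 90], threshold=80, comparison_operator="gt", evaluation_periods=2))
```

Once the state changes, the notification agent runs the configured alarm actions.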

Polling agents⌘

- Central agent - polls the APIs of other OpenStack services (and hardware resources over SNMP):

- Keystone meters

- Swift meters

- Cinder meters

- Glance meters

- Neutron meters

- Hardware resources meters

- Energy consumption meters

- Compute agent - polls the hypervisor on each compute node and sends meters via AMQP:

- Nova meters

- IPMI agent - sends meters via AMQP

Backends⌘

- Ceilometer is not responsible for storing data

- Instead, it leverages the following backends:

- File - local file

- Elasticsearch - search server

- MongoDB - NoSQL database

- MySQL - relational database

- PostgreSQL - relational database

- HBase - NoSQL database

- DB2 - relational database

- HTTP - external HTTP target

Configuration & Administration⌘

- Configuration files:

- /etc/ceilometer/ceilometer.conf - main Ceilometer configuration file

- /etc/ceilometer/pipeline.yaml - pipeline configuration file

- Services:

- openstack-ceilometer-api - Ceilometer API Service

- openstack-ceilometer-central - Ceilometer Central Agent

- openstack-ceilometer-compute - Ceilometer Compute Agent

- openstack-ceilometer-collector - Ceilometer Collector Service

- openstack-ceilometer-alarm-notifier - Ceilometer Alarm Notification Agent

- openstack-ceilometer-alarm-evaluator - Ceilometer Alarm Evaluation Agent

- openstack-ceilometer-notification - Ceilometer Notifications Listener

- mongod - MongoDB service

- Management tools:

- ceilometer - Ceilometer client
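Transformers are configured in /etc/ceilometer/pipeline.yaml. A commonly used one is `rate_of_change`, which turns the cumulative `cpu` meter (CPU time in nanoseconds) into a `cpu_util` percentage. The arithmetic is sketched below in Python. The function name and argument layout are hypothetical. The scale factor 100 / (10**9 * number of vCPUs) is assumed to match the default pipeline of this Ceilometer era, so check it against the local pipeline.yaml.

```python
def cpu_util(prev, curr, cpu_number=1):
    """Rate-of-change transform: cumulative CPU time (ns) -> utilization (%).

    `prev` and `curr` are (timestamp_seconds, cumulative_cpu_ns) pairs from
    two consecutive samples of the same instance.
    """
    (t0, v0), (t1, v1) = prev, curr
    elapsed = t1 - t0
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    ns_per_second = (v1 - v0) / elapsed
    # Assumed scale: 100 / (10**9 * number_of_vcpus)
    return ns_per_second * 100.0 / (10**9 * (cpu_number or 1))


if __name__ == "__main__":
    # 300 s of CPU time consumed during 600 s of wall-clock time on one vCPU.
    print(cpu_util((0, 0), (600, 300_000_000_000)))
```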

Day IV - Session III⌘

Scalability and resilience⌘

- Compute resources tend to be exhausted first

- The OpenStack cluster scales out (horizontally)

- Adding a compute node is a simple process

- Compute nodes are the components that fail most often

- Compute nodes run on commodity hardware

- Decommissioning a compute node is a simple process

Resources monitoring and growth planning⌘

- Primary resources:

- vCPUs - total number of vCPUs used by instances

- RAM - total amount of RAM used by instances

- ephemeral storage - total amount of ephemeral (non-persistent) disk space used by instances

- Growth planning:

- resource utilization graphs - show resource utilization over time

- resource utilization trend lines - show expected resource utilization in the future

- operational baselines - show how long it takes to add a new compute node
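Trend lines and operational baselines can be combined into a simple planning rule. Fit a straight line to past usage, find the day it reaches capacity, and subtract the time it takes to add a compute node. Here is a least-squares sketch in Python; the measurements and function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def exhaustion_day(days, used, capacity) -> Optional[float]:
    """Day on which the trend line reaches `capacity`, or None if usage is not growing."""
    intercept, slope = linear_fit(days, used)
    if slope <= 0:
        return None
    return (capacity - intercept) / slope


def expansion_deadline(days, used, capacity, lead_time_days) -> Optional[float]:
    """Latest day to start adding a compute node, given the operational baseline."""
    day = exhaustion_day(days, used, capacity)
    return None if day is None else day - lead_time_days


if __name__ == "__main__":
    days = [0, 7, 14, 21]              # hypothetical weekly measurements
    vcpus_used = [100, 114, 128, 142]
    print(exhaustion_day(days, vcpus_used, capacity=200))                # 50.0
    print(expansion_deadline(days, vcpus_used, 200, lead_time_days=14))  # 36.0
```

The same calculation applies to RAM and ephemeral storage. Whichever resource gives the earliest deadline drives the decision.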

Procedure⌘

- RHEL OSP Installer - provision the compute node

- Packstack - add the new node to the list of compute nodes and re-run the Packstack installation process

- Manual Deployment:

- Update the iptables rules on the Controller node

- Install Neutron and Nova services on the Compute node

- Upload Neutron and Nova services configuration files to the Compute node

- Reconfigure Neutron and Nova services configuration files on the Compute node

- Configure and start supporting services on the Compute node

- Start Neutron and Nova services on the Compute node

Day IV - Session IV⌘

Certification and Surveys⌘

- Congratulations on completing the course!

- Official Red Hat certification authority:

- Visit http://www.nobleprog.com for other courses

- Surveys: http://www.nobleprog.pl/te