Nodejs: Difference between revisions

Lsokolowski1 (talk | contribs) |


{{Can I use your material}}

== Nodejs Intro - Design and architecture ==
* Introduction
* Installation and requirements
* Reactor Pattern (The event Loop)

== Introduction ==
Definition
* A '''platform''' built on Chrome’s '''JavaScript runtime''' for easily building '''fast''', '''scalable''' network applications
** Perfect for data-intensive '''real-time''' applications that run across '''distributed''' devices

=== Intro Con't ===
* Started in '''2009'''
* Very popular project on [https://github.com/nodejs/node GitHub]
* Good following in the [https://groups.google.com/forum/#!forum/nodejs Google group]
* '''More than 2.7 million''' community modules published in '''npm''' (the package manager)

== Installation ==
* '''Official packages''' for all the major platforms
** <small>https://nodejs.org/en/download/</small>
* Package '''managers''' (apt, rpm, brew, etc.)
* '''nvm''' - allows keeping '''different versions''' installed side by side
** Linux - <small>https://github.com/nvm-sh/nvm</small>
** Windows - <small>https://github.com/coreybutler/nvm-windows</small>

=== Update (Linux) ===
<pre>
npm cache clean -f
…
</pre>

== Requirements ==
"Nice to know" '''JavaScript concepts'''
* Lexical Structure, Expressions
* Strict Mode, ECMAScript 6, 2016, 2017

=== Requirements Con't ===
'''Asynchronous programming''' as a fundamental part of Node.js
* Asynchronous programming and callbacks
* Closures, The Event Loop

== Node.js Philosophy ==
* Small Core
* Small modules
* Simplicity

=== Small Core ===
* The core provides a small set of functionality and leaves the rest to the so-called '''userland'''
** ''Userspace'', or the ''ecosystem'' of modules living outside the core
* It has a positive cultural impact on the evolution of the entire ecosystem

=== Small Modules ===
* One of the most evangelized principles is to design small modules
** Small in terms of both code size and scope (the principle has its roots in the Unix philosophy)
** Applications are composed of a high number of small, well-focused dependencies

=== Small Surface Area ===
Node.js modules usually expose a minimal set of functionality
* Increased usability of the API (both within and across projects)
* Node.js modules are created to be used rather than extended

=== Simplicity ===
Simplicity and pragmatism
* Designing simple, rather than perfect and feature-full, software is a good practice
* '''"Simplicity is the ultimate sophistication"''' – ''Leonardo da Vinci''

== Asynchronous and Evented | == Asynchronous and Evented == | ||

* Browser side | * Browser side | ||

* Server side | * Server side | ||

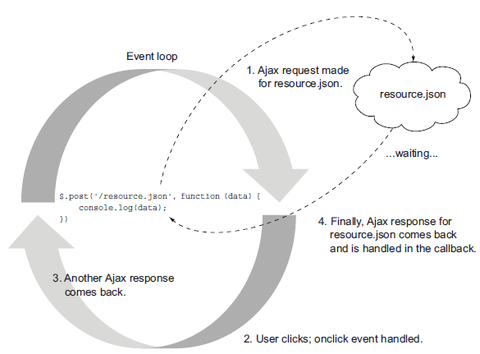

=== Browser Side | === Browser Side === | ||

[[File:AsyncAndEventBrowser.png|480px]] | [[File:AsyncAndEventBrowser.png|480px]] | ||

* I/O that happens in the browser is outside of the event loop (outside the main script execution) | * I/O that happens in the browser is outside of the event loop (outside the main script execution) | ||

| Line 114: | Line 115: | ||

* Event is handled by a function (the "callback" function) | * Event is handled by a function (the "callback" function) | ||

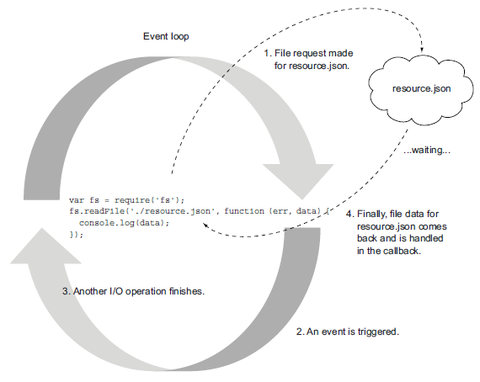

=== Server Side ===
Server side
* <syntaxhighlight lang="php" inline>$result = mysql_query('SELECT * FROM myTable');</syntaxhighlight>
…
</syntaxhighlight>

=== Server Side Con't ===
[[File:AsyncAndEventServer.png|480px]]
* An anonymous function is called (the “callback”)
** It receives the error that occurred, if any, and the data (the file contents)
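A minimal sketch of the non-blocking, callback-based read described above, using the core <code>fs.readFile()</code> API. The temporary file and its name are assumptions, created by the sketch itself so that it runs standalone.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical input file, created here only so the sketch is self-contained
const file = path.join(os.tmpdir(), 'nodejs-wiki-example.txt');
fs.writeFileSync(file, 'file data');

const order = [];

// readFile() only starts the read and returns immediately; the anonymous
// callback is invoked later by the event loop with (err, data)
fs.readFile(file, 'utf8', (err, data) => {
  if (err) {
    console.error('Read failed:', err.message);
    return;
  }
  order.push('callback: ' + data);
  console.log(order.join('\n'));
});

// Recorded before the callback runs, because the read is non-blocking
order.push('after readFile()');
</syntaxhighlight>

The line pushed after <code>fs.readFile()</code> is recorded first; the callback runs only once the read completes and the event loop picks up the result.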

== DIRTy Applications ==
Designed for '''Data Intensive Real Time''' (DIRT) applications
* Very lightweight on I/O
* Designed to be responsive (like the browser)

== Reactor Pattern (The event Loop) ==
The reactor pattern is at the heart of the asynchronous nature of Node.js
* Main concepts
** Non-blocking I/O

=== Reactor Pattern Con't | === Reactor Pattern Con't === | ||

I/O is slow - Not expensive in terms of CPU, but it adds a delay | I/O is slow - Not expensive in terms of CPU, but it adds a delay | ||

* I/O is the slowest among the fundamental operations | * I/O is the slowest among the fundamental operations | ||

| Line 156: | Line 157: | ||

** Disk and network varies from MB/s to, optimistically, GB/s | ** Disk and network varies from MB/s to, optimistically, GB/s | ||

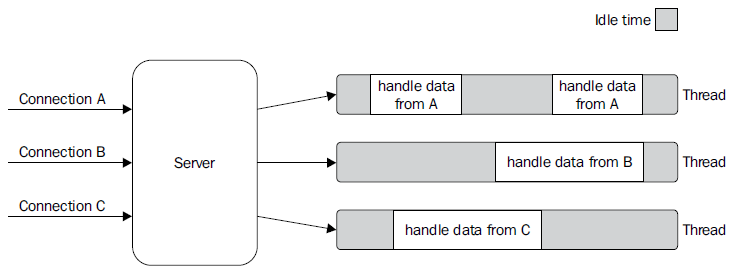

=== Blocking I/O | === Blocking I/O === | ||

* Web Servers that implement blocking I/O will handle concurrency | * Web Servers that implement blocking I/O will handle concurrency | ||

** by creating a thread or a process (Taken from a pool) for each concurrent connection that needs to be handled | ** by creating a thread or a process (Taken from a pool) for each concurrent connection that needs to be handled | ||

[[File:BlockingIO.png]] | [[File:BlockingIO.png]] | ||

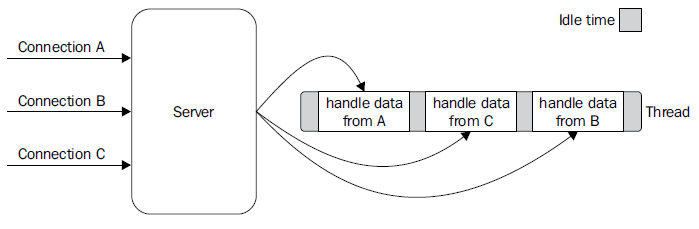

=== Non Blocking I/O ===
Event Demultiplexing
[[File:NonBlockingIO.png]]

=== Non Blocking I/O Con't ===
Another mechanism to access resources (non-blocking I/O)
* In this operating mode the system call returns immediately
…
</syntaxhighlight>
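A minimal JavaScript simulation of busy-waiting on non-blocking resources, to make the wasted polling visible. <code>FakeNonBlockingResource</code> and the <code>EAGAIN</code> symbol are hypothetical stand-ins for a real non-blocking descriptor and its "no data yet" result; readiness is simulated with a countdown.

<syntaxhighlight lang="javascript">
// Hypothetical sentinel meaning "no data available yet" (like EAGAIN)
const EAGAIN = Symbol('EAGAIN');

// Hypothetical resource: read() never blocks; it returns EAGAIN until
// `readyAfter` polls have been made, then returns its data
class FakeNonBlockingResource {
  constructor(name, readyAfter) {
    this.name = name;
    this.remaining = readyAfter;
  }

  read() {
    if (this.remaining > 0) {
      this.remaining -= 1;
      return EAGAIN;
    }
    return `data from ${this.name}`;
  }
}

// Busy-waiting: poll every pending resource in a loop until all have data
function busyWait(resources) {
  const results = [];
  let wastedPolls = 0;
  const pending = new Set(resources);
  while (pending.size > 0) {
    for (const resource of pending) {
      const data = resource.read();
      if (data === EAGAIN) {
        wastedPolls += 1; // CPU time spent only asking "is it ready yet?"
        continue;
      }
      results.push(data);
      pending.delete(resource); // deleting the current entry during for...of is safe
    }
  }
  return { results, wastedPolls };
}

const outcome = busyWait([
  new FakeNonBlockingResource('socketA', 3),
  new FakeNonBlockingResource('fileB', 1),
]);
console.log(outcome);
</syntaxhighlight>

Every <code>EAGAIN</code> counted in <code>wastedPolls</code> is CPU work that produced nothing, which is why busy-waiting is replaced by event demultiplexing.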

=== Event Demultiplexing ===
* Busy-waiting is not an ideal technique
* « Synchronous event demultiplexer » or « event notification interface » technique
** and block until new events are available to process

=== Event Demultiplexing Con't ===
An '''algorithm''' that uses a '''generic synchronous event demultiplexer'''
* Reads from two different resources
…
</syntaxhighlight>

=== Event Demultiplexing Expl | === Event Demultiplexing Expl === | ||

# '''Resources''' are added to a '''data structure''' | # '''Resources''' are added to a '''data structure''' | ||

#* '''Associating''' each with a '''specific''' operation (read) | #* '''Associating''' each with a '''specific''' operation (read) | ||

| Line 226: | Line 227: | ||

#* This is called the '''event loop''' | #* This is called the '''event loop''' | ||

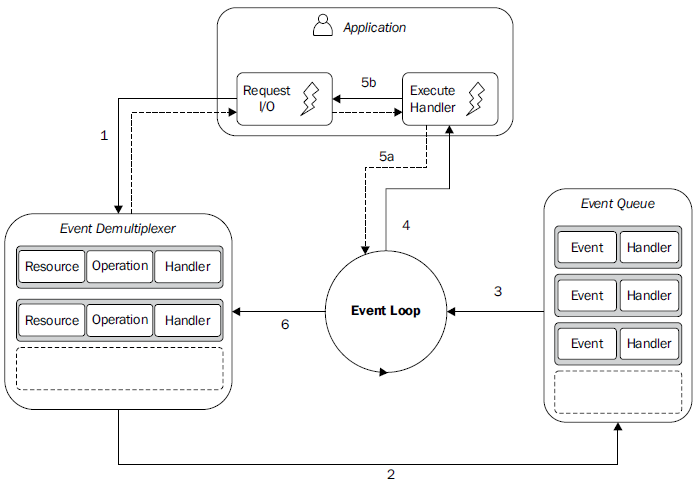
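The steps above can be sketched in JavaScript as follows. <code>ScriptedDemultiplexer</code> is hypothetical: its <code>watch()</code> stands in for a blocking call such as epoll on Linux, but here it simply returns the next scripted batch of events. As a simplification, each event carries its data instead of the loop reading it from the resource.

<syntaxhighlight lang="javascript">
// Hypothetical demultiplexer: each watch() call returns the next scripted
// batch of events, keeping only events for resources still being watched
class ScriptedDemultiplexer {
  constructor(batches) {
    this.batches = batches;
  }

  watch(watchedList) {
    if (this.batches.length === 0) {
      throw new Error('no more scripted events'); // a real one would block
    }
    const batch = this.batches.shift();
    return batch.filter((event) =>
      watchedList.some((entry) => entry.resource === event.resource));
  }
}

// Generic loop: block on the demultiplexer, then process each returned event
function runEventLoop(demultiplexer, watchedList) {
  const consumed = [];
  while (watchedList.length > 0) {
    const events = demultiplexer.watch(watchedList);
    for (const event of events) {
      if (event.data === null) {
        // resource closed: stop watching it
        const index = watchedList.findIndex((entry) => entry.resource === event.resource);
        watchedList.splice(index, 1);
      } else {
        consumed.push(`${event.resource}: ${event.data}`); // "consume" the data
      }
    }
  }
  return consumed;
}

// Step 1: resources are added to a data structure, each with its operation
const watchedList = [
  { resource: 'socketA', operation: 'read' },
  { resource: 'fileB', operation: 'read' },
];
const demux = new ScriptedDemultiplexer([
  [{ resource: 'fileB', data: 'b1' }],
  [{ resource: 'socketA', data: 'a1' }, { resource: 'fileB', data: null }],
  [{ resource: 'socketA', data: null }],
]);
const loopOutput = runEventLoop(demux, watchedList);
console.log(loopOutput);
</syntaxhighlight>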

=== Reactor Pattern Expl ===
Reactor Pattern
* A specialization of the previous algorithm
** It will be invoked as soon as an event is produced and processed by the event loop
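A toy reactor, to illustrate associating a handler with each I/O request. <code>MiniReactor</code>, <code>watch()</code> and <code>emitCompleted()</code> are hypothetical names; completions are simulated with an in-memory queue, not produced by libuv or the operating system.

<syntaxhighlight lang="javascript">
// Minimal reactor sketch: a handler is registered together with each request,
// and the loop invokes it when the corresponding event is processed
class MiniReactor {
  constructor() {
    this.handlers = new Map(); // resource -> handler (callback)
    this.queue = []; // completed operations, as the demultiplexer would report
  }

  // Application side: submit a request together with its handler
  watch(resource, handler) {
    this.handlers.set(resource, handler);
  }

  // Simulated demultiplexer side: an I/O operation has completed
  emitCompleted(resource, data) {
    this.queue.push({ resource, data });
  }

  // Event loop: take events in order and dispatch each one to its handler
  run() {
    while (this.queue.length > 0) {
      const { resource, data } = this.queue.shift();
      const handler = this.handlers.get(resource);
      if (handler) {
        this.handlers.delete(resource); // one-shot request
        handler(data);
      }
    }
  }
}

const log = [];
const reactor = new MiniReactor();
reactor.watch('fileA', (data) => log.push(`fileA handler got ${data}`));
reactor.watch('socketB', (data) => {
  log.push(`socketB handler got ${data}`);
  // a handler may submit new requests; the loop processes them too
  reactor.watch('fileC', (more) => log.push(`fileC handler got ${more}`));
  reactor.emitCompleted('fileC', 'c');
});
reactor.emitCompleted('socketB', 'b');
reactor.emitCompleted('fileA', 'a');
reactor.run();
console.log(log);
</syntaxhighlight>

The application never waits for a result: it hands over a handler, and control returns to it only when the loop dispatches the event.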

=== Reactor Pattern Flow ===
[[File:ReactorPattern.png]]

=== Reactor Pattern Flow Expl ===
<pre>
At the heart of Node.js is that pattern:
…
</pre>

=== Reactor Pattern Flow Expl Con't | === Reactor Pattern Flow Expl Con't === | ||

'''AP''' = ''Application'', '''ED''' = ''Event Demultiplexer'', '''EQ''' = ''Event Queue'', '''EL''' = ''Event Loop'' | '''AP''' = ''Application'', '''ED''' = ''Event Demultiplexer'', '''EQ''' = ''Event Queue'', '''EL''' = ''Event Loop'' | ||

# The '''AP''' submits a request (new I/O operation) to the '''ED''' | # The '''AP''' submits a request (new I/O operation) to the '''ED''' | ||

| Line 259: | Line 260: | ||

#* the loop will block again on the '''ED''' which will then trigger '''another cycle''' | #* the loop will block again on the '''ED''' which will then trigger '''another cycle''' | ||

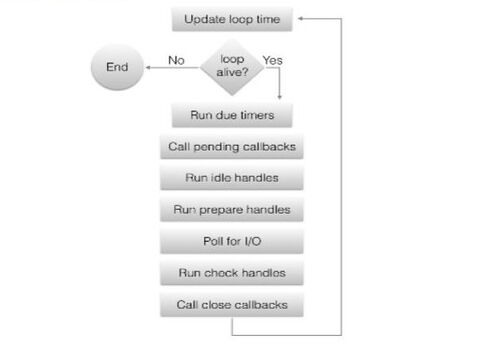

=== Libuv - I/O engine of Nodejs ===
* Running Node.js across and within the different operating systems requires an abstraction level for the Event Demultiplexer
* The Node.js core team created the "libuv" library (a C library) with the objectives
* <small>http://nikhilm.github.io/uvbook/</small>

=== Libuv - the event loop | === Libuv - the event loop === | ||

[[File:Libuv.jpg|480px]] | [[File:Libuv.jpg|480px]] | ||

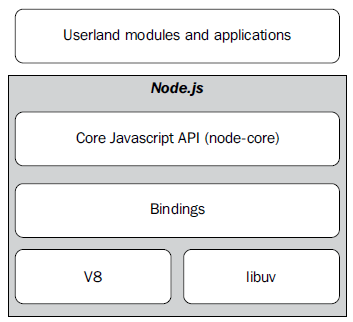

=== Nodejs - the whole platform ===
To build the platform we still need:
* A set of bindings responsible for wrapping and exposing libuv and other low-level
* A core JavaScript library (called node-core) that implements the high-level Node.js API

=== Nodejs Platform Con't ===
[[File:NodejsPlatform.png]]

== The Callback Pattern ==
* Handlers of the Reactor Pattern
* Synchronous CPS (Continuation-Passing Style)
* Callback Conventions

=== Handlers of the Reactor Pattern ===
* Callbacks are the handlers of the reactor pattern
** They are part of what gives Node.js its distinctive programming style
** With closures we preserve the context in which a function was created, no matter when or where its callback is invoked
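A small sketch of closures preserving context across asynchronous calls. <code>makeGreeter()</code> and <code>greet()</code> are hypothetical names; <code>setTimeout()</code> stands in for any asynchronous operation.

<syntaxhighlight lang="javascript">
// Each makeGreeter() call creates a new scope; the callbacks created inside it
// keep access to `name` and the call counter even when the event loop
// invokes them later
function makeGreeter(name) {
  let calls = 0; // private state kept alive by the closure
  return function greet(done) {
    calls += 1;
    const callNumber = calls; // captured for this particular invocation
    setTimeout(() => done(`Hello ${name}, call #${callNumber}`), 10);
  };
}

const greetAda = makeGreeter('Ada');
const greetBob = makeGreeter('Bob');
const messages = [];
const collect = (msg) => {
  messages.push(msg);
  console.log(msg);
};

greetAda(collect);
greetBob(collect);
greetAda(collect);
</syntaxhighlight>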

=== Synchronous CPS | === Synchronous CPS === | ||

* The add() function is a synchronous CPS function it returns a value only when the callback completes its execution | * The add() function is a synchronous CPS function it returns a value only when the callback completes its execution | ||

<syntaxhighlight lang="javascript"> | <syntaxhighlight lang="javascript"> | ||

| Line 311: | Line 312: | ||

</pre> | </pre> | ||

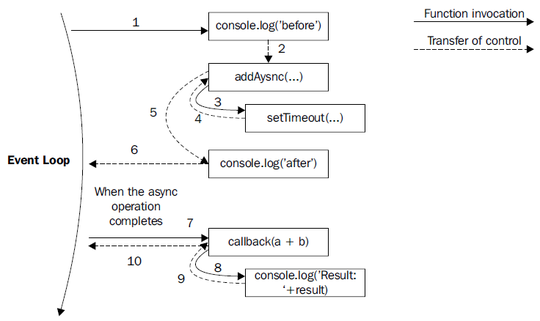
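A minimal sketch of a synchronous CPS <code>add()</code>; the slide's own code is not shown here, so this is an illustration rather than the original listing.

<syntaxhighlight lang="javascript">
// Synchronous CPS: the result is passed to a callback (the continuation)
// instead of being returned; add() returns only after the callback has run
function add(a, b, callback) {
  callback(a + b);
}

const trace = [];
trace.push('before');
add(1, 2, (result) => trace.push(`Result: ${result}`));
trace.push('after');
console.log(trace.join('\n'));
// before
// Result: 3
// after
</syntaxhighlight>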

=== Asynchronous CPS ===
* The use of setTimeout() simulates an asynchronous invocation of the callback
<syntaxhighlight lang="javascript">
…
</pre>
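A sketch of the asynchronous counterpart; <code>additionAsync()</code> is a hypothetical name, and <code>setTimeout()</code> simulates the asynchronous operation as described above.

<syntaxhighlight lang="javascript">
// Asynchronous CPS: additionAsync() returns before its callback is invoked
function additionAsync(a, b, callback) {
  setTimeout(() => callback(a + b), 100);
}

const steps = [];
steps.push('before');
additionAsync(1, 2, (result) => {
  steps.push(`Result: ${result}`);
  console.log(steps.join('\n'));
});
steps.push('after');
// When the callback finally runs, it prints: before, after, Result: 3
</syntaxhighlight>

Compared with the synchronous version, "after" is now recorded before the result: the callback starts from the event loop on a fresh stack.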

=== Asynchronous CPS and event loop ===
[[File:AsyncCPS.png|540px]]

=== Asynchronous CPS Con't ===
* When the asynchronous operation completes, execution resumes starting from the callback
* The execution will start from the Event Loop, so it will have a fresh stack
** A new event from the queue can be processed

=== Unpredictable Functions, async read ===
Example
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

=== Unpredictable Functions, wrapper ===
Example
<syntaxhighlight lang="javascript">
…
* All the listeners will be invoked at once when the read operation completes and the data is available

=== Unpredictable Functions, main ===
Example
<syntaxhighlight lang="javascript">
…
Result >> only "First call data: some data" is printed

=== Unpredictable Functions, Expl ===
Explanation - behavior
* Reader2 is created in a cycle of the event loop in which the cache for the requested file already exists.
* The listeners are registered only after reader2 has been created, so they will never be invoked.
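The scenario above, reconstructed as a runnable sketch. <code>inconsistentRead()</code>, <code>createFileReader()</code> and the temporary file are illustrative names and assumptions, not necessarily the slide's exact code.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical data file, created so the sketch runs standalone
const dataFile = path.join(os.tmpdir(), 'nodejs-wiki-zalgo.txt');
fs.writeFileSync(dataFile, 'some data');

const cache = new Map();

// Unpredictable: synchronous on a cache hit, asynchronous on a cache miss
function inconsistentRead(filename, callback) {
  if (cache.has(filename)) {
    callback(cache.get(filename)); // invoked synchronously!
  } else {
    fs.readFile(filename, 'utf8', (err, data) => {
      if (err) {
        console.error(err.message);
        return;
      }
      cache.set(filename, data);
      callback(data); // invoked asynchronously
    });
  }
}

// Wrapper: notifies every registered listener when the data is ready
function createFileReader(filename) {
  const listeners = [];
  inconsistentRead(filename, (value) => {
    listeners.forEach((listener) => listener(value));
  });
  return { onDataReady: (listener) => listeners.push(listener) };
}

const output = [];
const report = (line) => {
  output.push(line);
  console.log(line);
};

const reader1 = createFileReader(dataFile);
reader1.onDataReady((data) => {
  report('First call data: ' + data);
  // Cache hit: the read callback fires inside createFileReader(),
  // before the listener below is registered, so it is never called
  const reader2 = createFileReader(dataFile);
  reader2.onDataReady((data2) => report('Second call data: ' + data2));
});
</syntaxhighlight>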

=== Unpredictable Functions, Concl ===
Conclusions
* >> It is imperative for an API to clearly define its nature, either synchronous or asynchronous
* >> Bugs can be extremely complicated to identify and reproduce in a real application

=== Unpredictable Functions, Sync Sol ===
Solution - Synchronous API
>> Entire function converted to direct style
…
</syntaxhighlight>
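A minimal sketch of the direct-style solution, assuming the same in-memory cache idea; <code>consistentReadSync()</code> and the temporary file are hypothetical names.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

const cache = new Map();

// Direct style: always synchronous, the value is returned (no callback)
function consistentReadSync(filename) {
  if (cache.has(filename)) {
    return cache.get(filename);
  }
  const data = fs.readFileSync(filename, 'utf8'); // blocks the event loop
  cache.set(filename, data);
  return data;
}

// Usage with a hypothetical temporary file
const file = path.join(os.tmpdir(), 'nodejs-wiki-sync.txt');
fs.writeFileSync(file, 'v1');
const first = consistentReadSync(file);
fs.writeFileSync(file, 'v2');
const second = consistentReadSync(file); // served from the cache: still 'v1'
console.log(first, second);
</syntaxhighlight>

The behavior is now predictable, at the cost of blocking the event loop on every cache miss.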

=== Unpredictable Functions, Sync Sol Con't ===
Solution - Synchronous API
* Changing an API from CPS to direct style, or from asynchronous to synchronous (or vice versa), might also require changing the style of all the code using it
** This solution is strongly discouraged if we have to read many files only once

=== Unpredictable Functions, Deferred Sol ===
Solution - Deferred Execution
* Instead of running the synchronous callback immediately in the same event loop cycle, we schedule its invocation to be executed at the next pass of the event loop:
…
</syntaxhighlight>
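A sketch of deferred execution using the core <code>process.nextTick()</code>, which queues the callback to run once the current operation completes, before the event loop continues (<code>setImmediate()</code> would defer it to a later phase instead). <code>consistentReadAsync()</code>, its error-first signature and the temporary file are assumptions for illustration.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

const cache = new Map();

// Always asynchronous: a cache hit is deferred with process.nextTick(), so
// the callback never runs before consistentReadAsync() has returned
function consistentReadAsync(filename, callback) {
  if (cache.has(filename)) {
    process.nextTick(() => callback(null, cache.get(filename)));
    return;
  }
  fs.readFile(filename, 'utf8', (err, data) => {
    if (err) {
      return callback(err);
    }
    cache.set(filename, data);
    callback(null, data);
  });
}

// Usage: the second (cached) read no longer fires synchronously
const file = path.join(os.tmpdir(), 'nodejs-wiki-deferred.txt');
fs.writeFileSync(file, 'some data');

const events = [];
consistentReadAsync(file, (err, data) => {
  if (err) {
    return console.error(err.message);
  }
  events.push(`first: ${data}`);
  consistentReadAsync(file, (err2, data2) => {
    events.push(`second: ${data2}`);
    console.log(events);
  });
  events.push('second call returned');
});
</syntaxhighlight>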

=== Callback Conventions ===
* In Node.js, CPS APIs and callbacks follow a set of specific conventions; they apply to the Node.js core API and are followed by virtually every userland module
* Callbacks come last
…
</syntaxhighlight>

=== Callback Conventions - Propagating Errors ===
* Propagating errors in synchronous, direct style functions is done with the well-known throw statement (the error jumps up the call stack until it is caught)
* In asynchronous CPS, error propagation is done by passing the error to the next callback in the CPS chain
…
</syntaxhighlight>
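A sketch that follows both conventions (callback last, error first) and wraps <code>JSON.parse()</code> in a try-catch block, as described in the next slide. <code>readJSON()</code> and the temporary file names are assumptions for illustration.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Callback last, error first: callback(err) or callback(null, result)
function readJSON(filename, callback) {
  fs.readFile(filename, 'utf8', (err, data) => {
    if (err) {
      return callback(err); // propagate the I/O error and leave
    }
    let parsed;
    try {
      parsed = JSON.parse(data);
    } catch (parseErr) {
      // parsing errors are passed on instead of escaping to the event loop
      return callback(parseErr);
    }
    // called outside the try block, so errors thrown by the callback
    // itself are not mistaken for parsing errors
    callback(null, parsed);
  });
}

// Usage with hypothetical temporary files
const dir = os.tmpdir();
const validFile = path.join(dir, 'nodejs-wiki-valid.json');
const invalidFile = path.join(dir, 'nodejs-wiki-invalid.json');
const missingFile = path.join(dir, 'nodejs-wiki-missing.json');
fs.writeFileSync(validFile, '{"name":"node"}');
fs.writeFileSync(invalidFile, '{ not json');

const outcomes = {};
readJSON(validFile, (err, obj) => { outcomes.valid = err || obj; });
readJSON(invalidFile, (err) => { outcomes.invalid = err; });
readJSON(missingFile, (err) => { outcomes.missing = err; });
</syntaxhighlight>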

=== Callback Conventions - Uncaught Exceptions ===
* To prevent any exception from being thrown inside the fs.readFile() callback, we put a try-catch block around JSON.parse()
* Throwing inside an asynchronous callback causes the exception to jump up to the event loop, and it is never propagated to the next callback
* In Node.js, this is an unrecoverable state: the application simply shuts down, printing the error to stderr.

=== Uncaught Exceptions - Behavior ===
* In the case of an uncaught exception:
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

=== Uncaught Exceptions - Behavior Con't ===
* … would result in the following message being printed to the console
<pre>
…
* The application is aborted the moment an exception reaches the event loop!

=== Behavior - Node Anti-pattern ===
* Wrapping the invocation of readJSONThrows() with a try-catch block will not work
* The stack in which the block operates is different from the one in which our callback is invoked
…
</syntaxhighlight>

=== Uncaught Exceptions – "Last chance" ===
* Node.js emits a special event called uncaughtException (on the process object) just before exiting the process
<syntaxhighlight lang="javascript">
…
* It is always advisable, especially in production, to exit the application anyway after an uncaught exception is received.
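A sketch of a "last chance" handler that logs the error and then exits, as advised above. <code>lastChanceHandler</code> is a hypothetical name; its injectable <code>exit</code> parameter exists only so the handler can be exercised without terminating the process.

<syntaxhighlight lang="javascript">
// Log the error, then exit with a failure code: after an uncaught exception
// the application state may be inconsistent, so it should not keep running
function lastChanceHandler(err, exit = (code) => process.exit(code)) {
  console.error('This will finally catch the exception: ' + err.message);
  exit(1);
}

// The listener receives (err, origin); only the error is forwarded here
process.on('uncaughtException', (err) => lastChanceHandler(err));

// Anti-pattern (kept as a comment so it does not run): this try-catch cannot
// catch the error, because the callback is invoked later, on another stack
// try {
//   setTimeout(() => { throw new Error('boom'); }, 0);
// } catch (e) {
//   // never reached
// }
</syntaxhighlight>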

== Callback & Flow Control ==
Node.js & the callback discipline – Asynchronous Flow control patterns
* Introduction
* Parallel Execution

=== Intro ===
* Writing asynchronous code can be a different experience, especially when it comes to control flow
* Avoiding inefficient and unreadable code requires the developer to adopt new approaches and techniques
* Sacrificing qualities such as modularity, reusability, and maintainability leads to uncontrolled callback nesting, ever-growing functions, and poor code organization

=== The Callback Hell ===
Simple Web Spider
* Code for a simple web spider: a command-line application that takes a web URL as input and downloads its contents locally into a file.
** In fact, what we have is one of the most widely recognized and severe anti-patterns in Node.js and JavaScript

=== The Callback Hell Con't ===
Simple Web Spider
* The anti-pattern
** Overlapping variable names used in each scope (similar names describing the content of a variable >> err, error, err1, err2…)

=== The Callback Discipline ===
Basic principles - to keep the nesting level low and improve the organization of our code in general:
* Exit as soon as possible. Use return, continue, or break, depending on the context, to immediately exit the current statement
* Modularize the code >> Split the code into smaller, reusable functions whenever possible.

=== The Callback Discipline Con't ===
Basic principles
* Use
…
</syntaxhighlight>
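A sketch of the "exit as soon as possible" principle applied to callbacks: the same logic written with if...else and with an early return. <code>readOk()</code>, <code>readFails()</code> and both wrapper functions are hypothetical stubs used only for illustration.

<syntaxhighlight lang="javascript">
// Hypothetical asynchronous stubs used to exercise both versions
function readOk(callback) {
  process.nextTick(() => callback(null, 'hello'));
}
function readFails(callback) {
  process.nextTick(() => callback(new Error('read failed')));
}

// if...else: every further step would add one more indentation level
function upperWithElse(read, callback) {
  read((err, data) => {
    if (err) {
      callback(err);
    } else {
      callback(null, data.toUpperCase());
    }
  });
}

// Early return: handle the error and leave; the happy path stays flat
function upperWithEarlyReturn(read, callback) {
  read((err, data) => {
    if (err) {
      return callback(err); // without `return`, execution would continue below
    }
    callback(null, data.toUpperCase());
  });
}

const results = [];
for (const fn of [upperWithElse, upperWithEarlyReturn]) {
  fn(readOk, (err, value) => results.push(value));
  fn(readFails, (err) => results.push(err.message));
}
</syntaxhighlight>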

=== The Callback Discipline Example | === The Callback Discipline Example === | ||

Basic principles | Basic principles | ||

* The functionality that wris a given string to a file can be easily factored out into a separate function as follows | * The functionality that wris a given string to a file can be easily factored out into a separate function as follows | ||

| Line 646: | Line 647: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

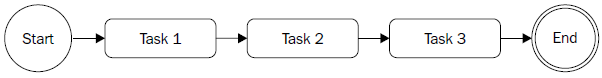
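A sketch of such a factored-out function using only core modules; <code>saveFile()</code> and the target path are hypothetical, and <code>fs.mkdir()</code> with <code>recursive: true</code> requires Node.js 10.12 or later.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper factored out of the spider: make sure the parent
// directory exists, then write the contents; errors go to the callback
function saveFile(filename, contents, callback) {
  // `recursive: true` creates missing parents and does not fail
  // if the directory already exists
  fs.mkdir(path.dirname(filename), { recursive: true }, (err) => {
    if (err) {
      return callback(err);
    }
    fs.writeFile(filename, contents, callback);
  });
}

// Usage with a hypothetical target path under the OS temp directory
const target = path.join(os.tmpdir(), 'nodejs-wiki-spider', 'example.com', 'index.html');
let saved = null;
saveFile(target, '<html></html>', (err) => {
  if (err) {
    return console.error('Save failed:', err.message);
  }
  saved = fs.readFileSync(target, 'utf8');
  console.log(`Saved ${target}`);
});
</syntaxhighlight>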

=== Sequential Execution ===
The Need
* Executing a set of tasks in sequence means running them one at a time, one after the other. The order of execution matters and must be preserved
[[File:SeqExec.png]]

=== Sequential Execution Con't ===
Pattern
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
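A sketch of the pattern for a set of tasks known in advance: each task receives, as its callback, the code that starts the next one. <code>task1()</code> to <code>task3()</code> and <code>runInSequence()</code> are hypothetical; the different delays show that the order is preserved regardless of duration.

<syntaxhighlight lang="javascript">
// Hypothetical asynchronous tasks with different durations
function task1(log, callback) {
  setTimeout(() => { log.push('task1'); callback(); }, 30);
}
function task2(log, callback) {
  setTimeout(() => { log.push('task2'); callback(); }, 10);
}
function task3(log, callback) {
  setTimeout(() => { log.push('task3'); callback(); }, 20);
}

// Each continuation starts the next task, so only one runs at a time
function runInSequence(log, finish) {
  task1(log, () => {
    task2(log, () => {
      task3(log, () => {
        finish();
      });
    });
  });
}

const log = [];
let finished = false;
runInSequence(log, () => {
  finished = true;
  console.log(log);
});
</syntaxhighlight>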

=== Sequential Execution Example ===
Web Spider Version 2
* Download all the links contained in a web page recursively
* The spider() function will use a function spiderLinks() for a recursive download of all the links of a page
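A simplified sketch of sequential asynchronous iteration over a collection, in the spirit of <code>spiderLinks()</code>. The signature used here and <code>fakeSpider()</code>, which only pretends to download a page, are assumptions for illustration.

<syntaxhighlight lang="javascript">
// Hypothetical stand-in for spider(): pretends to download a URL
function fakeSpider(url, nesting, callback) {
  setTimeout(() => callback(null, `downloaded ${url}`), 5);
}

// Sequential iteration: link i + 1 starts only after link i is done
function spiderLinks(links, nesting, onPage, callback) {
  if (nesting === 0) {
    return process.nextTick(callback); // maximum depth reached
  }
  function iterate(index) {
    if (index === links.length) {
      return callback(); // all links processed, in order
    }
    fakeSpider(links[index], nesting - 1, (err, page) => {
      if (err) {
        return callback(err); // stop at the first error
      }
      onPage(page);
      iterate(index + 1);
    });
  }
  iterate(0);
}

const pages = [];
let done = false;
spiderLinks(['/a', '/b', '/c'], 2, (page) => pages.push(page), (err) => {
  done = !err;
  console.log(pages);
});
</syntaxhighlight>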

=== Sequential Execution Pattern | === Sequential Execution Pattern === | ||

The Pattern | The Pattern | ||

<syntaxhighlight lang="javascript"> | <syntaxhighlight lang="javascript"> | ||

| Line 703: | Line 704: | ||

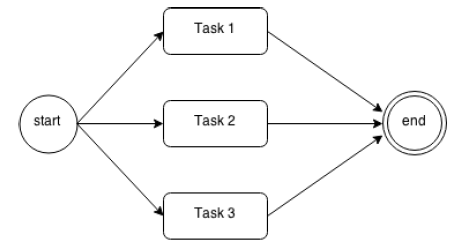

=== Parallel Execution | === Parallel Execution === | ||

The Need | The Need | ||

* The order of the execution of a set of asynchronous tasks is not important and all we want is just to be notified when all those running tasks are completed | * The order of the execution of a set of asynchronous tasks is not important and all we want is just to be notified when all those running tasks are completed | ||

| Line 709: | Line 710: | ||

[[File:ParallelExec.png]] | [[File:ParallelExec.png]] | ||

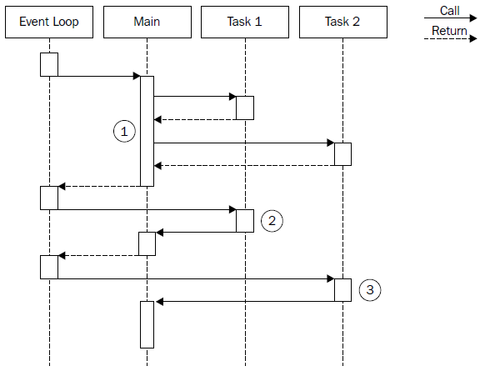

=== Parallel Execution Con't ===
Web Spider Version 3
[[File:ParallelExecDiag.png|480px]]

=== Parallel Execution Example ===
Web Spider Version 3
# The '''Main''' function triggers the execution of '''Task 1''' and '''Task 2'''. As these trigger an asynchronous operation, they immediately return control to the '''Main''' function, which then returns it to the event loop.
# When the asynchronous operation triggered by '''Task 2''' is completed, the event loop invokes its callback, giving control back to '''Task 2'''. At the end of '''Task 2''', the '''Main''' function is again notified. At this point, the '''Main''' function knows that both '''Task 1''' and '''Task 2''' are complete, so it can continue its execution or return the results of the operations to another callback …

=== Parallel Execution Example Con't ===
Web Spider Version 3
* Improve the performance of the web spider by downloading all the linked pages in parallel
** When the number of completed downloads reaches the size of the links array, the final callback is invoked

=== Parallel Execution Pattern ===
The Pattern
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
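A minimal sketch of the pattern, assuming every task is a function that accepts a Node-style completion callback. ''runInParallel'' is a hypothetical helper name: all tasks are started at once, a counter tracks completions, and the final callback fires when the counter reaches the number of tasks.

<syntaxhighlight lang="javascript">
// Hypothetical helper: runs all tasks in parallel and calls finish(err, results) once.
function runInParallel(tasks, finish) {
  const results = new Array(tasks.length);
  let completed = 0;
  let failed = false;

  if (tasks.length === 0) {
    return process.nextTick(() => finish(null, results));
  }

  tasks.forEach((task, index) => {
    // Every task is launched immediately; none waits for the others
    task((err, result) => {
      if (failed) return;            // an error was already reported
      if (err) {
        failed = true;
        return finish(err);
      }
      results[index] = result;       // keep results in task order
      if (++completed === tasks.length) {
        finish(null, results);       // the last task to complete triggers the final callback
      }
    });
  });
}

// Usage: two timers run concurrently, so the total time is about that of the slowest one
runInParallel([
  (cb) => setTimeout(() => cb(null, 'slow'), 50),
  (cb) => setTimeout(() => cb(null, 'fast'), 10),
], (err, results) => {
  if (err) return console.error(err);
  console.log(results); // [ 'slow', 'fast' ]
});
</syntaxhighlight>

Results are stored by index rather than pushed, so their order matches the task order even though completion order differs.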

== Module System, Patterns ==
* Module Intro
* Homemade Module Loader
* Modules worth knowing

=== Nodejs Modules ===
* Resolve one of the major problems with JavaScript: the absence of namespacing
* Are the bricks for structuring non-trivial applications
* Are the main mechanism to enforce '''information hiding''' (keeping private all the functions and variables that are not explicitly marked to be '''exported''')

=== Modules Con't ===
They are based on the '''revealing module pattern'''
* A self-invoking function to create a private scope, exporting only the parts that are meant to be public
* This pattern is used as a base for the Node.js module system
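A small illustration of the revealing module pattern in plain JavaScript; ''counterModule'' is a made-up example. The immediately invoked function creates the private scope, and only the returned object is visible outside.

<syntaxhighlight lang="javascript">
// The IIFE's scope keeps `count` private; only the returned members are public.
const counterModule = (() => {
  let count = 0;                  // private state, unreachable from outside

  function increment() {          // public (returned below)
    count += 1;
  }
  function current() {            // public (returned below)
    return count;
  }

  return { increment, current };  // "export" only these two functions
})();

counterModule.increment();
counterModule.increment();
console.log(counterModule.current()); // 2
console.log(counterModule.count);     // undefined
</syntaxhighlight>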

=== Modules Con't ===
Node.js Modules
* CommonJS is a group that aims to standardize the JavaScript ecosystem
** One of their most popular proposals is called CommonJS modules.
* Node.js built its module system on top of this specification, with the addition of some custom extensions:
** Each module runs in a private scope
** Every variable that is defined locally does not pollute the global namespace

=== Homemade Module Loader ===
The behavior of ''loadModule''
* The code that follows creates a function that mimics a subset of the functionality of the original ''require()'' function of Node.js
* We pass/inject a list of variables to the module, in particular: '''module''', '''exports''', and '''require'''.

=== Module Loader Con't ===
The behavior of ''require''
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

==== Behavior of require ====
The ''require()'' function of Node.js loads modules:
# With the module name we resolve the full path of the module
# The content of module.exports (the public API of the module) is returned to the caller
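A self-contained sketch of a homemade loader following the steps above. To avoid touching the filesystem, it assumes module sources live in a hypothetical in-memory ''sources'' registry, and the function is named ''homemadeRequire'' so it does not shadow the real ''require''.

<syntaxhighlight lang="javascript">
// Hypothetical in-memory "filesystem": module id -> source code
const sources = {
  './greeter': "const prefix = 'Hello, '; exports.greet = (name) => prefix + name;",
  './app': "const { greet } = require('./greeter'); module.exports = () => greet('world');",
};

// loadModule: wraps the source in a function and injects module, exports and require
function loadModule(id, module, requireFn) {
  const wrapped = new Function('module', 'exports', 'require', sources[id]);
  wrapped(module, module.exports, requireFn);
}

function homemadeRequire(moduleName) {
  const id = homemadeRequire.resolve(moduleName);   // 1. resolve the full id
  if (homemadeRequire.cache[id]) {                   // 2. already loaded: reuse it
    return homemadeRequire.cache[id].exports;
  }
  const module = { exports: {}, id };                // 3. fresh module object
  homemadeRequire.cache[id] = module;                // 4. cache before loading (supports cycles)
  loadModule(id, module, homemadeRequire);           // 5. evaluate the module code
  return module.exports;                             // 6. return the public API
}
homemadeRequire.cache = {};
homemadeRequire.resolve = (moduleName) => {
  if (!(moduleName in sources)) throw new Error(`Cannot find module '${moduleName}'`);
  return moduleName;                                 // ids are simply registry keys here
};

console.log(homemadeRequire('./app')()); // Hello, world
</syntaxhighlight>

The real ''require()'' reads files from disk and resolves paths with the algorithm described below; the in-memory registry only keeps the sketch runnable.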

=== Defining Modules & Globals ===
Defining a Module
* You need not worry about wrapping your code in a module
* The contents of this variable are then cached and returned when the module is loaded using require()

=== Modules & Globals Con't ===
Defining Globals
* All the variables and functions that are declared in a module are defined in its local scope
** The module system exposes a special variable called ''global'' that can be used for this purpose.
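A short illustration of the difference; ''appConfig'' is a hypothetical shared setting. A top-level declaration stays local to the module, while a property assigned to ''global'' is visible from every module.

<syntaxhighlight lang="javascript">
// Local to this module: other modules cannot see it
const localSetting = 'only visible in this file';

// Attached to the global object: visible from every module without require()
global.appConfig = { env: 'development' }; // hypothetical shared setting

console.log(appConfig.env);          // development
console.log(global.localSetting);    // undefined: module-level consts are not globals
</syntaxhighlight>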

=== exports & require ===
exports & module.exports: the variable '''exports''' is just a reference to the initial value of '''module.exports''' (a simple object literal created before the module is loaded). This means:
* We can only attach new properties to the object referenced by the '''exports''' variable, as shown in the following code:
<syntaxhighlight lang="javascript">exports.hello = function() { console.log('Hello'); };</syntaxhighlight>
…
<syntaxhighlight lang="javascript">module.exports = function() { console.log('Hello'); };</syntaxhighlight>
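A simulation of why reassigning ''exports'' has no effect. ''runModule'' is a hypothetical helper that mimics the wrapper: it passes ''module'' and ''module.exports'' to the module body and returns ''module.exports'', as ''require()'' does.

<syntaxhighlight lang="javascript">
// Hypothetical helper mimicking the module wrapper (not a Node API)
function runModule(body) {
  const mod = { exports: {} };
  body(mod, mod.exports);   // initially, exports === module.exports
  return mod.exports;       // the caller always receives module.exports
}

// 1. Adding properties to `exports` works
const a = runModule((module, exports) => { exports.hello = () => 'Hello'; });
// 2. Reassigning `exports` only rebinds a local variable: nothing is exported
const b = runModule((module, exports) => { exports = () => 'Hello'; });
// 3. To export something else entirely, reassign `module.exports`
const c = runModule((module) => { module.exports = () => 'Hello'; });

console.log(typeof a.hello, Object.keys(b).length, typeof c); // function 0 function
</syntaxhighlight>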

=== exports & require con't ===
require is synchronous
* Our homemade '''require''' function is synchronous: it returns the module contents using a simple direct style
* In its early days, Node.js had an asynchronous version of '''require()''', but it was removed because it overcomplicated a functionality that was meant to be used only at initialization time

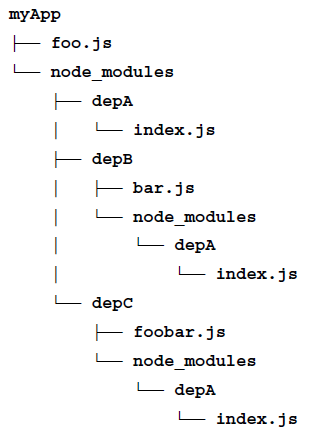

=== Resolving Algorithm ===
Dependency hell
* A situation in which the dependencies of a piece of software in turn depend on a shared dependency, but require different, incompatible versions of it
** The path is used to load its code and also to identify the module uniquely

=== Resolving Algorithm - Branches ===
The resolving algorithm can be divided into the following three major branches:
* '''File modules''': If ''moduleName'' starts with "/", it is considered an absolute path to the module and is returned as is. If it starts with "./" (or "../"), then ''moduleName'' is considered a relative path, which is calculated starting from the requiring module.
* '''Package modules''': If no core module is found matching ''moduleName'', the search continues by looking for a matching module in the first '''node_modules''' directory found by navigating up the directory structure, starting from the requiring module. The algorithm continues to search for a match in the next ''node_modules'' directory up the directory tree, until it reaches the root of the filesystem.

=== Resolving Algorithm, matching ===
* For file and package modules, both individual files and directories can match ''moduleName''. In particular, the algorithm will try to match the following:
** ''moduleName''.js
** The directory/file specified in the main property of ''moduleName''/package.json
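A simplified sketch of two pieces of the algorithm: the list of ''node_modules'' directories searched for a package module, and the candidate paths tried for a file or directory. It is not Node's real implementation (which also tries .json and .node files); POSIX paths are assumed, and ''nodeModulesDirs''/''candidatePaths'' are hypothetical names.

<syntaxhighlight lang="javascript">
const path = require('path').posix;

// Package modules: walk up from the requiring module's directory to the root
function nodeModulesDirs(fromDir) {
  const dirs = [];
  let current = fromDir;
  while (true) {
    if (path.basename(current) !== 'node_modules') {
      dirs.push(path.join(current, 'node_modules'));
    }
    const parent = path.dirname(current);
    if (parent === current) break;   // reached the filesystem root
    current = parent;
  }
  return dirs;
}

// File and package modules: candidates tried for a resolved base path
function candidatePaths(basePath) {
  return [
    `${basePath}.js`,                      // <moduleName>.js
    path.join(basePath, 'package.json'),   // its "main" property, if present
    path.join(basePath, 'index.js'),       // <moduleName>/index.js
  ];
}

console.log(nodeModulesDirs('/home/user/project/lib'));
console.log(candidatePaths('/home/user/project/node_modules/foo'));
</syntaxhighlight>

Node itself exposes the directories it would search through ''require.resolve.paths(request)'', which returns ''null'' for core modules.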

=== Resolving Algorithm, dependency ===
{|
|-
| …
|}

=== Module Cache ===
* Each module is loaded and evaluated only the first time it is required
** Any subsequent call of '''require()''' will simply return the cached version (… homemade require function)
* The module cache is exposed in the '''require.cache''' variable. It is possible to access it directly if needed (a common use case is to invalidate a cached module, which is useful during testing)
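A small demonstration of caching and invalidation, using a throwaway ''counter.js'' written to a temporary directory (the file and its contents are made up for the demo).

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'cache-demo-'));
const file = path.join(dir, 'counter.js');
fs.writeFileSync(file, 'module.exports = { hits: 0 };');

const first = require(file);
first.hits++;
const second = require(file);                 // served from require.cache, not re-evaluated
console.log(first === second, second.hits);   // true 1

delete require.cache[require.resolve(file)];  // invalidate the cached entry
const third = require(file);                  // the module is evaluated again
console.log(first === third, third.hits);     // false 0
</syntaxhighlight>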

=== Cycles ===
* Circular module dependencies can happen in a real project, so it is useful to know how they work in Node.js
* Module a.js
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

=== Cycles Con't ===
* If we load these from another module, main.js, as follows:
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
* b.js then finishes loading, and its exports object is provided to the a.js module.
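A runnable reproduction of a two-module cycle, assuming ''a.js'' and ''b.js'' contents of the kind commonly used for this demo (written to a temporary directory here, so they may differ from the elided originals). While ''a.js'' is still loading, ''b.js'' receives its partially filled exports.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'cycle-demo-'));
fs.writeFileSync(path.join(dir, 'a.js'), `
exports.loaded = false;
const b = require('./b');
module.exports = { bWasLoaded: b.loaded, loaded: true };
`);
fs.writeFileSync(path.join(dir, 'b.js'), `
exports.loaded = false;
const a = require('./a');   // a.js is still loading: this is its incomplete exports
module.exports = { aWasLoaded: a.loaded, loaded: true };
`);

// main: load a first, then b (already in the cache by now)
const a = require(path.join(dir, 'a.js'));
const b = require(path.join(dir, 'b.js'));
console.log(a); // { bWasLoaded: true, loaded: true }
console.log(b); // { aWasLoaded: false, loaded: true }
</syntaxhighlight>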

=== Module Definition Patterns ===
* Module System & APIs
* Patterns – Named Exports
* Monkey patching

==== Module System & APIs ====
* The module system, besides being a mechanism for loading dependencies, is also a tool for defining APIs
* The main factor to consider is the balance between private and public functionality
* The aim is to maximize information hiding and API usability, while balancing these with other software qualities like extensibility and code reuse.

==== Patterns – Named Exports ====
The most basic method for exposing a public API is using named exports
* It consists of assigning all the values we want to make public to properties of the object referenced by exports (or module.exports)
* The exported functions are then available as properties of the loaded module

==== Patterns – Named Exports Con't ====
<syntaxhighlight lang="javascript">
//file main.js
…
</syntaxhighlight>
* The use of module.exports is an extension provided by Node.js to support a broader range of module definition patterns …
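A minimal named-exports module, written as a hypothetical ''logger.js''. Because ''exports'' is never reassigned, it remains the same object that ''require()'' hands to consumers.

<syntaxhighlight lang="javascript">
// logger.js (hypothetical): each public value becomes a property of `exports`
exports.info = (message) => `info: ${message}`;
exports.verbose = (message) => `verbose: ${message}`;

// A consumer would write: const logger = require('./logger'); logger.info('hi');
console.log(module.exports === exports);  // true
console.log(module.exports.info('hi'));   // info: hi
</syntaxhighlight>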

==== Patterns – Exporting a Function (substack pattern) ====
* One of the most popular module definition patterns consists of reassigning the whole module.exports variable to a function.
* Its main strength is that it exposes only a single functionality, which provides a clear entry point for the module and makes it simple to understand and use
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

==== Substack pattern ====
* A possible extension of this pattern is using the exported function as a namespace for other public APIs.
* This is a very powerful combination, because it still gives the module the clarity of a single entry point (the main exported function)
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

==== Substack pattern con't ====
<syntaxhighlight lang="javascript">
//file main.js
…
</syntaxhighlight>
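A sketch of the substack pattern with its namespace extension, again using a hypothetical logger: the module itself is a function, and secondary features hang off it as properties.

<syntaxhighlight lang="javascript">
// logger.js (hypothetical): the main entry point is the exported function itself
module.exports = (message) => `info: ${message}`;
// Secondary API, namespaced under the main function
module.exports.verbose = (message) => `verbose: ${message}`;

// A consumer would write: const logger = require('./logger');
const logger = module.exports;
console.log(logger('This is an informational message')); // info: This is an informational message
console.log(logger.verbose('This is a verbose message')); // verbose: This is a verbose message
</syntaxhighlight>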

==== Patterns – Exporting a Constructor ====
* A specialization of a module that exports a function. The difference is that with this new pattern we allow the user to create new instances using the constructor
** We also give them the ability to extend its prototype and forge new classes
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

==== Exporting a Constructor ====
* We can use the preceding module as follows
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
** It gives much more power when it comes to extending its functionality

==== Exporting a Constructor Con't ====
* A variation of this pattern consists of applying a guard against invocations that don't use the '''new''' keyword.
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
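A sketch of an exported constructor with a guard, using a hypothetical ''Logger''. It relies on ''new.target'' (ES2015), which is ''undefined'' when the function is called without '''new'''; the classic equivalent is checking ''this instanceof Logger''.

<syntaxhighlight lang="javascript">
// logger.js (hypothetical)
function Logger(name) {
  if (!new.target) {          // called as Logger(...) instead of new Logger(...)
    return new Logger(name);  // repair the call transparently
  }
  this.name = name;
}
Logger.prototype.log = function (message) {
  return `[${this.name}] ${message}`;
};
module.exports = Logger;

// Consumers can instantiate it, with or without `new`...
const dbLogger = new Logger('DB');
const appLogger = Logger('APP');
console.log(appLogger instanceof Logger); // true
console.log(dbLogger.log('connected'));   // [DB] connected

// ...and extend it to forge new classes
class TimestampLogger extends Logger {
  log(message) {
    return `${new Date().toISOString()} ${super.log(message)}`;
  }
}
</syntaxhighlight>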

==== Patterns – Exporting an Instance ====
* We can leverage the caching mechanism of require() to easily define stateful instances:
** Objects with a state created from a constructor or a factory, which can be shared across different modules
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

==== Exporting an Instance ====
* This newly defined module can then be used as follows:
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
** In the resolving algorithm, we have seen that a module might be installed multiple times inside the dependency tree of an application.

==== Exporting an Instance Con't ====
* An extension to the pattern we just described consists of exposing the constructor used to create the instance, in addition to the instance itself. We can then:
** Create new instances of the same object
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
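A sketch combining both variants, with a hypothetical ''Logger'' class: the module exports a shared instance, and also exposes the constructor on it so consumers can create independent instances.

<syntaxhighlight lang="javascript">
// logger.js (hypothetical)
class Logger {
  constructor(name) {
    this.name = name;
    this.count = 0;   // state shared by every module that requires this file
  }
  log(message) {
    this.count++;
    return `[${this.name}] ${message}`;
  }
}

module.exports = new Logger('DEFAULT');  // the cached, shared instance
module.exports.Logger = Logger;          // extension: also expose the constructor

// What any consumer sees after `const logger = require('./logger')`:
const logger = module.exports;
logger.log('first');
logger.log('second');
console.log(logger.count);                  // 2
const custom = new logger.Logger('CUSTOM'); // a fresh, independent instance
console.log(custom.count);                  // 0
</syntaxhighlight>

The sharing only holds as long as every consumer resolves to the same copy of the module; as noted above, a package installed multiple times in the dependency tree yields separate instances.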

==== Patterns - Modifying modules or the global scope (monkey patching) ====
* A module can export nothing and modify the global scope and any object in it, including other modules in the cache
* Considered bad practice, but it can be useful and safe under some circumstances (testing) and is sometimes used in the wild
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

==== Monkey patching Con't ====
* Using our new patcher module would be as easy as writing the following code:
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
* Be careful: a patching module can affect the state of entities outside its own scope
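A runnable demo of monkey patching through the module cache. ''logger.js'' and ''patcher.js'' are hypothetical modules written to a temporary directory: the patcher exports nothing and only adds a function to the cached logger module.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'patch-demo-'));
fs.writeFileSync(path.join(dir, 'logger.js'),
  "exports.info = (msg) => 'info: ' + msg;");
// patcher.js exports nothing: it mutates the cached logger module
fs.writeFileSync(path.join(dir, 'patcher.js'),
  "require('./logger').customMessage = () => 'This is a new functionality';");

require(path.join(dir, 'patcher.js'));                // apply the patch first
const logger = require(path.join(dir, 'logger.js'));  // same cached object
console.log(logger.customMessage());                  // This is a new functionality
</syntaxhighlight>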

== npm modules worth knowing ==
* Frameworks and Tools
** <small>https://nodejs.dev/learn#nodejs-frameworks-and-tools</small>
** <small>https://www.npmtrends.com/node-fetch-vs-got-vs-axios-vs-superagent</small>

== Event Emitters - The Observer Pattern ==
* The Pattern – The EventEmitter
* Create and use EventEmitters
* Patterns

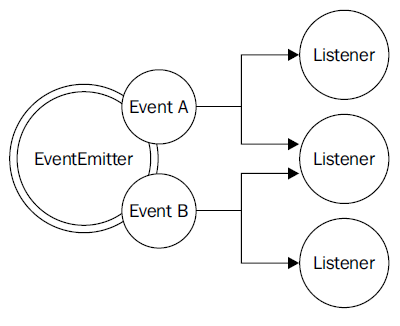

=== The Pattern – The EventEmitter ===
* The Observer Pattern
** A fundamental pattern used in Node.js (one of the pillars of the platform)
** Defines an object (called subject) which can notify a set of observers (or listeners) when a change in its state happens.

=== The Pattern – The EventEmitter Con't ===
* In traditional object-oriented languages, the pattern requires interfaces, concrete classes, and a hierarchy
* In Node.js it is already built into the core and is available through the EventEmitter class
** That class allows registering one or more functions as listeners, which will be invoked when a particular event type is fired

=== The Pattern – The EventEmitter as prototype ===
* The EventEmitter is a prototype, and it is exported from the events core module.
* The following code shows how we can obtain a reference to it:
[[File:EventEmitProto.png]]

=== The Pattern – The EventEmitter methods ===
*<syntaxhighlight lang="javascript" inline>on(event, listener)</syntaxhighlight>: registers a new listener (a function) for the given event type (a string)
*<syntaxhighlight lang="javascript" inline>once(event, listener)</syntaxhighlight>: registers a new listener, which is removed after the event is emitted for the first time
*<syntaxhighlight lang="javascript" inline>removeListener(event, listener)</syntaxhighlight>: removes a listener for the specified event type

=== The Pattern – The EventEmitter methods con't ===
* All the preceding methods, except '''emit()''' (which returns a boolean), return the EventEmitter instance to allow chaining.
* The listener function has the signature '''function([arg1], […])'''; it receives the arguments provided at the moment the event is emitted
* Inside the listener, '''this''' refers to the instance of the '''EventEmitter''' that produced the event (this holds for regular functions, not for arrow functions).
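A short tour of the methods above on a bare ''EventEmitter''; the ''greet'' event and listener names are made up. It shows chaining, the one-shot behavior of ''once()'', synchronous in-order delivery, and ''this'' inside a regular-function listener.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');

const emitter = new EventEmitter();
const received = [];

function onGreet(name) {
  // Regular function: `this` is the emitter that fired the event
  received.push(`${this === emitter ? 'emitter' : '?'} greeted ${name}`);
}

emitter
  .on('greet', onGreet)                                         // every 'greet'
  .once('greet', (name) => received.push(`first: ${name}`));    // only the first

emitter.emit('greet', 'Alice');   // both listeners run, synchronously, in registration order
emitter.emit('greet', 'Bob');     // the once() listener has been removed
emitter.removeListener('greet', onGreet);
console.log(emitter.emit('greet', 'Carol')); // false: no listeners left

// received now holds three entries: two from Alice's emit, one from Bob's
console.log(received);
</syntaxhighlight>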

=== Create and use EventEmitters ===
* Use an '''EventEmitter''' to notify its subscribers in real time when a particular pattern is found in a list of files:
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

=== Create and use EventEmitters Con't ===
* The EventEmitter created by the preceding function will produce the following three events:
** '''fileread''': This event occurs when a file is read
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
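A sketch of a ''findPattern()'' function along the lines described, emitting '''fileread''', '''found''' and '''error'''. The regex is expected to carry the ''g'' flag so that ''String.prototype.match'' returns every match; the file names in the usage are placeholders.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');
const fs = require('fs');

function findPattern(files, regex) {
  const emitter = new EventEmitter();
  for (const file of files) {
    fs.readFile(file, 'utf8', (err, content) => {
      if (err) {
        return emitter.emit('error', err);      // propagate errors as events
      }
      emitter.emit('fileread', file);
      for (const match of content.match(regex) || []) {
        emitter.emit('found', file, match);
      }
    });
  }
  return emitter;  // returned before any file is read, so listeners can still be attached
}

// Usage (placeholder file names)
findPattern(['fileA.txt', 'fileB.json'], /hello \w+/g)
  .on('fileread', (file) => console.log(`${file} was read`))
  .on('found', (file, match) => console.log(`Matched "${match}" in file ${file}`))
  .on('error', (err) => console.error(`Error emitted: ${err.message}`));
</syntaxhighlight>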

=== Propagating Errors ===
* The '''EventEmitter''', as happens with callbacks, cannot just throw exceptions when an error condition occurs
** They would be lost in the event loop if the event is emitted asynchronously!
** If no associated listener is found, Node.js will automatically throw an exception and exit from the program
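A quick check of how the special '''error''' event behaves with and without a listener.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');

let caught = null;
const unguarded = new EventEmitter();
try {
  unguarded.emit('error', new Error('boom'));   // no 'error' listener: emit() throws
} catch (err) {
  caught = err;
  console.log(`thrown: ${err.message}`);        // thrown: boom
}

const guarded = new EventEmitter();
guarded.on('error', (err) => console.log(`handled: ${err.message}`));
guarded.emit('error', new Error('boom'));       // prints "handled: boom", nothing is thrown
</syntaxhighlight>

Here the emit happens synchronously inside ''try'', so the error can be caught; when the same emit runs inside an asynchronous callback there is no surrounding ''try/catch'', and the thrown error crashes the process.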

=== Make an Object Observable ===
* Rather than always using a dedicated object to manage events, it is more common to make a generic object observable
** This is done by extending the EventEmitter class.
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
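A sketch of a ''FindRegex'' class that is itself observable because it extends ''EventEmitter''. The method names ''addFile()'' and ''find()'' are assumptions for illustration; both return ''this'' so calls and listener registrations can be chained.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');
const fs = require('fs');

class FindRegex extends EventEmitter {
  constructor(regex) {
    super();             // initialize the EventEmitter internals
    this.regex = regex;
    this.files = [];
  }

  addFile(file) {        // hypothetical method name
    this.files.push(file);
    return this;
  }

  find() {               // hypothetical method name
    for (const file of this.files) {
      fs.readFile(file, 'utf8', (err, content) => {
        if (err) {
          return this.emit('error', err);
        }
        this.emit('fileread', file);
        for (const match of content.match(this.regex) || []) {
          this.emit('found', file, match);
        }
      });
    }
    return this;         // events fire later, so listeners attached now still receive them
  }
}
</syntaxhighlight>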

=== Make an Object Observable - usage ===
<syntaxhighlight lang="javascript">
const findRegexInstance = new FindRegex(/hello \w+/)
…
</syntaxhighlight>

=== Make an Object Observable - more ===
More
* The Server object of the core http module defines methods such as '''listen(), close(), setTimeout()'''
* Other notable examples of objects extending the '''EventEmitter''' are streams.

=== Synchronous & Asynchronous Events ===
Events & The event loop
* The '''EventEmitter''' calls all listeners synchronously in the order in which they were registered.
* The main difference between emitting synchronous or asynchronous events is in the way listeners can be registered

=== Synchronous & Asynchronous Events Con't ===
* Asynchronous Events
** The user has all the time to register new listeners even after the '''EventEmitter''' is initialized (why?)
* …
** The event is produced synchronously and the listener is registered after the event was already sent, so the listener is never invoked and the code prints nothing to the console
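A side-by-side sketch with two made-up classes: ''SyncEmit'' fires its event during construction, before anyone can listen, while ''AsyncEmit'' defers it with ''process.nextTick()'', leaving time to register a listener.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');

class SyncEmit extends EventEmitter {
  constructor() {
    super();
    this.emit('ready');                           // fires before anyone can listen
  }
}

class AsyncEmit extends EventEmitter {
  constructor() {
    super();
    process.nextTick(() => this.emit('ready'));   // fires after the current operation completes
  }
}

const calls = [];
new SyncEmit().on('ready', () => calls.push('sync'));    // registered too late: never called
new AsyncEmit().on('ready', () => calls.push('async'));  // registered in time

console.log(calls.length);                    // 0: nothing has fired yet
process.nextTick(() => console.log(calls));   // [ 'async' ]
</syntaxhighlight>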

=== EventEmitters vs Callbacks ===
Reusability
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>

=== EventEmitters vs Callbacks Con't ===
* The two functions are equivalent: one communicates the completion of the timeout using an event, the other uses a callback
** Callbacks have some limitations when it comes to supporting different types of events
** Using an EventEmitter, it is possible for multiple listeners to receive the same notification (loose coupling)
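Two equivalent ways of signalling that a timeout has completed, in the spirit of the comparison above (''helloEvents'' and ''helloCallback'' are illustrative names). Only the event-based version lets several independent listeners receive the same notification.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');

// 1. Event-based: returns an emitter; any number of listeners can subscribe
function helloEvents() {
  const emitter = new EventEmitter();
  setTimeout(() => emitter.emit('hello', 'hello world'), 100);
  return emitter;
}

// 2. Callback-based: exactly one callback receives the result
function helloCallback(callback) {
  setTimeout(() => callback('hello world'), 100);
}

const events = helloEvents();
events.on('hello', (msg) => console.log(`listener 1: ${msg}`));
events.on('hello', (msg) => console.log(`listener 2: ${msg}`)); // second, independent consumer

helloCallback((msg) => console.log(`callback: ${msg}`));
</syntaxhighlight>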

=== Combine Callbacks & EventEmitters ===
* The Pattern
** A useful pattern for keeping the API surface small, done by:
** At the same time, the function returns an EventEmitter that provides a more fine-grained report on the state of the process

=== Combine Callbacks & EventEmitters Con't ===
* Exposing a simple, clean, and minimal entry point
** while still providing more advanced or less important features with secondary means
<syntaxhighlight lang="javascript">
…
</syntaxhighlight>
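A sketch of the combination using a hypothetical ''processItems()'' function: the callback is the minimal entry point delivering the final result, while the returned emitter reports per-item progress for callers who want it.

<syntaxhighlight lang="javascript">
const { EventEmitter } = require('events');

// Hypothetical function: upper-cases each item, reporting progress along the way
function processItems(items, callback) {
  const emitter = new EventEmitter();
  // Defer the work so the caller can attach listeners to the returned emitter first
  process.nextTick(() => {
    const results = [];
    for (const item of items) {
      const result = item.toUpperCase();
      results.push(result);
      emitter.emit('item', item, result);   // fine-grained progress report
    }
    callback(null, results);                // main, minimal entry point
  });
  return emitter;
}

processItems(['a', 'b'], (err, results) => console.log('done:', results))
  .on('item', (item, result) => console.log(`processed ${item} -> ${result}`));
</syntaxhighlight>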

== Buffers & Data Serialization ==
* Introduction
* Changing Encodings

=== Introduction ===
* The need for Buffers
** The ability to serialize data is fundamental to cross-application and cross-network communication.
*** Buffers are exposed globally and therefore don’t need to be required, and can be thought of as just another JavaScript type (like String or Number)

=== Changing Encodings ===

Buffers to Plain Text

* If no encoding is given, file operations and many network operations will return data as a Buffer:


* By default, Node’s core APIs return a Buffer unless an encoding is specified, but Buffers are easily converted to other formats
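A minimal sketch of this behaviour, using the synchronous `fs` calls for brevity. The asynchronous `fs.readFile()` follows the same rule. The temporary file name `encoding-demo.txt` is an assumption made for the demo.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical demo file in the OS temp directory
const file = path.join(os.tmpdir(), 'encoding-demo.txt');
fs.writeFileSync(file, 'Alice\nBob\n');

// No encoding given: the contents come back as a Buffer
const raw = fs.readFileSync(file);
console.log(Buffer.isBuffer(raw)); // true

// Encoding given: the contents come back as a string
const text = fs.readFileSync(file, 'utf8');
console.log(typeof text); // string

// The Buffer can still be converted afterwards
console.log(raw.toString('utf8') === text); // true

fs.unlinkSync(file); // clean up the demo file
</syntaxhighlight>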

=== Changing Encodings Con't ===

Buffers to Plain Text

* File '''names.txt''' that contains:


</pre>

=== Changing Encodings - types ===

Buffers to Plain Text

* The Buffer class provides a method called '''toString()''' that converts the data into a string, using UTF-8 by default:


* The Buffer API supports other encodings as well, such as '''utf16le''', '''base64''', and '''hex'''
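A short illustration of the same two bytes rendered in several of these encodings. The string `'Hi'` is an arbitrary example.

<syntaxhighlight lang="javascript">
const buf = Buffer.from('Hi'); // bytes 0x48 0x69

console.log(buf.toString());         // Hi (UTF-8 is the default)
console.log(buf.toString('hex'));    // 4869
console.log(buf.toString('base64')); // SGk=

// The encoding also matters when creating a Buffer from a string
const wide = Buffer.from('Hi', 'utf16le');
console.log(wide.toString('hex'));     // 48006900 (two bytes per character)
console.log(wide.toString('utf16le')); // Hi
</syntaxhighlight>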

=== Changing Encodings - auth header ===

Changing String Encodings - Authentication header

* The Node Buffer API provides a mechanism to change encodings.


</syntaxhighlight>

=== Changing Encodings - auth header con't ===

Changing String Encodings - Authentication header

* Convert it into a Buffer in order to change it into another encoding.


</syntaxhighlight>
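A minimal sketch of building an HTTP Basic `Authorization` header value with the Buffer API. The credentials `user`/`pass` are placeholders. Base64 here is only an encoding, not encryption.

<syntaxhighlight lang="javascript">
// Placeholder credentials for illustration only
const user = 'user';
const pass = 'pass';

// Basic auth sends "username:password" encoded as Base64
const encoded = Buffer.from(`${user}:${pass}`).toString('base64');
const header = `Basic ${encoded}`;
console.log(header); // Basic dXNlcjpwYXNz

// Reversing the process: Base64 string -> Buffer -> UTF-8 string
const decoded = Buffer.from(encoded, 'base64').toString('utf8');
console.log(decoded); // user:pass
</syntaxhighlight>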

=== Changing Encodings - data URI ===

* Data URIs allow a resource to be embedded inline on a web page using the following scheme:

<pre>data:[MIME-type][;charset=<encoding>][;base64],<data></pre>


</syntaxhighlight>
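A minimal sketch of assembling a data URI from a Buffer. It uses a small text payload as an assumption to keep the example self-contained. In practice the Buffer would often be an image read with `fs.readFileSync()`, paired with a matching MIME type.

<syntaxhighlight lang="javascript">
// Arbitrary example payload and MIME type
const mime = 'text/plain';
const payload = Buffer.from('Hello');

// data:<MIME-type>;base64,<Base64-encoded bytes>
const dataUri = `data:${mime};base64,${payload.toString('base64')}`;
console.log(dataUri); // data:text/plain;base64,SGVsbG8=
</syntaxhighlight>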

=== Changing Encodings - data URI con't ===

* The other way around:

<syntaxhighlight lang="javascript">


</syntaxhighlight>
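A minimal sketch of decoding a Base64 data URI back into a Buffer. The example URI carries the text `Hello` and is an assumption. The code also assumes the URI is well formed and Base64-encoded; it does not validate the prefix.

<syntaxhighlight lang="javascript">
// Example data URI carrying the text "Hello"
const uri = 'data:text/plain;base64,SGVsbG8=';

// Everything after the first comma is the Base64 payload
const base64Data = uri.slice(uri.indexOf(',') + 1);
const buf = Buffer.from(base64Data, 'base64');

console.log(buf.toString()); // Hello
// The bytes could then be written to disk, e.g. with fs.writeFileSync(name, buf)
</syntaxhighlight>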

== Streams - Types, Usage & Implementation, Flow Control ==

* Importance of Streams

* Anatomy of a Stream


* Flow Control With Streams

=== Importance of Streams ===

Introduction

* Streams are one of the most important components and patterns of Node.js


** Sending the output as soon as it is produced by the application

=== Importance of Streams Con't ===

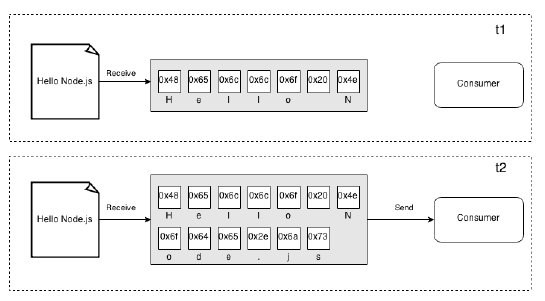

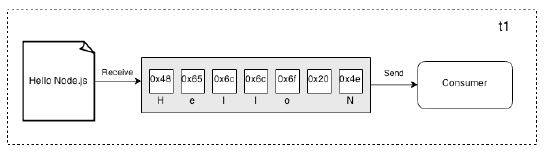

Buffers vs Streams

* All the asynchronous APIs that we've seen so far work in buffer mode.


[[File:BufVSstream1.png]]

=== Buffers vs Streams Con't ===

* Streams, on the other hand, allow you to process the data as soon as it arrives from the resource

** Each new chunk of data received from the resource is immediately provided to the consumer, which can process it straight away


[[File:BufVSstream2.png]]
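A minimal sketch contrasting the two modes on the same temporary file. The name `stream-demo.txt` is an assumption. `fs.readFile()` hands over the whole content at once. `fs.createReadStream()` delivers it chunk by chunk, and the deliberately small `highWaterMark` only forces several chunks for the demo.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical 100-byte demo file in the OS temp directory
const file = path.join(os.tmpdir(), 'stream-demo.txt');
fs.writeFileSync(file, 'x'.repeat(100));

// Buffer mode: the callback runs once, after the whole file is in memory
fs.readFile(file, (err, data) => {
  if (err) throw err;
  console.log(`buffer mode: one Buffer of ${data.length} bytes`);
});

// Stream mode: each chunk is handed over as soon as it has been read
const chunkSizes = [];
const stream = fs.createReadStream(file, { highWaterMark: 32 });
stream.on('data', (chunk) => chunkSizes.push(chunk.length));
stream.on('end', () => console.log('stream mode chunk sizes:', chunkSizes));
</syntaxhighlight>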

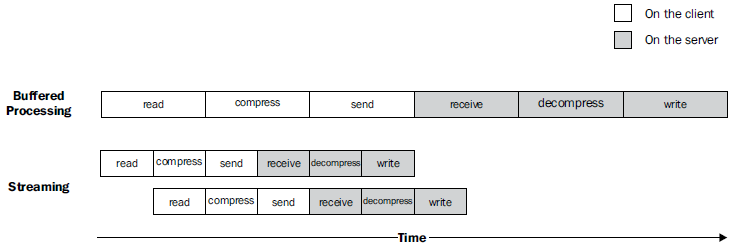

=== Buffers vs Streams – Time Efficiency ===

[[File:BufVSstream3.png]]

=== Buffers vs Streams – Composability ===

* The code we have seen so far has already given us an overview of how streams can be composed, thanks to pipes

* This is possible because streams have a uniform interface

** The only prerequisite is that the next stream in the pipeline has to support the data type produced by the previous stream (binary, text, or even objects)
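A minimal sketch of composition. Every stage accepts the binary data produced by the stage before it: file bytes, then a gzip compressor, then a file. It uses `stream.pipeline()`, which forwards errors from any stage and cleans up, instead of chaining `.pipe()` calls. The helper `gzipFile()` and the file names are hypothetical.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');
const zlib = require('zlib');
const { pipeline } = require('stream');

// Hypothetical helper: file -> gzip -> file
function gzipFile(input, output, callback) {
  pipeline(
    fs.createReadStream(input),   // produces binary chunks
    zlib.createGzip(),            // consumes and produces binary chunks
    fs.createWriteStream(output), // consumes binary chunks
    callback                      // called once, with an error if any stage failed
  );
}

// Hypothetical demo files in the OS temp directory
const input = path.join(os.tmpdir(), 'compose-demo.txt');
const output = `${input}.gz`;
fs.writeFileSync(input, 'streams compose nicely\n'.repeat(1000));

gzipFile(input, output, (err) => {
  if (err) {
    console.error('pipeline failed:', err);
    return;
  }
  console.log('compressed size:', fs.statSync(output).size);
});
</syntaxhighlight>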

=== Streams and Node.js core ===

* Streams are powerful and are everywhere in Node.js, starting from its core modules.

** The '''fs''' module has '''createReadStream()''' and '''createWriteStream()'''


streaming interface.

=== Anatomy of a Stream ===

* Every stream in Node.js is an implementation of one of the four base abstract classes available in the stream core module:

** '''stream.Readable'''


** Object mode: the streaming data is treated as a sequence of discrete objects (allowing almost any JavaScript value to be used)
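A short illustration of object mode. It uses `stream.Readable.from()`, available in recent Node.js versions, which builds a Readable from an iterable and enables object mode by default. The user records are arbitrary example data.

<syntaxhighlight lang="javascript">
const { Readable } = require('stream');

// Readable.from() creates an object-mode stream by default:
// every array element becomes one chunk, whatever its type
const users = Readable.from([{ name: 'Ada' }, { name: 'Linus' }]);
console.log(users.readableObjectMode); // true

const names = [];
users.on('data', (user) => names.push(user.name));
users.on('end', () => console.log(names)); // [ 'Ada', 'Linus' ]
</syntaxhighlight>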

=== Readable Streams ===

* Implementation

** Represents a source of data


*** Flowing

=== Non-Flowing mode ===

* Default pattern for reading from a Readable stream

* Consists of attaching a listener for the readable event that signals the availability of new data to read.


* The data is read exclusively from within the readable listener, which is invoked as soon as new data is available
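A minimal sketch of non-flowing reading. It assumes a hypothetical in-memory source, a Readable whose data is pushed up front and whose `read()` implementation is a no-op, standing in for a file or socket. Data is pulled with `read()` from inside the `readable` listener until the internal buffer is empty.

<syntaxhighlight lang="javascript">
const { Readable } = require('stream');

// Hypothetical in-memory source standing in for a file or socket
const source = new Readable({ read() {} });
source.push('hello ');
source.push('world');
source.push(null); // no more data

let received = '';
// Non-flowing mode: wait for 'readable', then pull data explicitly
source.on('readable', () => {
  let chunk;
  // read() returns null once the internal buffer is empty
  while ((chunk = source.read()) !== null) {
    received += chunk.toString();
  }
});
source.on('end', () => console.log(received)); // hello world
</syntaxhighlight>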

=== Non-Flowing mode Con't ===

* When a stream is working in binary mode, we can read a specific amount of data by passing a '''size''' value to the '''read()''' method

* This is particularly useful when implementing network protocols or when parsing specific data formats

* The streaming paradigm is a '''universal interface''', which enables our programs to communicate, regardless of the language they are written in.
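A minimal sketch of reading fixed-size records with `read(size)`. The 4-byte record format and the in-memory source are assumptions for the demo. Per the Node.js documentation, `read(size)` returns `null` until `size` bytes are buffered. Once the stream has ended, it returns whatever remains.

<syntaxhighlight lang="javascript">
const { Readable } = require('stream');

// Hypothetical source: 4-byte records followed by a shorter tail
const source = new Readable({ read() {} });
source.push(Buffer.from('ABCDEFGHIJ'));
source.push(null);

const records = [];
source.on('readable', () => {
  let record;
  // Pull exactly 4 bytes at a time; at the end, the remainder is returned
  while ((record = source.read(4)) !== null) {
    records.push(record.toString());
  }
});
source.on('end', () => console.log(records)); // [ 'ABCD', 'EFGH', 'IJ' ]
</syntaxhighlight>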

=== Flowing mode ===

* Another way to read from a stream is by attaching a listener to the data event

* This switches the stream into '''flowing''' mode, where the data is '''not pulled''' using '''read()''', but instead is '''pushed''' to the data listener as soon as it arrives


* To temporarily stop the stream from emitting data events, we can then invoke the '''pause()''' method, causing any incoming data to be cached in the internal buffer.
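A minimal sketch of flowing mode with `pause()` and `resume()`. The three-element `Readable.from()` source is only demo data. The 10 ms `setTimeout` stands in for hypothetical slow processing of each chunk.

<syntaxhighlight lang="javascript">
const { Readable } = require('stream');

const source = Readable.from(['a', 'b', 'c']); // object-mode demo source

const chunks = [];
// Attaching a 'data' listener switches the stream to flowing mode
source.on('data', (chunk) => {
  chunks.push(chunk);
  // Stop 'data' events while the (simulated) slow work runs;
  // data arriving meanwhile stays in the internal buffer
  source.pause();
  setTimeout(() => source.resume(), 10);
});
source.on('end', () => console.log(chunks.join(''))); // abc
</syntaxhighlight>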

=== Readable Stream implementation ===

* Now that we know how to read from a stream, the next step is to learn how to implement a new Readable stream.

** Create a new class by inheriting the prototype of '''stream.Readable'''


* '''read()''' is a method called by the stream consumers, while '''_read()''' is a method to be implemented by a stream subclass and should never be called directly (the underscore indicates that the method is not public)

=== Readable Stream implementation Con't ===

<pre>stream.Readable.call(this, options);</pre>

* Call the constructor of the parent class to initialize its '''internal state''', and forward the options argument received as input.


*** The threshold of data stored in the internal buffer above which the stream stops reading from the source (highWaterMark defaults to 16 KB before Node.js 22 and to 64 KiB from Node.js 22 onwards)
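A minimal sketch in the prototype-based style used above: `stream.Readable.call(this, options)` plus `util.inherits()`. The `Counter` stream and its `max` argument are hypothetical. Current Node.js code would more commonly write `class Counter extends stream.Readable`.

<syntaxhighlight lang="javascript">
const stream = require('stream');
const util = require('util');

// Hypothetical stream that emits the numbers 1..max as strings
function Counter(max, options) {
  stream.Readable.call(this, options); // initialise the internal state
  this.current = 1;
  this.max = max;
}
util.inherits(Counter, stream.Readable);

// Called by the stream machinery whenever it wants more data;
// consumers call read(), never _read() directly
Counter.prototype._read = function () {
  if (this.current > this.max) {
    this.push(null); // signal the end of the stream
    return;
  }
  this.push(String(this.current++));
};

// The encoding option makes consumers receive strings instead of Buffers
const counter = new Counter(3, { encoding: 'utf8' });
let output = '';
counter.on('data', (chunk) => { output += chunk; });
counter.on('end', () => console.log(output)); // 123
</syntaxhighlight>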

=== Writable Streams ===

Write in a Stream

* A writable stream represents a data destination


<pre>writable.write(chunk, [encoding], [callback])</pre>

=== Write in a Stream Con't ===

* To signal that no more data will be written to the stream, we have to use the '''end()''' method

<pre>writable.end([chunk], [encoding], [callback])</pre>


** Fired when all the data written in the stream has been flushed into the underlying resource.
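A minimal sketch of `write()` and `end()` on a file-backed Writable. The file name `writable-demo.txt` is an assumption. The callback passed to `end()` runs once the stream has finished, which is when all written data has been flushed to the file.

<syntaxhighlight lang="javascript">
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical output file in the OS temp directory
const file = path.join(os.tmpdir(), 'writable-demo.txt');
const out = fs.createWriteStream(file);

out.write('first line\n');
// Optional encoding and per-chunk callback
out.write('second line\n', 'utf8', (err) => {
  if (err) console.error('write failed:', err);
});

// end() can write one last chunk; its callback runs when the stream finishes
out.end('last line\n', () => {
  console.log(fs.readFileSync(file, 'utf8'));
});
</syntaxhighlight>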

=== Writable Stream Implementation ===

* To implement a new Writable stream

** Inherit the prototype of '''stream.Writable'''
