Natural Language Processing in Python

1. Accessing Text Corpora and Lexical Resources ¶

A text corpus is a structured collection of texts. In practice, it is usually a collection of files inside a directory.

Corpora can be categorized by genre or topic. The categories may overlap: one text may belong to more than one genre or topic.

1.1. Common Sources for Corpora ¶

The nltk library provides a number of built-in corpora. They all live inside the nltk.corpus subpackage. One of them is named gutenberg.

Corpora may need to be downloaded first. Execute the following code:

import nltk

print(nltk.corpus.gutenberg.fileids())

If it results in an error:

**********************************************************************

Resource u'corpora/gutenberg' not found. Please use the NLTK

Downloader to obtain the resource: >>> nltk.download()

Searched in:

- '/home/chris/nltk_data'

- '/usr/share/nltk_data'

- '/usr/local/share/nltk_data'

- '/usr/lib/nltk_data'

- '/usr/local/lib/nltk_data'

**********************************************************************

You need to open the NLTK downloader by typing:

nltk.download()

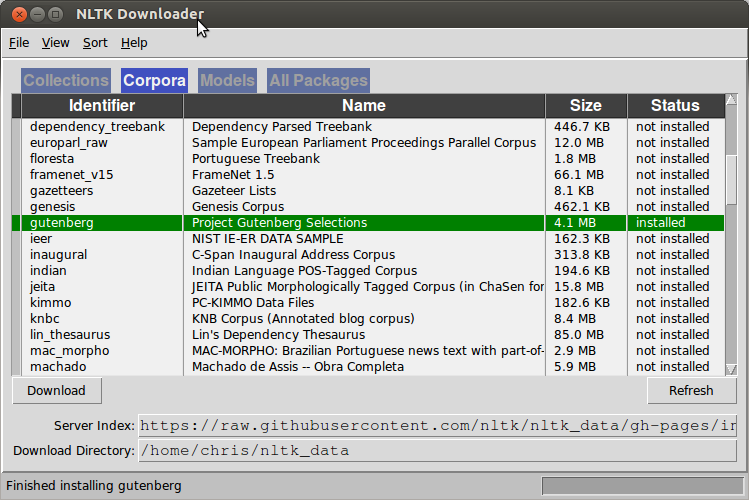

A new window appears. Go to the “Corpora” tab and install the corpora you need. This is what the window looks like:

Once you have done this, you can close the window and execute the following code again. It prints the file names in this corpus.

import nltk

nltk.corpus.gutenberg.fileids()

[u'austen-emma.txt',

u'austen-persuasion.txt',

u'austen-sense.txt',

u'bible-kjv.txt',

u'blake-poems.txt',

u'bryant-stories.txt',

u'burgess-busterbrown.txt',

u'carroll-alice.txt',

u'chesterton-ball.txt',

u'chesterton-brown.txt',

u'chesterton-thursday.txt',

u'edgeworth-parents.txt',

u'melville-moby_dick.txt',

u'milton-paradise.txt',

u'shakespeare-caesar.txt',

u'shakespeare-hamlet.txt',

u'shakespeare-macbeth.txt',

u'whitman-leaves.txt']

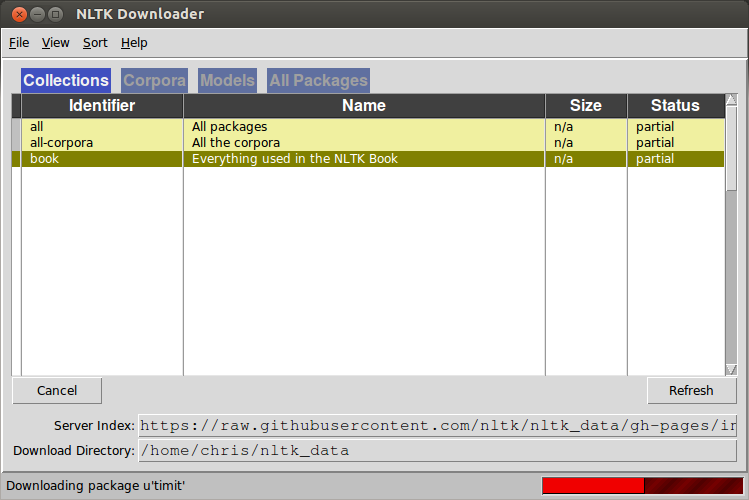

We’ll also use some other corpora, so please download the entire collection:

nltk.download()

In the collections tab, select book collection and click download. Wait while all files are downloaded.

nltk looks for corpora in the directories listed in nltk.data.path. You can add your own directory to this list:

nltk.data.path

['/home/chris/nltk_data',

'/usr/share/nltk_data',

'/usr/local/share/nltk_data',

'/usr/lib/nltk_data',

'/usr/local/lib/nltk_data']

The most important corpus methods¶

from nltk.corpus import gutenberg

raw(filename) lets you get the file content as a string:

print(gutenberg.raw('bible-kjv.txt')[:500]) # display only first 500 characters

[The King James Bible]

The Old Testament of the King James Bible

The First Book of Moses: Called Genesis

1:1 In the beginning God created the heaven and the earth.

1:2 And the earth was without form, and void; and darkness was upon

the face of the deep. And the Spirit of God moved upon the face of the

waters.

1:3 And God said, Let there be light: and there was light.

1:4 And God saw the light, that it was good: and God divided the light

from the darkness.

1:5 And God called the light Da

raw() without arguments returns the raw content of the entire corpus, with all files concatenated:

print(gutenberg.raw()[:500])

[Emma by Jane Austen 1816]

VOLUME I

CHAPTER I

Emma Woodhouse, handsome, clever, and rich, with a comfortable home

and happy disposition, seemed to unite some of the best blessings

of existence; and had lived nearly twenty-one years in the world

with very little to distress or vex her.

She was the youngest of the two daughters of a most affectionate,

indulgent father; and had, in consequence of her sister's marriage,

been mistress of his

words(filename) splits the file content into a list of words:

gutenberg.words('bible-kjv.txt')

[u'[', u'The', u'King', u'James', u'Bible', u']', ...]

sents(filename) returns a list of sentences, where each sentence is represented as a list of words:

gutenberg.sents('bible-kjv.txt')

[[u'[', u'The', u'King', u'James', u'Bible', u']'], [u'The', u'Old', u'Testament', u'of', u'the', u'King', u'James', u'Bible'], ...]

abspath(filename) returns the absolute path to a given corpus file. This is useful if you want to know where it lives.

gutenberg.abspath('bible-kjv.txt')

FileSystemPathPointer(u'/home/chris/nltk_data/corpora/gutenberg/bible-kjv.txt')

Exercise¶

Explore the file austen-persuasion.txt from the gutenberg corpus. How many words does the file have? How many different words are there?

New concepts introduced in this exercise:

- len()

- set()

Solution¶

We can get a list of words and count them:

words = gutenberg.words('austen-persuasion.txt')

len(words)

98171

Given the list of words, we can remove duplicates by converting it to a set and then count the unique words.

len(set(words))

6132

However, if we don’t care about letter case and don’t want to treat own and Own as two different words, we can lowercase all words:

all_words = len(set(w.lower() for w in words))

all_words

5835

And if we want to filter out punctuation:

unique = len(set(w.lower() for w in words if w.isalpha()))

unique

5739

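You can compare the two counts by dividing them. Both operands are integers, so in Python 2 the division truncates and the result is 1; convert one operand with float() to get the exact ratio: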

all_words / unique

1
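The counting steps of this solution can be tried without downloading any corpus. The following is a minimal sketch on a hypothetical list of tokens (the sample and the variable names are assumptions made for illustration, not part of the exercise). It also divides with float(), so the ratio is not truncated in Python 2.

```python
# Hypothetical sample; any list of string tokens works the same way.
tokens = ['Own', 'your', 'own', 'words', ',', 'own', 'them', '.']

total = len(tokens)                                   # every token, punctuation included
distinct = len(set(tokens))                           # case-sensitive distinct tokens
distinct_lower = len(set(t.lower() for t in tokens))  # 'Own' and 'own' collapse into one
distinct_alpha = len(set(t.lower() for t in tokens if t.isalpha()))  # punctuation dropped

# float() forces true division in both Python 2 and Python 3.
ratio = total / float(distinct_alpha)

print('{} {} {} {}'.format(total, distinct, distinct_lower, distinct_alpha))
print(ratio)
```

Each filtering step can only shrink the count of distinct words, which is the same pattern as the numbers in the solution (6132, then 5835, then 5739).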

Exercise¶

A trigram is a tuple of three words. You can generate trigrams for any text:

words = 'The Tragedie of Macbeth by William Shakespeare . The Tragedie is great .'.split(' ')

nltk.trigrams(words)

<generator object trigrams at 0xadf7a2c>
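nltk.trigrams returns a generator, so nothing is displayed until you iterate over it or wrap it in list(). To see what kind of tuples it produces, here is a rough pure-Python equivalent built on zip. The helper name make_trigrams is hypothetical and only approximates the library function for illustration.

```python
def make_trigrams(tokens):
    """Return consecutive (w1, w2, w3) tuples; a hypothetical stand-in for nltk.trigrams."""
    tokens = list(tokens)
    return list(zip(tokens, tokens[1:], tokens[2:]))

words = 'The Tragedie of Macbeth by William Shakespeare .'.split(' ')
for triple in make_trigrams(words):
    print(triple)
```

A text with n words yields n - 2 trigrams, and a text with fewer than three words yields none.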

You can generate random text by following this algorithm:

- Start from two random words. The random words can be considered the seed. Output both words.

- Output any word that follows the last two output words somewhere in the corpus.

- Repeat the previous step as many times as you want.

Write a program that generates random text using this algorithm. Hint: use random.choice to choose randomly one element from a list. New concepts introduced in this exercise:

- nltk.trigrams

- tuples (pairs, unpacking)

- dictionaries (creating new key, in, accessing existing key, iterating)

- list.append

- generate the mapper

- defaultdict

- generate the mapper with defaultdict

- str.lower()

- list comprehension

- str.isalpha()

- show the stub of generate function and its usage

- write generate function

Solution¶

from collections import defaultdict

from random import choice

words = (word.lower() for word in gutenberg.words() if word.isalpha())

trigrams = nltk.trigrams(words)

mapper = defaultdict(list)

for a, b, c in trigrams:

    mapper[a, b].append(c)

def generate(word1, word2, N):

    sentence = [word1, word2]

    for i in range(N):

        new_word = choice(mapper[word1, word2])

        sentence.append(new_word)

        word1, word2 = word2, new_word

    return " ".join(sentence)

# word1, word2 = choice(list(mapper.keys())) # use for random results

word1, word2 = 'i', 'can'

generate(word1, word2, 30)

u'i can make me say not ye that speak evil against you and i fear this glorious thing is against them since by many a good thousand a year two thousand pounds'
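One case the algorithm does not mention is a dead end: a pair of words that only occurs at the very end of the text has no recorded successor, and random.choice raises IndexError on an empty list. The sketch below is one possible way to handle this, written against a hypothetical toy corpus so that it is self-contained. The helper build_mapper and the early break are assumptions for illustration, not part of the original solution.

```python
import random
from collections import defaultdict

def build_mapper(tokens):
    """Map each pair of consecutive words to the list of words that follow it."""
    mapper = defaultdict(list)
    for a, b, c in zip(tokens, tokens[1:], tokens[2:]):
        mapper[a, b].append(c)
    return mapper

def generate(mapper, word1, word2, n):
    """Emit up to n extra words, stopping early when the current pair has no successor."""
    sentence = [word1, word2]
    for _ in range(n):
        # .get() avoids inserting an empty list for unseen pairs into the defaultdict.
        followers = mapper.get((word1, word2))
        if not followers:  # dead end: nothing ever follows this pair
            break
        new_word = random.choice(followers)
        sentence.append(new_word)
        word1, word2 = word2, new_word
    return ' '.join(sentence)

# Hypothetical toy corpus, only here to keep the sketch runnable.
tokens = 'the cat sat on the mat and the cat ran'.split()
mapper = build_mapper(tokens)
seed = random.choice(list(mapper.keys()))  # list(...) is required in Python 3
print(generate(mapper, seed[0], seed[1], 10))
```

With a corpus as large as gutenberg, dead ends are rare, but with small texts they show up quickly.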

Exercise¶

Write a function find_language that takes a word and returns a list of languages that this word may belong to. Use the udhr corpus. Narrow down your search scope to files in Latin1 encoding. Look up the words one and ein. New concepts introduced in this exercise:

- str.endswith

- and boolean operator

Solution¶

from nltk.corpus import udhr

def find_language(word):

    word = word.lower()

    return [

        language

        for language in udhr.fileids()

        if language.endswith('Latin1')

        and word in udhr.words(language)

    ]

find_language('one')

[u'English-Latin1', u'NigerianPidginEnglish-Latin1', u'Picard-Latin1']

find_language('ein')

[u'Faroese-Latin1',

u'German_Deutsch-Latin1',

u'Norwegian_Norsk-Nynorsk-Latin1']

1.2. Conditional Frequency Distributions¶

Frequency distribution¶

A frequency distribution tells you how often a given word appears in a corpus. The nltk library has a built-in class to compute frequency distributions:

fd = nltk.FreqDist(gutenberg.words('bible-kjv.txt'))

How many times does the word the appear?

fd['the']

62103

What percentage of all words is the word the?

fd.freq('the') * 100

6.1448329497533285

What are the most common 10 words?

fd.most_common(10)

[(u',', 70509),

(u'the', 62103),

(u':', 43766),

(u'and', 38847),

(u'of', 34480),

(u'.', 26160),

(u'to', 13396),

(u'And', 12846),

(u'that', 12576),

(u'in', 12331)]
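The three queries above (absolute count, relative frequency, most common items) can be mimicked with collections.Counter from the standard library, which is the class that FreqDist builds on in NLTK 3. The following is a minimal sketch on a hypothetical sentence. The relative frequency is computed by hand here, on the assumption that fd.freq(w) is the count of w divided by the total number of tokens.

```python
from collections import Counter

tokens = 'the cat and the dog and the bird'.split()  # hypothetical sample
counts = Counter(tokens)

the_count = counts['the']                    # absolute count, like fd['the']
the_freq = the_count / float(len(tokens))    # relative frequency, like fd.freq('the')
top_two = counts.most_common(2)              # like fd.most_common(2)

print(the_count)
print(the_freq)
print(top_two)
```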

If you’re using Jupyter Notebook (formerly known as IPython Notebook), include the following line so that plots are displayed inline:

%matplotlib inline

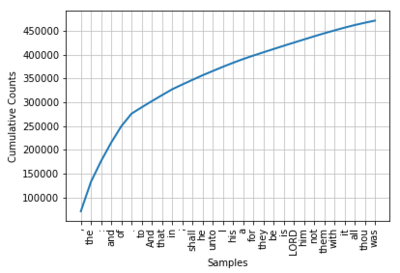

Let’s generate a cumulative frequency plot for the most common words:

fd.plot(30, cumulative=True)

Exercise¶

Find all words that occur at least ten times in the brown corpus.

Solution¶

from nltk.corpus import brown

fd = nltk.FreqDist(brown.words())

frequent_words = [word

                  for word, frequency in fd.items()

                  if frequency >= 10]

frequent_words[:20]

[u'woods',

u'hanging',

u'Western',

u'co-operation',

u'bringing',

u'wooden',

u'non-violent',

u'inevitably',

u'errors',

u'cooking',

u'Hamilton',

u'College',

u'Foundation',

u'kids',

u'climbed',

u'controversy',

u'rebel',

u'golden',

u'Harvey',

u'stern']

Exercise¶

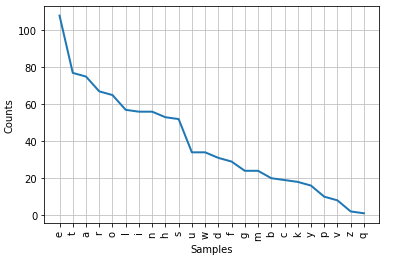

How frequently do letters appear in English text? Use the swadesh corpus.

Solution¶

Let’s see how the corpus is structured:

from nltk.corpus import swadesh

swadesh.fileids()

[u'be',

u'bg',

u'bs',

u'ca',

u'cs',

u'cu',

u'de',

u'en',

u'es',

u'fr',

u'hr',

u'it',

u'la',

u'mk',

u'nl',

u'pl',

u'pt',

u'ro',

u'ru',

u'sk',

u'sl',

u'sr',

u'sw',

u'uk']

Each file contains a list of common words in one language.

text = swadesh.raw('en')

# Filter out punctuation, spaces etc.

letters = [letter for letter in text.lower() if letter.isalpha()]

fd = nltk.FreqDist(letters)

fd.plot()

Exercise¶

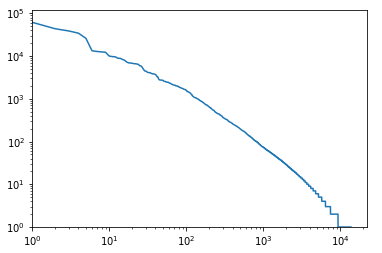

Zipf’s Law states that the frequency of a word is inversely proportional to its rank. Does this law hold for English?

Hint: use logarithmic x and y axes when plotting number of occurrences against rank.

New concepts introduced in this exercise:

- plotting with matplotlib: xscale(‘log’), yscale(‘log’), plot(array)

Solution¶

fd = nltk.FreqDist(gutenberg.words('bible-kjv.txt'))

occurrences = [o for word, o in fd.most_common()]

from matplotlib import pylab

pylab.xscale('log')

pylab.yscale('log')

pylab.plot(occurrences)

[<matplotlib.lines.Line2D at 0x15fe490c>]
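If frequency is inversely proportional to rank, the log-log plot is a straight line with a slope close to -1. Besides judging the plot by eye, you can estimate that slope numerically. The sketch below fits a least-squares line using only the standard library. The function name loglog_slope and the synthetic counts are assumptions made for illustration; no result for the Bible text is claimed here.

```python
import math

def loglog_slope(counts):
    """Least-squares slope of log(count) against log(rank).

    counts must be sorted in descending order, so the first item has rank 1.
    """
    xs = [math.log(rank) for rank in range(1, len(counts) + 1)]
    ys = [math.log(c) for c in counts]
    n = float(len(xs))
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Synthetic, perfectly Zipfian counts (count = 1000 / rank), for illustration only.
ideal = [1000.0 / rank for rank in range(1, 51)]
print(round(loglog_slope(ideal), 3))
```

To apply it to real data, pass the list of counts taken from fd.most_common(), which is already sorted from most to least frequent.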

Conditional frequency distribution¶

A conditional frequency distribution is a collection of frequency distributions, each one for a different “condition”. The condition is usually the category of a text.

nltk provides ConditionalFreqDist, which accepts a list of (category, word) pairs, in contrast to FreqDist, which simply accepts a list of words.

We’ll work on the brown corpus, where files have categories:

from nltk.corpus import brown

brown.categories()

[u'adventure',

u'belles_lettres',

u'editorial',

u'fiction',

u'government',

u'hobbies',

u'humor',

u'learned',

u'lore',

u'mystery',

u'news',

u'religion',

u'reviews',

u'romance',

u'science_fiction']

Let’s compute the conditional frequency distribution:

from nltk.corpus import brown

cfd = nltk.ConditionalFreqDist(

    (category, word)

    for category in brown.categories()

    for word in brown.words(categories=category))

As you can see, the conditions are indeed the categories:

cfd.conditions()

[u'mystery',

u'belles_lettres',

u'humor',

u'government',

u'fiction',

u'reviews',

u'religion',

u'romance',

u'science_fiction',

u'adventure',

u'editorial',

u'hobbies',

u'lore',

u'news',

u'learned']

How many times does the word love appear in romances?

cfd['romance']['love']

32
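Conceptually, a conditional frequency distribution is a dictionary that maps each condition to its own frequency distribution. The following pure-Python sketch illustrates that idea with defaultdict and Counter. The function conditional_freq and the sample pairs are hypothetical and only approximate what ConditionalFreqDist does.

```python
from collections import Counter, defaultdict

def conditional_freq(pairs):
    """Build {condition: Counter of samples} from (condition, sample) pairs."""
    dist = defaultdict(Counter)
    for condition, sample in pairs:
        dist[condition][sample] += 1
    return dist

# Hypothetical (category, word) pairs standing in for a categorized corpus.
pairs = [('romance', 'love'), ('romance', 'love'), ('romance', 'heart'),
         ('news', 'vote'), ('news', 'love')]
toy_cfd = conditional_freq(pairs)

print(sorted(toy_cfd))              # the conditions
print(toy_cfd['romance']['love'])   # count of 'love' under the 'romance' condition
```

The double lookup toy_cfd[condition][sample] mirrors the cfd['romance']['love'] query used above.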

1.3. Counting Words by Genre¶

Let’s display more data. How many times do the most common words appear in different genres?

fd = nltk.FreqDist(brown.words())

words_and_counts = fd.most_common(10)

words = [x[0] for x in words_and_counts]

cfd.tabulate(samples=words)

                   the      ,      .     of    and     to      a     in   that     is

      adventure   3370   3488   4057   1322   1622   1309   1354    847    494     98

 belles_lettres   9726   9166   6397   6289   4282   4084   3308   3089   1896   1799

      editorial   3508   2766   2481   1976   1302   1554   1095   1001    578    744

        fiction   3423   3654   3639   1419   1696   1489   1281    916    530    144

     government   4143   3405   2493   3031   1923   1829    867   1319    489    649

        hobbies   4300   3849   3453   2390   2144   1797   1737   1427    514    959

          humor    930   1331    877    515    512    463    505    334    241    117

        learned  11079   8242   6773   7418   4237   3882   3215   3644   1695   2403

           lore   6328   5519   4367   3668   2758   2530   2304   2001    984   1007

        mystery   2573   2805   3326    903   1215   1284   1136    658    494    116

           news   5580   5188   4030   2849   2146   2116   1993   1893    802    732

       religion   2295   1913   1382   1494    921    882    655    724    475    533

        reviews   2048   2318   1549   1299   1103    706    874    656    336    513

        romance   2758   3899   3736   1186   1776   1502   1335    875    583    150

science_fiction    652    791    786    321    278    305    222    152    126     47

Exercise¶

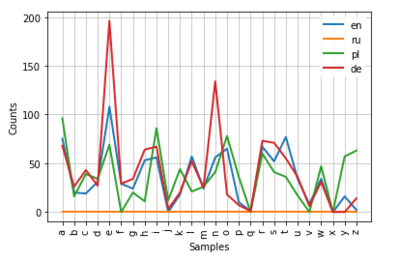

How frequently do letters appear in different languages? Use the swadesh corpus. New concepts introduced in this exercise:

- list comprehension with multiple for clauses

- cfd.tabulate(conditions=...) and cfd.plot(conditions=...)

Solution¶

cfd = nltk.ConditionalFreqDist(

    (lang, letter)

    for lang in swadesh.fileids()

    for letter in swadesh.raw(lang).lower()

    if letter.isalpha())

cfd.tabulate(samples='abcdefghijklmnopqrstuvwxyz')

a b c d e f g h i j k l m n o p q r s t u v w x y z

be 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

bg 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

bs 124 16 13 31 71 0 21 7 117 46 43 37 22 40 77 29 0 57 46 95 36 37 0 0 0 16

ca 149 16 48 23 115 13 30 3 49 7 0 104 34 57 65 39 10 149 53 60 59 15 0 6 6 0

cs 67 13 27 36 68 0 0 38 25 12 40 45 27 37 69 32 0 43 39 107 26 36 0 0 11 20

cu 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

de 68 26 43 27 196 29 34 64 67 3 20 52 25 134 18 7 1 73 71 55 36 6 31 0 0 14

en 75 20 19 31 108 29 24 53 56 0 18 57 24 56 65 10 1 67 52 77 34 8 34 0 16 2

es 158 19 56 42 141 10 18 21 59 13 0 69 32 60 140 40 9 139 64 49 46 21 0 0 2 10

fr 63 13 44 27 181 19 22 14 79 7 0 53 32 72 81 32 9 135 59 57 76 23 0 3 1 1

hr 121 16 13 32 71 0 20 7 117 46 42 37 22 41 80 29 0 56 46 96 35 37 0 0 0 16

it 130 17 76 37 174 18 36 13 105 0 0 53 30 74 135 30 8 121 57 70 45 22 0 0 0 2

la 108 15 50 39 169 17 25 6 109 0 0 56 40 69 48 30 15 132 109 49 113 30 0 7 0 0

mk 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

nl 73 20 15 51 182 4 39 25 65 20 27 48 23 121 70 13 0 72 42 62 29 37 35 0 0 21

pl 96 16 39 34 69 0 20 11 86 12 44 21 26 41 78 36 0 60 41 36 17 0 47 0 57 63

pt 189 19 59 44 158 20 32 27 70 5 0 51 55 65 157 43 14 187 72 63 63 31 0 5 0 5

ro 214 24 79 35 147 20 32 10 92 3 0 44 47 84 52 56 0 100 60 83 79 16 0 1 0 7

ru 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

sk 91 11 21 31 83 1 1 30 51 6 48 34 26 40 68 32 0 59 45 45 20 41 0 0 19 16

sl 94 21 8 32 104 0 17 13 112 23 44 45 25 41 76 29 0 60 38 93 17 34 0 0 0 20

sr 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

sw 121 17 6 43 47 21 42 20 35 12 37 51 40 73 25 8 0 78 60 73 25 33 0 0 12 0

uk 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

You can even plot the results:

cfd.plot(samples='abcdefghijklmnopqrstuvwxyz',

         conditions=['en', 'ru', 'pl', 'de'])

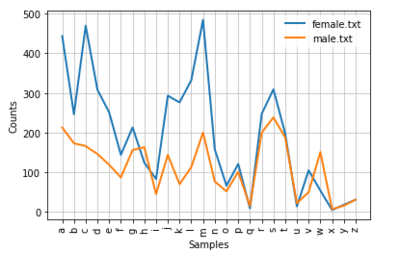

Exercise¶

Which initial letters are more frequent for males versus females? Use names corpus.

New concepts introduced in this exercise:

- str[0]

Solution¶

from nltk.corpus import names

cfd = nltk.ConditionalFreqDist(

    (gender, name[0].lower())

    for gender in names.fileids()

    for name in names.words(gender))

cfd.plot()

Exercise¶

What is the lexical diversity of different genres? Lexical diversity is the ratio of the number of words to the number of unique words. That is, it’s the average number of times each word appears in the text.

New concepts introduced in this exercise:

- the 3/2==1 issue (integer division in Python 2)

Solution¶

def get_lexical_diversity(words):

    return len(words) / float(len(set(words)))

for category in brown.categories():

    words = brown.words(categories=[category])

    diversity = get_lexical_diversity(words)

    print('{:5.2f} {}'.format(diversity, category))

7.81 adventure

9.40 belles_lettres

6.23 editorial

7.36 fiction

8.57 government

6.90 hobbies

4.32 humor

10.79 learned

7.61 lore

8.19 mystery

6.99 news

6.18 religion

4.72 reviews

8.28 romance

4.48 science_fiction

1.4. Creating Own Corpus¶

A corpus is just a collection of files. You can easily create your own corpus by pointing a corpus reader at a directory and giving it a pattern that matches the files making up your corpus. It’s wise to keep all the files in one directory.

my_corpus = nltk.corpus.PlaintextCorpusReader('/home/chris/nltk_data/corpora/abc/', '.*')

my_corpus.fileids()

['README', 'rural.txt', 'science.txt']

Your own corpora have access to all the methods available in the standard corpora:

my_corpus.words()

[u'Australian', u'Broadcasting', u'Commission', ...]

1.5. Pronouncing Dictionary¶

A pronouncing dictionary is a lexical resource that gives you the pronunciation of each word. The pronunciations were designed to be used by speech synthesizers.

entries = nltk.corpus.cmudict.entries()

len(entries)

133737

The entries are pairs of a word and its pronunciation. A pronunciation is a list of phones represented as strings. The interpretation of the phones is described in more detail on Wikipedia.

for entry in entries[39943:39951]:

    print(entry)

(u'explorer', [u'IH0', u'K', u'S', u'P', u'L', u'AO1', u'R', u'ER0'])

(u'explorers', [u'IH0', u'K', u'S', u'P', u'L', u'AO1', u'R', u'ER0', u'Z'])

(u'explores', [u'IH0', u'K', u'S', u'P', u'L', u'AO1', u'R', u'Z'])

(u'exploring', [u'IH0', u'K', u'S', u'P', u'L', u'AO1', u'R', u'IH0', u'NG'])

(u'explosion', [u'IH0', u'K', u'S', u'P', u'L', u'OW1', u'ZH', u'AH0', u'N'])

(u'explosions', [u'IH0', u'K', u'S', u'P', u'L', u'OW1', u'ZH', u'AH0', u'N', u'Z'])

(u'explosive', [u'IH0', u'K', u'S', u'P', u'L', u'OW1', u'S', u'IH0', u'V'])

(u'explosively', [u'EH2', u'K', u'S', u'P', u'L', u'OW1', u'S', u'IH0', u'V', u'L', u'IY0'])
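In these pronunciations, vowel phones end with a digit that marks stress (1 for primary stress, 2 for secondary stress, 0 for no stress), while consonants carry no digit. That makes it easy to count syllables or read off a stress pattern. The helper names below are hypothetical and the sketch uses a pronunciation copied from the output above, so it runs without the corpus.

```python
def count_syllables(phones):
    """Count vowel phones, i.e. those ending with a stress digit (0, 1 or 2)."""
    return sum(1 for phone in phones if phone[-1].isdigit())

def stress_pattern(phones):
    """Return the stress digits of the vowels in order, as a string."""
    return ''.join(phone[-1] for phone in phones if phone[-1].isdigit())

# Pronunciation copied from the 'explosion' entry shown above.
explosion = ['IH0', 'K', 'S', 'P', 'L', 'OW1', 'ZH', 'AH0', 'N']

print(count_syllables(explosion))
print(stress_pattern(explosion))
```

For explosion this gives three syllables with the primary stress on the second one.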

1.6. Shoebox and Toolbox Lexicons¶

Toolbox, previously known as Shoebox, is one of the most popular tools used by linguists. A Toolbox file lets you associate as much data with each word as you want. On the other hand, the loose structure of Toolbox files makes them hard to process.

Each entry in the file represents one word. For each word, there is a dictionary of information about it. The keys of this dictionary say what type of data each item is; for example, ps stands for part of speech. The values are the actual data. The dictionary is represented as a list of pairs instead of a plain Python dictionary, because the same key can appear more than once (see the repeated ex, xp and xe fields below).

from nltk.corpus import toolbox

toolbox.entries('rotokas.dic')[:2]

[(u'kaa',

[(u'ps', u'V'),

(u'pt', u'A'),

(u'ge', u'gag'),

(u'tkp', u'nek i pas'),

(u'dcsv', u'true'),

(u'vx', u'1'),

(u'sc', u'???'),

(u'dt', u'29/Oct/2005'),

(u'ex', u'Apoka ira kaaroi aioa-ia reoreopaoro.'),

(u'xp', u'Kaikai i pas long nek bilong Apoka bikos em i kaikai na toktok.'),

(u'xe', u'Apoka is gagging from food while talking.')]),

(u'kaa',

[(u'ps', u'V'),

(u'pt', u'B'),

(u'ge', u'strangle'),

(u'tkp', u'pasim nek'),

(u'arg', u'O'),

(u'vx', u'2'),

(u'dt', u'07/Oct/2006'),

(u'ex', u'Rera rauroro rera kaarevoi.'),

(u'xp', u'Em i holim pas em na nekim em.'),

(u'xe', u'He is holding him and strangling him.'),

(u'ex',

u'Iroiro-ia oirato okoearo kaaivoi uvare rirovira kaureoparoveira.'),

(u'xp',

u'Ol i pasim nek bilong man long rop bikos em i save bikhet tumas.'),

(u'xe',

u"They strangled the man's neck with rope because he was very stubborn and arrogant."),

(u'ex',

u'Oirato okoearo kaaivoi iroiro-ia. Uva viapau uvuiparoi ra vovouparo uva kopiiroi.'),

(u'xp',

u'Ol i pasim nek bilong man long rop. Olsem na em i no pulim win olsem na em i dai.'),

(u'xe',

u"They strangled the man's neck with a rope. And he couldn't breathe and he died.")])]
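Because a marker such as ex can appear several times in one entry, converting the list of pairs with dict() would silently keep only the last value. A possible alternative is to group the values of each marker into a list. The sketch below assumes a hypothetical helper group_fields and uses an abridged copy of the second kaa entry shown above.

```python
from collections import defaultdict

def group_fields(fields):
    """Collect the values of each marker into a list, preserving their order."""
    grouped = defaultdict(list)
    for marker, value in fields:
        grouped[marker].append(value)
    return grouped

# Abridged from the second 'kaa' entry shown above.
fields = [('ps', 'V'), ('ge', 'strangle'),
          ('ex', 'Rera rauroro rera kaarevoi.'),
          ('xe', 'He is holding him and strangling him.'),
          ('ex', 'Iroiro-ia oirato okoearo kaaivoi uvare rirovira kaureoparoveira.')]
grouped = group_fields(fields)

print(grouped['ps'])
print(len(grouped['ex']))
```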

1.7. Senses and Synonyms¶

Let’s consider the following sentences:

Benz is credited with the invention of the motorcar.

Benz is credited with the invention of the automobile.

Both sentences have exactly the same meaning, because motorcar and automobile are synonyms.

WordNet is a semantically oriented dictionary of English. It’s aware that some words are synonyms.

from nltk.corpus import wordnet as wn

We can find all synsets of motorcar. A synset is a collection of synonyms.

wn.synsets('motorcar')

[Synset('car.n.01')]

Let’s see what the synonyms in this synset are:

wn.synset('car.n.01').lemma_names()

[u'car', u'auto', u'automobile', u'machine', u'motorcar']

WordNet contains much more data, such as usage examples for a given synset:

wn.synset('car.n.01').examples()

[u'he needs a car to get to work']

And even a definition:

wn.synset('car.n.01').definition()

u'a motor vehicle with four wheels; usually propelled by an internal combustion engine'

The word car is ambiguous and belongs to multiple synsets:

for synset in wn.synsets('car'):

    print("{} -> {}".format(

        synset.name(),

        ", ".join(synset.lemma_names())))

car.n.01 -> car, auto, automobile, machine, motorcar

car.n.02 -> car, railcar, railway_car, railroad_car

car.n.03 -> car, gondola

car.n.04 -> car, elevator_car

cable_car.n.01 -> cable_car, car

1.8. Hierarchies¶

WordNet is aware of hierarchies of words.

Hyponyms are more specific concepts of a general concept. For example, ambulance is a hyponym of car.

motorcar = wn.synset('car.n.01')

hyponyms = motorcar.hyponyms()

hyponyms[0]

Synset('ambulance.n.01')

We can display all words that are more specific than motorcar:

sorted(lemma.name() for synset in hyponyms for lemma in synset.lemmas())

[u'Model_T',

u'S.U.V.',

u'SUV',

u'Stanley_Steamer',

u'ambulance',

u'beach_waggon',

u'beach_wagon',

u'bus',

u'cab',

u'compact',

u'compact_car',

u'convertible',

u'coupe',

u'cruiser',

u'electric',

u'electric_automobile',

u'electric_car',

u'estate_car',

u'gas_guzzler',

u'hack',

u'hardtop',

u'hatchback',

u'heap',

u'horseless_carriage',

u'hot-rod',

u'hot_rod',

u'jalopy',

u'jeep',

u'landrover',

u'limo',

u'limousine',

u'loaner',

u'minicar',

u'minivan',

u'pace_car',

u'patrol_car',

u'phaeton',

u'police_car',

u'police_cruiser',

u'prowl_car',

u'race_car',

u'racer',

u'racing_car',

u'roadster',

u'runabout',

u'saloon',

u'secondhand_car',

u'sedan',

u'sport_car',

u'sport_utility',

u'sport_utility_vehicle',

u'sports_car',

u'squad_car',

u'station_waggon',

u'station_wagon',

u'stock_car',

u'subcompact',

u'subcompact_car',

u'taxi',

u'taxicab',

u'tourer',

u'touring_car',

u'two-seater',

u'used-car',

u'waggon',

u'wagon']

A hypernym is the opposite of a hyponym. For example, car is a hypernym of ambulance.

ambulance = wn.synset('ambulance.n.01')

ambulance.hypernyms()

[Synset('car.n.01')]

Exercise¶

What percentage of noun synsets have no hyponyms?

You can get all noun synsets using wn.all_synsets('n').

New concepts introduced in this exercise:

- sum()

- wn.all_synsets

Solution¶

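total = sum(1 for x in wn.all_synsets('n'))

with_hyponyms = sum(1 for n in wn.all_synsets('n') if n.hyponyms())

with_hyponyms / float(total) * 100

20.328807160689276

About 20.3% of noun synsets have at least one hyponym, so the remaining 79.7% or so have none.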

Exercise¶

What is the branching factor of the noun hierarchy? That is, how many hyponyms does each noun synset have on average?

Solution¶

hyponyms = [len(n.hyponyms()) for n in wn.all_synsets('n')]

sum(hyponyms) / float(len(hyponyms))

0.9237045606770992

1.9. Lexical Relations: Meronyms, Holonyms¶

A word is a meronym of another word if the former is a component of the latter. For example, a trunk is a part of a tree, so trunk is a meronym of tree.

wn.synset('tree.n.01').part_meronyms()

[Synset('burl.n.02'),

Synset('crown.n.07'),

Synset('limb.n.02'),

Synset('stump.n.01'),

Synset('trunk.n.01')]

wn.synset('tree.n.01').substance_meronyms()

[Synset('heartwood.n.01'), Synset('sapwood.n.01')]

A holonym is the opposite of a meronym. Holonyms of a word are the things it’s contained in. For example, a collection of trees forms a forest, so forest is a holonym of tree.

wn.synset('tree.n.01').member_holonyms()

[Synset('forest.n.01')]

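Note that WordNet distinguishes several kinds of meronymy (part, substance and member), and each kind has its own holonym relation. trunk is a part meronym of tree, so tree is a part holonym of trunk, not a member holonym. That is why member_holonyms() returns an empty list here: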

wn.synset('trunk.n.01').member_holonyms()

[]

1.10. Semantic Similarity¶

We have seen that WordNet is aware of semantic relations between words.

All words form a network where each word is a node and the hyponym and hypernym relations are edges.

If two synsets are closely related, they share a very specific hypernym:

right_whale = wn.synset('right_whale.n.01')

orca = wn.synset('orca.n.01')

right_whale.lowest_common_hypernyms(orca)

[Synset('whale.n.02')]

And that hypernym is low down in the hierarchy:

wn.synset('whale.n.02').min_depth()

13

In contrast, two synsets that are not related at all share a very general hypernym:

novel = wn.synset('novel.n.01')

right_whale.lowest_common_hypernyms(novel)

[Synset('entity.n.01')]

This hypernym is actually the root of the hierarchy:

wn.synset('entity.n.01').min_depth()

0

Exercise¶

Given a list of words, find the similarity of each pair of synsets. Use the synset.path_similarity(another_synset) method.

New concepts introduced in this exercise:

- synset.path_similarity

- write solution without string formatting

- str.split

- str.join

- str.format

Solution¶

words = 'food fruit car'.split(' ')

synsets = [synset

           for word in words

           for synset in wn.synsets(word, 'n')]

for s in synsets:

    similarities = [s.path_similarity(t)*100 for t in synsets]

    row = ' '.join('{:3.0f}'.format(value) for value in similarities)

    print('{:20} {}'.format(s.name(), row))

food.n.01 100 20 10 9 10 8 8 9 8 8 8

food.n.02 20 100 10 9 10 8 8 9 8 8 8

food.n.03 10 10 100 7 8 10 6 7 7 7 7

fruit.n.01 9 9 7 100 10 6 8 9 8 8 8

yield.n.03 10 10 8 10 100 6 10 12 11 11 11

fruit.n.03 8 8 10 6 6 100 5 6 6 6 6

car.n.01 8 8 6 8 10 5 100 20 8 8 8

car.n.02 9 9 7 9 12 6 20 100 10 10 10

car.n.03 8 8 7 8 11 6 8 10 100 33 33

car.n.04 8 8 7 8 11 6 8 10 33 100 33

cable_car.n.01 8 8 7 8 11 6 8 10 33 33 100
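The numbers in this table are easier to read once you know how the score is derived: path similarity is 1 / (d + 1), where d is the number of edges on the shortest path between the two synsets in the hypernym hierarchy. The sketch below only illustrates this formula; the function name is hypothetical and no WordNet lookup is performed.

```python
def path_similarity_from_distance(distance):
    """Score for two synsets whose shortest hypernym path has `distance` edges."""
    return 1.0 / (distance + 1)

for distance in (0, 2, 4, 9):
    score = path_similarity_from_distance(distance) * 100
    print('{} edges -> {:3.0f}'.format(distance, score))
```

So a value of 100 means the two synsets are identical, 33 means they are two edges apart, 20 means four edges apart, and 10 means nine edges apart.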

2. Processing Raw Text¶

In this chapter, we need the following imports:

import nltk, re

2.0. Accessing raw text¶

Downloading files¶

from urllib import urlopen  # Python 2; in Python 3 use: from urllib.request import urlopen

url = "http://www.gutenberg.org/files/1112/1112.txt"

raw = urlopen(url).read()

print(raw[:200])

The Project Gutenberg EBook of Romeo and Juliet, by William Shakespeare

This eBook is for the use of anyone anywhere at no cost and with

almost no restrictions whatsoever. You may copy it, give i

As you can see, there is some metadata at the beginning of the file.

print(raw[3040:4290])

THE PROLOGUE

Enter Chorus.

Chor. Two households, both alike in dignity,

In fair Verona, where we lay our scene,

From ancient grudge break to new mutiny,

Where civil blood makes civil hands unclean.

From forth the fatal loins of these two foes

A pair of star-cross'd lovers take their life;

Whose misadventur'd piteous overthrows

Doth with their death bury their parents' strife.

The fearful passage of their death-mark'd love,

And the continuance of their parents' rage,

Which, but their children's end, naught could remove,

Is now the two hours' traffic of our stage;

The which if you with patient ears attend,

What here shall miss, our toil shall strive to mend.

[Exit.]

ACT I. Scene I.

Verona. A public place.

Enter Sampson and Gregory (with swords and bucklers) of the house

of Capulet.

Samp. Gregory, on my word, we'll not carry coals.

Greg. No, for then we should be colliers.

Samp. I mean, an we be in choler, we'll draw.

Greg. Ay, while you live, draw your neck out of collar.

Samp. I strike quickly, being moved.

Let’s analyze this text

tokens = nltk.word_tokenize(raw)

tokens[:20]

['The',

'Project',

'Gutenberg',

'EBook',

'of',

'Romeo',

'and',

'Juliet',

',',

'by',

'William',

'Shakespeare',

'This',

'eBook',

'is',

'for',

'the',

'use',

'of',

'anyone']

Let’s find collocations, that is, sequences of words that co-occur more often than would be expected by chance.

text = nltk.Text(tokens)

text.collocations(num=50)

Project Gutenberg-tm; Project Gutenberg; Literary Archive; Gutenberg-

tm electronic; Archive Foundation; electronic works; Gutenberg

Literary; United States; thou art; thou wilt; William Shakespeare;

thou hast; art thou; public domain; electronic work; Friar Laurence;

County Paris; set forth; Gutenberg-tm License; Chief Watch; thousand

times; PROJECT GUTENBERG; good night; Scene III; Enter Juliet;

copyright holder; Enter Romeo; Make haste; Complete Works; pray thee;

Enter Friar; Wilt thou; Friar John; upon thy; free distribution; woful

day; paragraph 1.F.3; Lammas Eve; Plain Vanilla; considerable effort;

intellectual property; restrictions whatsoever; solely singular;

derivative works; Enter Old; honest gentleman; Thou shalt; Enter

Benvolio; Art thou; Hast thou

2.1. Printing¶

In Python 2, print is a statement (a special keyword):

text = 'In 2015, e-commerce sales by businesses with 10 or more employees'

print text

In 2015, e-commerce sales by businesses with 10 or more employees

In Python 3, print is a function and parentheses are required:

print(text)

In 2015, e-commerce sales by businesses with 10 or more employees

The above code also works in Python 2, where the parentheses simply group the single expression being printed.

2.2. Truncating¶

Truncating limits a string to a maximum length: we keep only the first n characters.

print(text[:20])

In 2015, e-commerce

2.3. Extracting Parts of String¶

This is also called slicing:

print(text[5:20])

15, e-commerce

This also works for lists:

l = ['first', 'second', 'third', '4th', '5th']

print(l[2:4])

['third', '4th']

2.4. Accessing Individual Characters¶

This works in the same way as accessing individual elements in a list:

print(text[5])

1

Or you can get the n-th element from the end:

print(text[-5])

o

2.5. Searching, Replacing, Splitting, Joining¶

Searching¶

text.find('sales')

20

or

'sales' in text

True

Replacing¶

text.replace('2015', '2016')

'In 2016, e-commerce sales by businesses with 10 or more employees'

Splitting¶

tokens = text.split(' ')

tokens

['In',

'2015,',

'e-commerce',

'sales',

'by',

'businesses',

'with',

'10',

'or',

'more',

'employees']

Joining¶

' '.join(tokens)

'In 2015, e-commerce sales by businesses with 10 or more employees'

or

print('\n'.join(tokens))

In

2015,

e-commerce

sales

by

businesses

with

10

or

more

employees

2.6. Regular Expressions¶

The simplest kinds of pattern matching can be handled with string methods:

'worked'.endswith('ed')

True

However, if you want to do something more complex, you need a more powerful tool. Regular expressions come to the rescue!

We need the right module:

import re

Use PyRegex as a cheat sheet.

The three most important functions in the re module are search, match and findall.

We can use re.search function to look for a pattern anywhere inside a string.

help(re.search)

Help on function search in module re:

search(pattern, string, flags=0)

Scan through string looking for a match to the pattern, returning

a match object, or None if no match was found.

We can use re.match function to look for a pattern at the beginning of the string.

help(re.match)

Help on function match in module re:

match(pattern, string, flags=0)

Try to apply the pattern at the start of the string, returning

a match object, or None if no match was found.

There is also the re.findall function:

help(re.findall)

Help on function findall in module re:

findall(pattern, string, flags=0)

Return a list of all non-overlapping matches in the string.

If one or more groups are present in the pattern, return a

list of groups; this will be a list of tuples if the pattern

has more than one group.

Empty matches are included in the result.
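The difference between the three functions is easiest to see on a single string. The following is a small sketch using only the standard library; the sample word is an arbitrary choice for illustration.

```python
import re

word = 'rewarded'

found_suffix = re.search('ed$', word) is not None   # search() scans the whole string; $ pins the match to the end
starts_with_ed = re.match('ed', word) is not None   # match() only tries position 0, so this fails
starts_with_re = re.match('re', word) is not None   # this succeeds, because the word starts with 're'
vowels = re.findall('[aeiou]', word)                # every non-overlapping match, as a list of strings

print(found_suffix)
print(starts_with_ed)
print(starts_with_re)
print(vowels)
```

Note that search and match return a match object or None, which is why they work directly as conditions in a list comprehension, while findall always returns a list.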

Exercises¶

We will use words corpus:

wordlist = nltk.corpus.words.words('en')

Find all English words in the words corpus ending with ed (without using the endswith method):

wordlist = nltk.corpus.words.words('en')

[w for w in wordlist if re.search('ed$', w)][:10]

[u'abaissed',

u'abandoned',

u'abased',

u'abashed',

u'abatised',

u'abed',

u'aborted',

u'abridged',

u'abscessed',

u'absconded']

Suppose we have room in a crossword puzzle for an 8-letter word with j as its third letter and t as its sixth letter. Find the matching words.

[w for w in wordlist if re.search('^..j..t..$', w)][:10]

[u'abjectly',

u'adjuster',

u'dejected',

u'dejectly',

u'injector',

u'majestic',

u'objectee',

u'objector',

u'rejecter',

u'rejector']

The T9 system is used for entering text on mobile phones. Two or more words that are entered with the same sequence of keystrokes are known as textonyms. For example, both hole and golf are entered by pressing the sequence 4653. What other words could be produced with the same sequence?

[w for w in wordlist if re.search('^[ghi][mno][jlk][def]$', w)][:10]

[u'gold', u'golf', u'hold', u'hole']
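
The pattern above was written by hand for one key sequence. As a sketch, the same idea can be generalized by building one character class per keypress. The names T9_KEYS, t9_pattern and textonyms are hypothetical helpers, and the short sample list only stands in for the full wordlist.

```python
import re

# Standard phone keypad layout for the digits 2-9.
T9_KEYS = {
    '2': 'abc', '3': 'def', '4': 'ghi', '5': 'jkl',
    '6': 'mno', '7': 'pqrs', '8': 'tuv', '9': 'wxyz',
}

def t9_pattern(digits):
    """Build an anchored regex with one character class per keypress."""
    return '^' + ''.join('[%s]' % T9_KEYS[d] for d in digits) + '$'

def textonyms(digits, words):
    """Return the words that can be typed with the given key sequence."""
    pattern = re.compile(t9_pattern(digits))
    return [w for w in words if pattern.search(w)]

# Illustrative stand-in for the full wordlist.
sample = ['gold', 'golf', 'hold', 'hole', 'help', 'home']

print(t9_pattern('4653'))         # ^[ghi][mno][jkl][def]$
print(textonyms('4653', sample))  # ['gold', 'golf', 'hold', 'hole']
```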

Exercise¶

Now we’ll use the treebank corpus.

wsj = sorted(set(nltk.corpus.treebank.words()))

- Find all words that contain at least one digit.

- Find four-digit numbers.

- Find all integer numbers that have four or more digits.

- Find decimal numbers.

- Find words ending with ed or ing.

- Find words like black-and-white, father-in-law etc.

Solution¶

1. Find all words that contain at least one digit.

[w for w in wsj if re.search('[0-9]', w)][:10]

[u"'30s",

u"'40s",

u"'50s",

u"'80s",

u"'82",

u"'86",

u'-1',

u'-10',

u'-100',

u'-101']

2. Find four-digit numbers:

[w for w in wsj if re.search('^[0-9]{4}$', w)][:10]

[u'1614',

u'1637',

u'1787',

u'1901',

u'1903',

u'1917',

u'1925',

u'1929',

u'1933',

u'1934']

3. Find all integer numbers that have four or more digits.

[w for w in wsj if re.search('^[0-9]{4,}$', w)][:10]

[u'1614',

u'1637',

u'1787',

u'1901',

u'1903',

u'1917',

u'1925',

u'1929',

u'1933',

u'1934']

4. Find decimal numbers.

[w for w in wsj if re.search('^[0-9]+\.[0-9]+$', w)][:10]

[u'0.0085',

u'0.05',

u'0.1',

u'0.16',

u'0.2',

u'0.25',

u'0.28',

u'0.3',

u'0.4',

u'0.5']

5. Find words ending with ed or ing.

[w for w in wsj if re.search('(ed|ing)$', w)][:10]

[u'62%-owned',

u'Absorbed',

u'According',

u'Adopting',

u'Advanced',

u'Advancing',

u'Alfred',

u'Allied',

u'Annualized',

u'Anything']

6. Find words like black-and-white, father-in-law etc.

[w for w in wsj if re.search('^[a-z]+-[a-z]+-[a-z]+$', w)][:10]

[u'anti-morning-sickness',

u'black-and-white',

u'bread-and-butter',

u'built-from-kit',

u'cash-and-stock',

u'cents-a-unit',

u'computer-system-design',

u'day-to-day',

u'do-it-yourself',

u'easy-to-read']

2.7. Detecting Word Patterns¶

Example with .startswith.

Find all vowels in a word:

word = 'supercalifragilisticexpialidocious'

re.findall(r'[aeiou]', word)

['u', 'e', 'a', 'i', 'a', 'i', 'i', 'i', 'e', 'i', 'a', 'i', 'o', 'i', 'o', 'u']

len(re.findall(r'[aeiou]', word))

16

Exercise¶

What are the most common sequences of two or more vowels in the English language?

Use the entire treebank corpus. What is the format of the treebank corpus files?

Solution¶

wsj = sorted(set(nltk.corpus.treebank.words()))

fd = nltk.FreqDist(vs

                   for word in wsj

                   for vs in re.findall(r'[aeiou]{2,}', word))

fd.most_common()

[(u'io', 549),

(u'ea', 476),

(u'ie', 331),

(u'ou', 329),

(u'ai', 261),

(u'ia', 253),

(u'ee', 217),

(u'oo', 174),

(u'ua', 109),

(u'au', 106),

(u'ue', 105),

(u'ui', 95),

(u'ei', 86),

(u'oi', 65),

(u'oa', 59),

(u'eo', 39),

(u'iou', 27),

(u'eu', 18),

(u'oe', 15),

(u'iu', 14),

(u'ae', 11),

(u'eau', 10),

(u'uo', 8),

(u'oui', 6),

(u'ao', 6),

(u'uou', 5),

(u'eou', 5),

(u'uee', 4),

(u'aa', 3),

(u'ieu', 3),

(u'uie', 3),

(u'eei', 2),

(u'iao', 1),

(u'iai', 1),

(u'aii', 1),

(u'aiia', 1),

(u'eea', 1),

(u'ueui', 1),

(u'ooi', 1),

(u'oei', 1),

(u'ioa', 1),

(u'uu', 1),

(u'aia', 1)]
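
One detail of this solution is worth spelling out: the quantifier {2,} is greedy, so each run of vowels is counted once, as the longest possible sequence. The sketch below shows this with collections.Counter standing in for nltk.FreqDist; the word list is a made-up sample, not the treebank vocabulary.

```python
import re
from collections import Counter

# Made-up sample words; the solution above uses the treebank vocabulary.
words = ['beautiful', 'queue', 'audio', 'station', 'tree']

counts = Counter(vs
                 for word in words
                 for vs in re.findall(r'[aeiou]{2,}', word))

# 'beautiful' contributes 'eau' once, not 'ea' and 'au' separately.
print(counts['eau'], counts['ea'])  # 1 0
print(counts.most_common(1))        # [('io', 2)]
```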

Exercise¶

Extract all consonant-vowel sequences from the words of Rotokas, such as ka and si. Build a dictionary mapping each sequence to the list of words that contain it. New concepts introduced in this exercise:

- return value of re.findall

- two-letter strings can be interpreted as a pair

- build the mapping using a dictionary

- nltk.Index

- build the mapping using an index

Solution¶

rotokas_words = nltk.corpus.toolbox.words('rotokas.dic')

cvs = [cv

       for w in rotokas_words

       for cv in re.findall(r'[ptksvr][aeiou]', w)]

cfd = nltk.ConditionalFreqDist(cvs)

cfd.tabulate()

     a    e    i    o    u

k  418  148   94  420  173

p   83   31  105   34   51

r  187   63   84   89   79

s    0    0  100    2    1

t   47    8    0  148   37

v   93   27  105   48   49

Building the dictionary:

from collections import defaultdict

mapping = defaultdict(list)

for w in rotokas_words:

    for cv in re.findall(r'[ptksvr][aeiou]', w):

        mapping[cv].append(w)

mapping['su']

[u'kasuari']

Building the mapping using nltk.Index:

cv_word_pairs = [(cv, w)

                 for w in rotokas_words

                 for cv in re.findall(r'[ptksvr][aeiou]', w)]

cv_index = nltk.Index(cv_word_pairs)

cv_index['su']

[u'kasuari']
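
Both approaches produce the same mapping. Conceptually, an index built from (key, value) pairs behaves like a defaultdict(list) filled in a loop. The following is a minimal sketch of that idea using only the standard library; build_index is a hypothetical helper and the pairs are a small hand-made sample, so this is not meant as a description of how nltk.Index is implemented.

```python
from collections import defaultdict

def build_index(pairs):
    """Group the values of (key, value) pairs into lists, keyed by key."""
    index = defaultdict(list)
    for key, value in pairs:
        index[key].append(value)
    return index

# Hand-made sample of (consonant-vowel sequence, word) pairs.
pairs = [('ka', 'kasuari'), ('su', 'kasuari'), ('ri', 'kasuari'), ('ka', 'kaa')]

cv_index = build_index(pairs)
print(cv_index['su'])  # ['kasuari']
print(cv_index['ka'])  # ['kasuari', 'kaa']
```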

2.8. Stemming¶

The nltk library is well documented. You can learn a lot just by inspecting its modules and classes:

help(nltk.stem)

Help on package nltk.stem in nltk:

NAME

nltk.stem - NLTK Stemmers

FILE

/usr/local/lib/python2.7/dist-packages/nltk/stem/__init__.py

DESCRIPTION

Interfaces used to remove morphological affixes from words, leaving

only the word stem. Stemming algorithms aim to remove those affixes

required for eg. grammatical role, tense, derivational morphology

leaving only the stem of the word. This is a difficult problem due to

irregular words (eg. common verbs in English), complicated

morphological rules, and part-of-speech and sense ambiguities

(eg. ceil- is not the stem of ceiling).

StemmerI defines a standard interface for stemmers.

PACKAGE CONTENTS

api

isri

lancaster

porter

regexp

rslp

snowball

util

wordnet

Exercise¶

As an exercise in writing regular expressions, write a function that returns the stem of a given word.

New concepts in this exercise:

- more than one group in regular expressions; how to process them?

Solution¶

def stem(word):

    regexp = r'^(.*?)(ing|ly|ed|ious|ies|ive|es|s|ment)?$'

    stem, suffix = re.findall(regexp, word)[0]

    return stem

Results:

raw = """

DENNIS: Listen, strange women lying in ponds distributing swords

is no basis for a system of government. Supreme executive power derives from

a mandate from the masses, not from some farcical aquatic ceremony.

"""

tokens = nltk.word_tokenize(raw)

[stem(t) for t in tokens]

['DENNIS',

':',

'Listen',

',',

'strange',

'women',

'ly',

'in',

'pond',

'distribut',

'sword',

'i',

'no',

'basi',

'for',

'a',

'system',

'of',

'govern',

'.',

'Supreme',

'execut',

'power',

'deriv',

'from',

'a',

'mandate',

'from',

'the',

'mass',

',',

'not',

'from',

'some',

'farcical',

'aquatic',

'ceremony',

'.']
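
Two details of the regular expression used in stem are easy to miss: the stem group must be non-greedy (.*?), and re.findall returns a tuple per match when the pattern has more than one group. The sketch below illustrates both on a few hand-picked words; the variable names are only for illustration.

```python
import re

suffixes = r'(ing|ly|ed|ious|ies|ive|es|s|ment)?$'

# Non-greedy stem: the first group stays as short as possible,
# so the suffix group gets a chance to match.
non_greedy = re.findall(r'^(.*?)' + suffixes, 'processing')

# Greedy stem: .* swallows the whole word and the optional
# suffix group is left empty.
greedy = re.findall(r'^(.*)' + suffixes, 'processing')

# With two groups, findall yields one (stem, suffix) tuple per match.
ponies = re.findall(r'^(.*?)' + suffixes, 'ponies')

print(non_greedy)  # [('process', 'ing')]
print(greedy)      # [('processing', '')]
print(ponies)      # [('pon', 'ies')]
```

The same mechanism explains the odd results above: is becomes i and basis becomes basi because the s alternative matches their final letter.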

Builtin stemmers¶

We don’t have to write our own regular expressions. The nltk library has builtin stemmers:

[x for x in dir(nltk) if 'stemmer' in x.lower()]

['ISRIStemmer',

'LancasterStemmer',

'PorterStemmer',

'RSLPStemmer',

'RegexpStemmer',

'SnowballStemmer',

'StemmerI']

The most important one is PorterStemmer. It correctly handles words like lying (which is mapped to lie, not ly):

porter = nltk.PorterStemmer()

[porter.stem(t) for t in tokens]

[u'DENNI',

u':',

u'Listen',

u',',

u'strang',

u'women',

u'lie',

u'in',

u'pond',

u'distribut',

u'sword',

u'is',

u'no',

u'basi',

u'for',

u'a',

u'system',

u'of',

u'govern',

u'.',

u'Suprem',

u'execut',

u'power',

u'deriv',

u'from',

u'a',

u'mandat',

u'from',

u'the',

u'mass',

u',',

u'not',

u'from',

u'some',

u'farcic',

u'aquat',

u'ceremoni',

u'.']

2.9. Tokenization¶

Searching tokenized text¶

So far, we have searched within one long string. However, it is also possible to write regular expressions that search across multiple tokens, i.e. a list of words. Use the nltk.Text.findall method for this, not re.findall, which cannot handle this kind of pattern. In these patterns, each token is enclosed in angle brackets, and the parentheses select the part of the match that is printed.

moby = nltk.Text(nltk.corpus.gutenberg.words('melville-moby_dick.txt'))

moby.findall(r"<a> (<.*>) <man>")

monied; nervous; dangerous; white; white; white; pious; queer; good;

mature; white; Cape; great; wise; wise; butterless; white; fiendish;

pale; furious; better; certain; complete; dismasted; younger; brave;

brave; brave; brave
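
To get a feeling for how a token-level pattern can be matched, here is a simplified sketch that uses only the re module: the tokens are joined into one string with each token wrapped in angle brackets, and the pattern is rewritten so that . cannot cross a token boundary. The function token_findall is a hypothetical helper written for this illustration and the token list is made up; treat it as an approximation of the idea, not as the nltk implementation.

```python
import re

def token_findall(pattern, tokens):
    """Match an angle-bracket token pattern against a list of tokens."""
    # Encode the token list as '<tok1><tok2>...'.
    raw = ''.join('<' + t + '>' for t in tokens)
    # Whitespace in the pattern is only there for readability.
    pattern = re.sub(r'\s', '', pattern)
    # Treat each <...> as a non-capturing unit.
    pattern = pattern.replace('<', '(?:<(?:').replace('>', ')>)')
    # An unescaped '.' must not run past the end of a token.
    pattern = re.sub(r'(?<!\\)\.', '[^>]', pattern)
    hits = re.findall(pattern, raw)
    # Strip the outer brackets and split back into tokens.
    return [h[1:-1].split('><') for h in hits]

tokens = 'he was a brave man and a wise man'.split()
print(token_findall(r'<a> (<.*>) <man>', tokens))  # [['brave'], ['wise']]
```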

Exercise¶

Search for patterns like A and other Bs in the brown corpus.

Solution¶

from nltk.corpus import brown

brown_text = nltk.Text(brown.words())

brown_text.findall(r"<\w*> <and> <other> <\w*s>")

companies and other corporations; Union and other members; Wagner and

other officials; grillwork and other things; this and other units;

supervisors and other employees; suits and other misunderstandings;

dormitories and other buildings; ships and other submarines; Taylor

and other officers; chemicals and other products; amount and other

particulars; wife and other employes; Vietnam and other nations;

British and other replies; civic and other leaders; political and

other resources; Legion and other groups; this and other origins;

these and other matters; mink and other animals; These and other

figures; Ridge and other parts; Newman and other Tractarians; lawyers

and other managers; Awakening and other revivals; speed and other

activities; water and other liquids; tomb and other landmarks; Statues

and other monuments; pearls and other jewels; charts and other items;

roads and other features; figures and other objects; manure and other

fertilizers; these and other reasons; duration and other

circumstances; experience and other sources; television and other

mass; compromise and other factors; This and other fears; peoples and

other nations; head and other members; prints and other things; Bank

and other instrumentalities; candy and other presents; accepted and

other letters; schoolmaster and other privileges; proctors and other

officers; color and other varieties; Providence and other garages;

taxes and other revenues; Central and other railroads; this and other

departments; polls and other reports; ethnic and other lines;

management and other resources; loan and other provisions; India and

other countries; memoranda and other records; textile and other

industries; Leesona and other companies; Admissions and other issues;

military and other areas; demands and other factors; abstracts and

other compilations; iron and other metals; House and other places;

Bordel and other places; blues and other songs; oxygen and other

gases; structure and other buildings; sand and other girls; this and

other spots

Approaches to tokenization¶

So far, we have used the builtin nltk.word_tokenize function, which accepts a long string and returns a list of words. The docstring of the nltk.tokenize package describes other approaches:

help(nltk.tokenize)

Help on package nltk.tokenize in nltk:

NAME

nltk.tokenize - NLTK Tokenizer Package

FILE

/usr/local/lib/python2.7/dist-packages/nltk/tokenize/__init__.py

DESCRIPTION

Tokenizers divide strings into lists of substrings. For example,

tokenizers can be used to find the words and punctuation in a string:

>>> from nltk.tokenize import word_tokenize

>>> s = '''Good muffins cost $3.88\nin New York. Please buy me

... two of them.\n\nThanks.'''

>>> word_tokenize(s)

['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.',

'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

This particular tokenizer requires the Punkt sentence tokenization

models to be installed. NLTK also provides a simpler,

regular-expression based tokenizer, which splits text on whitespace

and punctuation:

>>> from nltk.tokenize import wordpunct_tokenize

>>> wordpunct_tokenize(s)

['Good', 'muffins', 'cost', '$', '3', '.', '88', 'in', 'New', 'York', '.',

'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

We can also operate at the level of sentences, using the sentence

tokenizer directly as follows:

>>> from nltk.tokenize import sent_tokenize, word_tokenize

>>> sent_tokenize(s)

['Good muffins cost $3.88\nin New York.', 'Please buy me\ntwo of them.', 'Thanks.']

>>> [word_tokenize(t) for t in sent_tokenize(s)]

[['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.'],

['Please', 'buy', 'me', 'two', 'of', 'them', '.'], ['Thanks', '.']]

Caution: when tokenizing a Unicode string, make sure you are not

using an encoded version of the string (it may be necessary to

decode it first, e.g. with s.decode("utf8").

NLTK tokenizers can produce token-spans, represented as tuples of integers

having the same semantics as string slices, to support efficient comparison

of tokenizers. (These methods are implemented as generators.)

>>> from nltk.tokenize import WhitespaceTokenizer

>>> list(WhitespaceTokenizer().span_tokenize(s))

[(0, 4), (5, 12), (13, 17), (18, 23), (24, 26), (27, 30), (31, 36), (38, 44),

(45, 48), (49, 51), (52, 55), (56, 58), (59, 64), (66, 73)]

There are numerous ways to tokenize text. If you need more control over

tokenization, see the other methods provided in this package.

For further information, please see Chapter 3 of the NLTK book.

PACKAGE CONTENTS

api

casual

mwe

punkt

regexp

sexpr

simple

stanford

stanford_segmenter

texttiling

treebank

util

FUNCTIONS

sent_tokenize(text, language='english')

Return a sentence-tokenized copy of text,

using NLTK's recommended sentence tokenizer

(currently PunktSentenceTokenizer

for the specified language).

:param text: text to split into sentences

:param language: the model name in the Punkt corpus

word_tokenize(text, language='english')

Return a tokenized copy of text,

using NLTK's recommended word tokenizer

(currently TreebankWordTokenizer

along with PunktSentenceTokenizer

for the specified language).

:param text: text to split into sentences

:param language: the model name in the Punkt corpus

2.10. Normalization of Text¶

Normalization of text involves not only stemming but also more basic operations. For example, we may want to convert text to lowercase so that the words the and The are treated as the same word.
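
The effect of lowercasing can be seen by counting tokens before and after normalization. This is a minimal sketch on a made-up token list, using collections.Counter from the standard library.

```python
from collections import Counter

# Made-up tokens for illustration.
tokens = ['The', 'cat', 'saw', 'the', 'other', 'cat', '.']

raw_counts = Counter(tokens)
normalized_counts = Counter(t.lower() for t in tokens)

print(raw_counts['the'], raw_counts['The'])     # 1 1
print(normalized_counts['the'])                 # 2
print(len(raw_counts), len(normalized_counts))  # 6 5
```

Lowercasing shrinks the vocabulary, but it also discards information, for example the difference between a proper noun and a common noun, so whether to apply it depends on the task.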

2.11. Word Segmentation (especially in Chinese)¶

Sentence Segmentation¶

So far, we have manipulated text at a low level, that is, at the level of single words. However, it is useful to operate on bigger structures, such as sentences. nltk supports sentence segmentation. For example, you can easily find the average sentence length in words (the result below is a whole number because / performs integer division on integers in Python 2):

len(nltk.corpus.brown.words()) / len(nltk.corpus.brown.sents())

20

There is a builtin function nltk.sent_tokenize that works in a similar way to nltk.word_tokenize:

raw = nltk.corpus.gutenberg.raw('austen-emma.txt')

sentences = nltk.sent_tokenize(raw)

for sent in sentences[:5]:

    print(sent)

    print('---')

[Emma by Jane Austen 1816]

VOLUME I

CHAPTER I

Emma Woodhouse, handsome, clever, and rich, with a comfortable home

and happy disposition, seemed to unite some of the best blessings

of existence; and had lived nearly twenty-one years in the world

with very little to distress or vex her.

---

She was the youngest of the two daughters of a most affectionate,

indulgent father; and had, in consequence of her sister's marriage,

been mistress of his house from a very early period.

---

Her mother

had died too long ago for her to have more than an indistinct

remembrance of her caresses; and her place had been supplied

by an excellent woman as governess, who had fallen little short

of a mother in affection.

---

Sixteen years had Miss Taylor been in Mr. Woodhouse's family,

less as a governess than a friend, very fond of both daughters,

but particularly of Emma.

---

Between _them_ it was more the intimacy

of sisters.

Word Segmentation¶

For some writing systems, tokenizing text is made more difficult by the fact that there is no visual representation of word boundaries. For example, in Chinese, the three-character string: 爱国人 (ai4 “love” [verb], guo3 “country”, ren2 “person”) could be tokenized as 爱国 / 人, “country-loving person,” or as 爱 / 国人, “love country-person.”

NLTK book
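
The ambiguity described above can be made concrete by enumerating every way to split a string into words from a lexicon. The sketch below is written for Python 3 (for non-ASCII string literals); the function segmentations and the four-entry lexicon are hypothetical and chosen only to reproduce the example.

```python
def segmentations(text, lexicon):
    """Return every way to split text into words found in lexicon."""
    if not text:
        return [[]]
    results = []
    for end in range(1, len(text) + 1):
        head = text[:end]
        if head in lexicon:
            # Segment the remainder recursively and prepend the head word.
            for rest in segmentations(text[end:], lexicon):
                results.append([head] + rest)
    return results

# Hypothetical toy lexicon, just large enough for the example.
lexicon = {'爱', '爱国', '国人', '人'}

for seg in segmentations('爱国人', lexicon):
    print(' / '.join(seg))
```

Both readings from the text are found. A real segmenter needs extra information, such as word frequencies, to choose between them.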

3. Categorizing and Tagging Words¶

The following imports are required in this chapter:

import nltk

Most of the time we’ll use the nltk.tag submodule. Its docstring is a great introduction to categorizing and tagging words.

help(nltk.tag)

Help on package nltk.tag in nltk:

NAME

nltk.tag - NLTK Taggers

FILE

/usr/local/lib/python2.7/dist-packages/nltk/tag/__init__.py

DESCRIPTION

This package contains classes and interfaces for part-of-speech

tagging, or simply "tagging".

A "tag" is a case-sensitive string that specifies some property of a token,

such as its part of speech. Tagged tokens are encoded as tuples

(tag, token). For example, the following tagged token combines

the word 'fly' with a noun part of speech tag ('NN'):

>>> tagged_tok = ('fly', 'NN')

An off-the-shelf tagger is available. It uses the Penn Treebank tagset:

>>> from nltk import pos_tag, word_tokenize

>>> pos_tag(word_tokenize("John's big idea isn't all that bad."))

[('John', 'NNP'), ("'s", 'POS'), ('big', 'JJ'), ('idea', 'NN'), ('is', 'VBZ'),

("n't", 'RB'), ('all', 'PDT'), ('that', 'DT'), ('bad', 'JJ'), ('.', '.')]

This package defines several taggers, which take a list of tokens,

assign a tag to each one, and return the resulting list of tagged tokens.

Most of the taggers are built automatically based on a training corpus.

For example, the unigram tagger tags each word w by checking what

the most frequent tag for w was in a training corpus:

>>> from nltk.corpus import brown

>>> from nltk.tag import UnigramTagger

>>> tagger = UnigramTagger(brown.tagged_sents(categories='news')[:500])

>>> sent = ['Mitchell', 'decried', 'the', 'high', 'rate', 'of', 'unemployment']

>>> for word, tag in tagger.tag(sent):

... print(word, '->', tag)

Mitchell -> NP

decried -> None

the -> AT

high -> JJ

rate -> NN

of -> IN

unemployment -> None

Note that words that the tagger has not seen during training receive a tag

of None.

We evaluate a tagger on data that was not seen during training:

>>> tagger.evaluate(brown.tagged_sents(categories='news')[500:600])

0.73...

For more information, please consult chapter 5 of the NLTK Book.

PACKAGE CONTENTS

api

brill

brill_trainer

crf

hmm

hunpos

mapping

perceptron

senna

sequential

stanford

tnt

util

FUNCTIONS

pos_tag(tokens, tagset=None)

Use NLTK's currently recommended part of speech tagger to

tag the given list of tokens.

>>> from nltk.tag import pos_tag

>>> from nltk.tokenize import word_tokenize

>>> pos_tag(word_tokenize("John's big idea isn't all that bad."))

[('John', 'NNP'), ("'s", 'POS'), ('big', 'JJ'), ('idea', 'NN'), ('is', 'VBZ'),

("n't", 'RB'), ('all', 'PDT'), ('that', 'DT'), ('bad', 'JJ'), ('.', '.')]

>>> pos_tag(word_tokenize("John's big idea isn't all that bad."), tagset='universal')

[('John', 'NOUN'), ("'s", 'PRT'), ('big', 'ADJ'), ('idea', 'NOUN'), ('is', 'VERB'),

("n't", 'ADV'), ('all', 'DET'), ('that', 'DET'), ('bad', 'ADJ'), ('.', '.')]

NB. Use pos_tag_sents() for efficient tagging of more than one sentence.

:param tokens: Sequence of tokens to be tagged

:type tokens: list(str)

:param tagset: the tagset to be used, e.g. universal, wsj, brown

:type tagset: str

:return: The tagged tokens

:rtype: list(tuple(str, str))

pos_tag_sents(sentences, tagset=None)

Use NLTK's currently recommended part of speech tagger to tag the

given list of sentences, each consisting of a list of tokens.

:param tokens: List of sentences to be tagged

:type tokens: list(list(str))

:param tagset: the tagset to be used, e.g. universal, wsj, brown

:type tagset: str

:return: The list of tagged sentences

:rtype: list(list(tuple(str, str)))

DATA

print_function = _Feature((2, 6, 0, 'alpha', 2), (3, 0, 0, 'alpha', 0)...

3.1. Tagged Corpora¶

Just use .tagged_words() method instead of .words():

nltk.corpus.brown.tagged_words(categories='news')

[(u'The', u'AT'), (u'Fulton', u'NP-TL'), ...]

Make sure that your corpus is tagged. If it is not, its reader has no .tagged_words() method and you’ll get an error:

nltk.corpus.gutenberg.tagged_words(categories='news')

Traceback (most recent call last):

File "", line 1, in

nltk.corpus.gutenberg.tagged_words(categories='news')

AttributeError: 'PlaintextCorpusReader' object has no attribute 'tagged_words'

Exercise¶

How often do different parts of speech appear in different genres? Use the brown corpus.

New concepts introduced in this exercise:

- brown.categories

- brown.tagged_words(tagset=...)

Solution¶

cfd = nltk.ConditionalFreqDist(

    (category, tag)

    for category in nltk.corpus.brown.categories()

    for (word, tag) in nltk.corpus.brown.tagged_words(

        categories=category, tagset='universal'))

cfd.tabulate()

                     .   ADJ   ADP   ADV  CONJ   DET  NOUN   NUM  PRON   PRT  VERB     X

adventure        10929  3364  7069  3879  2173  8155 13354   466  5205  2436 12274    38

belles_lettres   20975 13311 23214  8498  5954 21695 39546  1566  7715  3919 26354   349

editorial         7099  4958  7613  2997  1862  7416 15170   722  2291  1536  9896    44

fiction          10170  3684  7198  3716  2261  8018 13509   446  4799  2305 12299    83

government        7598  5692 10221  2333  2560  8043 19486  1612  1269  1358  9872    73

hobbies           9792  6559 10070  3736  2948  9497 21276  1426  2334  1889 12733    85

humor             3419  1362  2392  1172   713  2459  4405   154  1357   663  3546    53

learned          19632 15520 25938  8582  5783 22296 46295  3187  3799  3471 27145   240

lore             13073  8445 14405  5500  3793 13381 26749  1270  3970  2644 16972    97

mystery           8962  2721  5755  3493  1696  6209 10673   472  4362  2426 10383    17

news             11928  6706 12355  3349  2717 11389 30654  2166  2535  2264 14399    92

religion          4809  3004  5335  2087  1353  4948  8616   510  1770   910  6036    21

reviews           5354  3554  4832  2083  1453  4720 10528   477  1246   870  5478   109

romance          11397  3912  6918  3986  2469  7211 12550   321  5748  2655 12784    71

science_fiction   2428   929  1451   828   416  1582  2747    79   934   483  2579    14

Exercise¶

Which words follow the word often? How often are they verbs? Use the news category from the brown corpus. Remove duplicates and sort the result. New concepts introduced in this exercise:

- a reminder of nltk.bigrams

- set to remove duplicates

- sorted

Solution¶

Words that follow often:

words = nltk.corpus.brown.words(categories='news')

sorted(set(b for (a, b) in nltk.bigrams(words) if a == 'often'))

[u',',

u'.',

u'a',

u'acceptable',

u'ambiguous',

u'build',

u'did',

u'enough',

u'hear',

u'in',

u'mar',

u'needs',

u'obstructed',

u'that']

What parts of speech are these words?

tagged_words = nltk.corpus.brown.tagged_words(categories='news', tagset='universal')

fd = nltk.FreqDist(b[1]

                   for (a, b) in nltk.bigrams(tagged_words)

                   if a[0] == 'often')

fd.tabulate()

VERB  ADP    .  ADJ  ADV  DET

   6    2    2    2    1    1

How often are they verbs, as a fraction of all words that follow often?

fd.freq('VERB')

0.42857142857142855
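
The value 0.4286 is simply 6 of the 14 tokens that follow often. The same computation can be sketched without nltk: pair each item with its successor, keep the tags of the items that follow often, and divide. The bigrams helper below only approximates nltk.bigrams (it returns a list rather than a generator), and the tagged sentence is made up.

```python
def bigrams(sequence):
    """Pair each item with its successor, roughly like nltk.bigrams."""
    items = list(sequence)
    return list(zip(items, items[1:]))

# Made-up tagged tokens for illustration.
tagged = [('He', 'PRON'), ('often', 'ADV'), ('runs', 'VERB'),
          ('and', 'CONJ'), ('often', 'ADV'), ('late', 'ADV'), ('.', '.')]

# Tags of the tokens that directly follow 'often'.
following_tags = [b[1] for (a, b) in bigrams(tagged) if a[0] == 'often']

# Relative frequency of verbs among them, like fd.freq('VERB').
verb_share = following_tags.count('VERB') / float(len(following_tags))

print(following_tags)  # ['VERB', 'ADV']
print(verb_share)      # 0.5
```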

Exercise¶

Find words that are highly ambiguous as to their part-of-speech tag, that is, words that occur with at least four different parts of speech in different contexts. Use the brown corpus.

For example, close can be:

- an adjective: Only the families and a dozen close friends will be present.

- an adverb: Both came close to playing football at the University of Oklahoma.

- a verb: Position your components before you close them in.

- a noun: One night, at the close of the evening service, he came forward.

Solution¶

tagged_words = nltk.corpus.brown.tagged_words(categories='news', tagset='universal')

cfd = nltk.ConditionalFreqDist((word.lower(), tag)

                               for (word, tag) in tagged_words)

for word in sorted(cfd.conditions()):

    if len(cfd[word]) > 3:

        tags = [tag for (tag, _) in cfd[word].most_common()]

        print('{:20} {}'.format(word, ' '.join(tags)))

best                 ADJ ADV NOUN VERB

close                ADV ADJ VERB NOUN

open                 ADJ VERB NOUN ADV

present              ADJ ADV NOUN VERB

that                 ADP DET PRON ADV

3.2. Tagged Tokens¶

You can construct tagged tokens from scratch:

tagged_token = nltk.tag.str2tuple('fly/NN')

tagged_token

('fly', 'NN')
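
The word/TAG notation is convenient for writing whole tagged sentences by hand. As a sketch of the idea, the following standard-library function splits each item at its last separator, so that words containing a slash still work. parse_tagged is a hypothetical helper, not part of nltk, and its handling of untagged items (tag None) is an assumption made for this example.

```python
def parse_tagged(text, sep='/'):
    """Turn 'word/TAG word/TAG ...' into a list of (word, tag) tuples."""
    pairs = []
    for item in text.split():
        # Split at the LAST separator so 'and/or/CC' keeps 'and/or' intact.
        word, found, tag = item.rpartition(sep)
        if found:
            pairs.append((word, tag.upper()))
        else:
            # No separator at all: keep the word and leave the tag unset.
            pairs.append((item, None))
    return pairs

print(parse_tagged('The/AT grand/JJ jury/NN and/or/CC said'))
```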

However, most of the time you’ll operate on a much higher level, that is on whole tagged corpora.

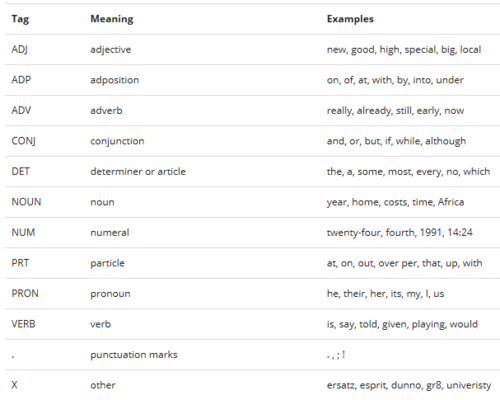

3.3. Part-of-Speech Tagset¶

Here is a simplified part-of-speech tagset:

3.4. Python Dictionaries¶

Python dictionaries are a data type that lets you map from arbitrary hashable (typically immutable) objects, called keys, to other objects, called values. Most of the time, keys are strings. For example, you can map from strings to strings:

pos = {}

pos['ideas'] = 'NOUN'

pos

{'ideas': 'NOUN'}

defaultdict¶

A very common scenario is when you want to have a default value when a key is not in the dictionary. For example, if you count words, you map from words to number of occurrences. The default value should be zero.

from collections import defaultdict

words = 'A B C A'.split(' ')

count = defaultdict(int)

for word in words:

    count[word] += 1

count

defaultdict(int, {'A': 2, 'B': 1, 'C': 1})

Another use case is when you want to have more than one value for each key. This can be simulated by a dictionary mapping from keys to lists of values. The default value is a new empty list:

all_words = nltk.corpus.brown.tagged_words(categories='news', tagset='universal')

words_by_part_of_speech = defaultdict(list)

for word, part_of_speech in all_words[:100]:

    words_by_part_of_speech[part_of_speech].append(word)

for key, words in words_by_part_of_speech.items():

    print('{} -> {}'.format(key, ", ".join(words)))

ADV -> further

NOUN -> Fulton, County, Jury, Friday, investigation, Atlanta's, primary, election, evidence, irregularities, place, jury, term-end, presentments, City, Committee, charge, election, praise, thanks, City, Atlanta, manner, election, September-October, term, jury, Fulton, Court, Judge, Durwood, Pye, reports, irregularities, primary, Mayor-nominate, Ivan

ADP -> of, that, in, that, of, of, of, for, in, by, of, in, by

DET -> The, an, no, any, The, the, which, the, the, the, the, which, the, The, the, which

. -> ``, '', ., ,, ,, ``, '', ., ``, ''

PRT -> to

VERB -> said, produced, took, said, had, deserves, was, conducted, had, been, charged, investigate, was, won

CONJ -> and

ADJ -> Grand, recent, Executive, over-all, Superior, possible, hard-fought

3.5. Words to Properties mapping¶

Exercise¶

Create a mapping from a word to its parts of speech. Note that one word can have more than one part-of-speech tag (e.g. close). Use the news category from the brown corpus.

New concepts introduced in this exercise:

- set.add

Solution¶

tagged_words = nltk.corpus.brown.tagged_words(categories='news', tagset='universal')

words_to_part_of_speech = defaultdict(set)

for word, part_of_speech in tagged_words[:200]:

    words_to_part_of_speech[word].add(part_of_speech)

for word, part_of_speeches in list(words_to_part_of_speech.items())[:10]:

    print('{} -> {}'.format(word, ", ".join(part_of_speeches)))

modernizing -> VERB

produced -> VERB

thanks -> NOUN

Durwood -> NOUN

find -> VERB

Jury -> NOUN

September-October -> NOUN

had -> VERB

, -> .

to -> PRT, ADP

3.6. Automatic Tagging¶

Sometimes you don’t have access to a tagged corpus and have only raw text. In that case, you may want to tag the text automatically.

Builtin Taggers¶

nltk has a lot of builtin taggers:

[x for x in dir(nltk) if 'tagger' in x.lower()]

['AffixTagger',

'BigramTagger',

'BrillTagger',

'BrillTaggerTrainer',

'CRFTagger',

'ClassifierBasedPOSTagger',

'ClassifierBasedTagger',

'ContextTagger',

'DefaultTagger',

'HiddenMarkovModelTagger',

'HunposTagger',

'NgramTagger',

'PerceptronTagger',

'RegexpTagger',

'SennaChunkTagger',

'SennaNERTagger',

'SennaTagger',

'SequentialBackoffTagger',

'StanfordNERTagger',

'StanfordPOSTagger',

'StanfordTagger',

'TaggerI',

'TrigramTagger',

'UnigramTagger']

We’ll use the brown corpus to evaluate the taggers’ performance:

brown_tagged_sents = nltk.corpus.brown.tagged_sents(categories='news', tagset='universal')

DefaultTagger¶

The default tagger assigns the same tag to every token. Let’s see which part of speech is the most common:

tagged_words = nltk.corpus.brown.tagged_words(categories='news', tagset='universal')

tags = [tag for (word, tag) in tagged_words]

fd = nltk.FreqDist(tags)

fd.most_common(10)

[(u'NOUN', 30654),

(u'VERB', 14399),

(u'ADP', 12355),

(u'.', 11928),

(u'DET', 11389),

(u'ADJ', 6706),

(u'ADV', 3349),

(u'CONJ', 2717),

(u'PRON', 2535),

(u'PRT', 2264)]

This is the simplest possible tagger and it’s not very good:

default_tagger = nltk.DefaultTagger('NOUN')

default_tagger.evaluate(brown_tagged_sents)

0.30485112476878096
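
This score can be checked by hand: a tagger that always answers NOUN is right exactly as often as NOUN occurs, so its accuracy is the NOUN count divided by the total number of tokens. The sketch below repeats that arithmetic; the ten largest counts are the ones printed above, while the NUM and X counts are taken from the news row of the genre table in section 3.1.

```python
# Tag counts for the news category (universal tagset).
tag_counts = {
    'NOUN': 30654, 'VERB': 14399, 'ADP': 12355, '.': 11928,
    'DET': 11389, 'ADJ': 6706, 'ADV': 3349, 'CONJ': 2717,
    'PRON': 2535, 'PRT': 2264, 'NUM': 2166, 'X': 92,
}

total = sum(tag_counts.values())
accuracy = tag_counts['NOUN'] / float(total)

print(total)               # 100554
print(round(accuracy, 4))  # 0.3049
```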

RegexpTagger¶

The regular expression tagger tries to match a word against a list of regular expressions in order. A regular expression can, for example, check the suffix. The first expression that matches determines the tag, so more specific patterns must come before more general ones.

patterns = [

(r'.*ing$', 'VERB'), # gerunds

(r'.*ed$', 'VERB'), # simple past

(r'.*es$', 'VERB'), # 3rd singular present

(r'.*ould$', 'VERB'), # modals

(r'.*\'s$', 'NOUN'), # possessive nouns

(r'.*s$', 'NOUN'), # plural nouns

(r'^-?[0-9]+(.[0-9]+)?$', 'NUM'), # cardinal numbers

(r'.*', 'NOUN'), # nouns (default)

]

regexp_tagger = nltk.RegexpTagger(patterns)

However, its performance is not very good:

regexp_tagger.evaluate(brown_tagged_sents)

0.34932474093521887
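
The matching logic itself is short. The following standard-library sketch tags each token with the tag of the first pattern that matches; regexp_tag is a hypothetical helper, the pattern list is an abridged variant of the one above, and the tokens are made up. It illustrates the first-match idea rather than reproducing nltk.RegexpTagger.

```python
import re

# Abridged pattern list; order matters because the first match wins.
patterns = [
    (r'.*ing$', 'VERB'),                  # gerunds
    (r'.*ed$', 'VERB'),                   # simple past
    (r'.*s$', 'NOUN'),                    # plural nouns
    (r'^-?[0-9]+(\.[0-9]+)?$', 'NUM'),    # cardinal numbers
    (r'.*', 'NOUN'),                      # nouns (default)
]

def regexp_tag(tokens, patterns):
    """Tag each token with the tag of the first matching pattern."""
    compiled = [(re.compile(p), tag) for p, tag in patterns]
    tagged = []
    for token in tokens:
        for regex, tag in compiled:
            if regex.match(token):
                tagged.append((token, tag))
                break
    return tagged

result = regexp_tag(['running', 'dogs', '3.5', 'red', 'sing'], patterns)
print(result)
```

The adjective red and the base form sing are both tagged VERB because of their endings, which shows why suffix rules alone give low accuracy.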

UnigramTagger¶

This tagger is given a list of, for example, the hundred most frequent words along with their most likely tags. This data is then used to tag text. If a word is not known to the tagger, it assigns None.

fd = nltk.FreqDist(nltk.corpus.brown.words(categories='news'))

most_freq_words = fd.most_common(100)

cfd = nltk.ConditionalFreqDist(nltk.corpus.brown.tagged_words(categories='news', tagset='universal'))

likely_tags = dict((word, cfd[word].max()) for (word, _) in most_freq_words)

list(likely_tags.items())[:20]

[(u'all', u'PRT'),

(u'over', u'ADP'),

(u'years', u'NOUN'),

(u'against', u'ADP'),

(u'its', u'DET'),

(u'before', u'ADP'),

(u'(', u'.'),

(u'had', u'VERB'),

(u',', u'.'),

(u'to', u'PRT'),

(u'only', u'ADJ'),

(u'under', u'ADP'),

(u'has', u'VERB'),

(u'New', u'ADJ'),

(u'them', u'PRON'),

(u'his', u'DET'),

(u'Mrs.', u'NOUN'),

(u'they', u'PRON'),

(u'not', u'ADV'),

(u'now', u'ADV')]

What is its performance?

unigram_tagger = nltk.UnigramTagger(model=likely_tags)

unigram_tagger.evaluate(brown_tagged_sents)

0.46503371322871295

UnigramTagger with backoff¶

If the UnigramTagger does not recognize a word and cannot assign any tag, it can delegate to another tagger, called a backoff tagger. What is the performance if we back off to the default tagger?

unigram_with_backoff = nltk.UnigramTagger(model=likely_tags,

                                          backoff=nltk.DefaultTagger('NOUN'))

unigram_with_backoff.evaluate(brown_tagged_sents)

0.7582194641685065
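
The jump in accuracy comes from the unknown words: without a fallback they get None, which always counts as an error, while a fallback to NOUN is right whenever the unknown word really is a noun. The sketch below reproduces this effect with plain dictionaries; lookup_tag and accuracy are hypothetical helpers, and the model and the gold-standard tokens are made up.

```python
def lookup_tag(tokens, model, default=None):
    """Tag known words from the model; unknown words get the default."""
    return [(t, model.get(t, default)) for t in tokens]

def accuracy(tagged, gold):
    """Fraction of tokens whose predicted tag equals the gold tag."""
    correct = sum(1 for (p, g) in zip(tagged, gold) if p == g)
    return correct / float(len(gold))

# Made-up model and gold standard for illustration.
model = {'the': 'DET', 'of': 'ADP', 'said': 'VERB'}
gold = [('the', 'DET'), ('jury', 'NOUN'), ('said', 'VERB'),
        ('it', 'PRON'), ('won', 'VERB'), ('praise', 'NOUN')]
tokens = [word for (word, _) in gold]

no_fallback = accuracy(lookup_tag(tokens, model), gold)
with_fallback = accuracy(lookup_tag(tokens, model, default='NOUN'), gold)

print(no_fallback)    # 2 of 6 correct
print(with_fallback)  # 4 of 6 correct
```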

If we use 10000 most common words instead of 100 for the unigram tagger, we’ll get much better results:

fd = nltk.FreqDist(nltk.corpus.brown.words(categories='news'))

most_freq_words = fd.most_common(10000)

cfd = nltk.ConditionalFreqDist(nltk.corpus.brown.tagged_words(categories='news', tagset='universal'))

likely_tags = dict((word, cfd[word].max()) for (word, _) in most_freq_words)

unigram_with_backoff = nltk.UnigramTagger(model=likely_tags,

                                          backoff=nltk.DefaultTagger('NOUN'))

unigram_with_backoff.evaluate(brown_tagged_sents)

0.9489826361954771

3.7. Determining the Category of a Word¶

The example regular expression tagger tried to determine the part of speech by looking at the suffix of a word. This is known as a morphological clue.

If you look at the context in which a word occurs, you leverage syntactic clues. For example, adjectives can occur before a noun or after forms of the verb be. This way, you can identify that near is an adjective in the following phrases:

- the near window

- the end is near

The meaning of a word can also help you determine its part of speech. For example, nouns are basically names of people, places or things. These are known as semantic clues.

4. Text Classification (Machine Learning)¶

import nltk

The docstrings of nltk submodules are a helpful starting point when learning a new topic:

help(nltk.classify)

Help on package nltk.classify in nltk:

NAME

nltk.classify

FILE

/usr/local/lib/python2.7/dist-packages/nltk/classify/__init__.py

DESCRIPTION

Classes and interfaces for labeling tokens with category labels (or

"class labels"). Typically, labels are represented with strings

(such as 'health' or 'sports'). Classifiers can be used to

perform a wide range of classification tasks. For example,

classifiers can be used...

- to classify documents by topic

- to classify ambiguous words by which word sense is intended

- to classify acoustic signals by which phoneme they represent

- to classify sentences by their author

Features

========

In order to decide which category label is appropriate for a given

token, classifiers examine one or more 'features' of the token. These

"features" are typically chosen by hand, and indicate which aspects

of the token are relevant to the classification decision. For

example, a document classifier might use a separate feature for each

word, recording how often that word occurred in the document.

Featuresets

===========

The features describing a token are encoded using a "featureset",

which is a dictionary that maps from "feature names" to "feature

values". Feature names are unique strings that indicate what aspect

of the token is encoded by the feature. Examples include

'prevword', for a feature whose value is the previous word; and

'contains-word(library)' for a feature that is true when a document

contains the word 'library'. Feature values are typically

booleans, numbers, or strings, depending on which feature they

describe.

Featuresets are typically constructed using a "feature detector"

(also known as a "feature extractor"). A feature detector is a

function that takes a token (and sometimes information about its

context) as its input, and returns a featureset describing that token.

For example, the following feature detector converts a document

(stored as a list of words) to a featureset describing the set of

words included in the document:

>>> # Define a feature detector function.

>>> def document_features(document):

... return dict([('contains-word(%s)' % w, True) for w in document])

Feature detectors are typically applied to each token before it is fed

to the classifier:

>>> # Classify each Gutenberg document.

>>> from nltk.corpus import gutenberg

>>> for fileid in gutenberg.fileids(): # doctest: +SKIP

... doc = gutenberg.words(fileid) # doctest: +SKIP

... print fileid, classifier.classify(document_features(doc)) # doctest: +SKIP

The parameters that a feature detector expects will vary, depending on

the task and the needs of the feature detector. For example, a

feature detector for word sense disambiguation (WSD) might take as its

input a sentence, and the index of a word that should be classified,

and return a featureset for that word. The following feature detector

for WSD includes features describing the left and right contexts of

the target word:

>>> def wsd_features(sentence, index):

... featureset = {}

... for i in range(max(0, index-3), index):

... featureset['left-context(%s)' % sentence[i]] = True

... for i in range(index, max(index+3, len(sentence))):

... featureset['right-context(%s)' % sentence[i]] = True

... return featureset

Training Classifiers

====================

Most classifiers are built by training them on a list of hand-labeled

examples, known as the "training set". Training sets are represented

as lists of (featuredict, label) tuples.

PACKAGE CONTENTS

api

decisiontree

maxent

megam

naivebayes

positivenaivebayes

rte_classify

scikitlearn

senna

svm

tadm

textcat

util

weka

4.1. Supervised Classification

Classification is the process of assigning a class label to an input. For example, you may want to find out whether an email is spam or not. In that case there are two class labels: spam and non-spam, and the input is the email.

A supervised classifier is built from a training corpus in which each input already has a class label assigned. For example, for supervised email classification you may use a corpus of emails, each marked as spam or non-spam.

Classifiers don’t work directly on the input. Instead, you convert the input to a featureset, that is, a dictionary mapping feature names to their values. The classifier then looks only at the featuresets.

Features are properties of the input. For example, in spam classification, a feature can be the number of occurrences of each word. That is, the featureset can be a dictionary mapping words to the number of their occurrences. It’s important that feature values are primitive values, such as booleans, numbers or strings.
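A minimal sketch of the spam example, assuming whitespace tokenization is good enough: the hypothetical word_count_features function turns an email into a featureset of word counts, and a training set is then a list of (featureset, label) pairs. The two emails are invented for illustration.

```python
from collections import Counter

def word_count_features(email_text):
    """Map each lowercased word of the email to its number of occurrences."""
    return dict(Counter(email_text.lower().split()))

# Hypothetical hand-labeled emails standing in for a real training corpus.
labeled_emails = [
    ('Win money now win', 'spam'),
    ('Meeting moved to Monday', 'non-spam'),
]

# The training set pairs each featureset with its class label.
train_set = [(word_count_features(text), label)
             for (text, label) in labeled_emails]

print(train_set[0])
```

A list in this (featureset, label) format is what nltk classifiers such as nltk.NaiveBayesClassifier.train expect as their training data.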

4.2. Sentence Segmentation

We’ll use supervised classification for sentence segmentation, that is, the task of splitting a list of tokens into a list of sentences (each of which is a list of tokens).

This is indeed a classification problem: for each token, you decide whether it ends a sentence or not.

Input

We’ll use the treebank_raw corpus. We need to convert the input into a format that is more convenient for supervised learning: one flat list of tokens, plus the set of indexes of the tokens that end a sentence:

sents = nltk.corpus.treebank_raw.sents()

tokens = []

boundaries = set()

offset = 0

for sent in sents:

    tokens.extend(sent)

    offset += len(sent)

    boundaries.add(offset-1)

print(' '.join(tokens[:50]))

. START Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov . 29 . Mr . Vinken is chairman of Elsevier N . V ., the Dutch publishing group . . START Rudolph Agnew , 55 years old and former chairman of Consolidated
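To see what the loop above produces, here is the same conversion run on two hand-made sentences (toy_sents is a hypothetical stand-in for treebank_raw.sents(), so no corpus download is needed). Each boundary is the index of the last token of a sentence in the flat token list.

```python
# Hypothetical pre-tokenized sentences standing in for treebank_raw.sents().
toy_sents = [['Hello', 'world', '.'], ['How', 'are', 'you', '?']]

toy_tokens = []
toy_boundaries = set()
offset = 0
for sent in toy_sents:
    toy_tokens.extend(sent)
    offset += len(sent)
    # offset now points one past the sentence, so offset-1 is its last token.
    toy_boundaries.add(offset - 1)

print(toy_tokens)
print(sorted(toy_boundaries))  # [2, 6]
```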

Extracting Features

Classifiers don’t operate directly on the input. Instead, they look at featuresets, which contain some properties of the input. Therefore, we need a function that converts the input to a featureset:

def punct_features(tokens, i):

    return {'next-word-capitalized': tokens[i+1][0].isupper(),

            'prev-word': tokens[i-1].lower(),

            'punct': tokens[i],

            'prev-word-is-one-char': len(tokens[i-1]) == 1}
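As a quick check of what the extractor returns, the sketch below applies it to a short hand-made token list (toy_tokens is invented for this example). The function body is repeated so that the snippet runs on its own.

```python
def punct_features(tokens, i):
    """Describe the punctuation token at index i by its immediate neighbours."""
    return {'next-word-capitalized': tokens[i+1][0].isupper(),
            'prev-word': tokens[i-1].lower(),
            'punct': tokens[i],
            'prev-word-is-one-char': len(tokens[i-1]) == 1}

# Hypothetical tokens: the first dot is an abbreviation, the second ends a sentence.
toy_tokens = ['Mr', '.', 'Smith', 'left', '.', 'He', 'ran', '.']

abbrev_dot = punct_features(toy_tokens, 1)
final_dot = punct_features(toy_tokens, 4)
print(abbrev_dot)
print(final_dot)
```

Both dots are followed by a capitalized word, so only the prev-word feature separates them here. Note also that the function reads tokens[i-1] and tokens[i+1], so it must not be called on the first or last token of the list.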

Then, convert each candidate punctuation token (., ? or !) to a featureset, paired with a label saying whether it is a sentence boundary:

featuresets = [(punct_features(tokens, i), (i in boundaries))

               for i in range(1, len(tokens)-1)

               if tokens[i] in '.?!']

featuresets[:8]

[({'next-word-capitalized': False,

'prev-word': u'nov',

'prev-word-is-one-char': False,

'punct': u'.'},

False),

({'next-word-capitalized': True,

'prev-word': u'29',

'prev-word-is-one-char': False,

'punct': u'.'},

True),

({'next-word-capitalized': True,

'prev-word': u'mr',

'prev-word-is-one-char': False,

'punct': u'.'},