Hadoop Administration

What does Hadoop mean?⌘

Outline⌘

- First Day:

- Session I:

- Introduction to the course

- Introduction to Big Data and Hadoop

- Session II:

- HDFS

- Session III:

- YARN

- Session IV:

- MapReduce

Outline #2⌘

- Second Day:

- Session I:

- Installation and configuration of the Hadoop in a pseudo-distributed mode

- Session II:

- Running MapReduce jobs on the Hadoop cluster

- Session III:

- Hadoop ecosystem tools: Pig, Hive, Sqoop, HBase, Flume, Oozie

- Session IV:

- Supporting tools: Hue, Cloudera Manager

- Big Data future: Impala, Tez, Spark, NoSQL

Outline #3⌘

- Third Day:

- Session I:

- Hadoop cluster planning

- Session II:

- Hadoop cluster installation and configuration

- Session III:

- Hadoop cluster installation and configuration

- Session IV:

- Case Studies

- Certification and Surveys

First Day - Session I⌘

"I think there is a world market for maybe five computers"⌘

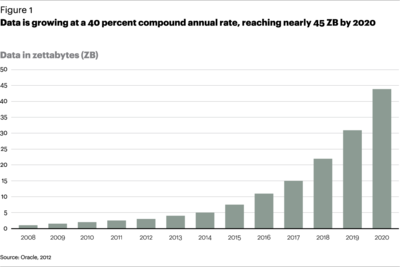

How big are the data we are talking about?⌘

Foundations of Big Data⌘

What is Hadoop?⌘

- Apache project for storing and processing large data sets

- Open-source implementation of Google Big Data solutions

- Components:

- core:

- HDFS (Hadoop Distributed File System)

- YARN (Yet Another Resource Negotiator)

- noncore:

- Data processing paradigms (MapReduce, Spark, Impala, Tez, etc.)

- Ecosystem tools (Pig, Hive, Sqoop, HBase, etc.)

- Written in Java

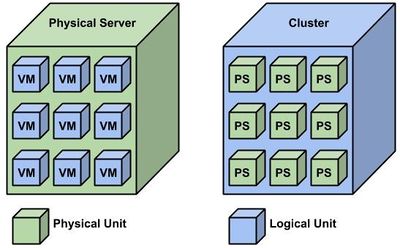

Virtualization vs clustering⌘

Data storage evolution⌘

- 1956 - HDD (Hard Disk Drive), now up to 6 TB

- 1983 - SSD (Solid State Drive), now up to 16 TB

- 1984 - NFS (Network File System), first NAS (Network Attached Storage) implementation

- 1987 - RAID (Redundant Array of Independent Disks), now up to ~100 disks

- 1993 - Disk Arrays, now up to ~200 disks

- 1994 - Fibre-channel, first SAN (Storage Area Network) implementation

- 2003 - GFS (Google File System), first Big Data implementation

Big Data and Hadoop evolution⌘

- 2003 - GFS (Google File System) publication

- 2004 - Google MapReduce publication

- 2005 - Hadoop project founded

- 2006 - Google BigTable publication

- 2008 - First Hadoop implementation

- 2010 - Google Dremel publication

- 2010 - First HBase implementation

- 2012 - Apache Hadoop YARN project founded

- 2013 - Cloudera releases Impala

- 2014 - Apache releases Spark

Hadoop distributions⌘

- Apache Hadoop, http://hadoop.apache.org/

- CDH (Cloudera Distribution including Apache Hadoop), http://www.cloudera.com/

- HDP (Hortonworks Data Platform), http://hortonworks.com/

- M3, M5 and M7, http://www.mapr.com/

- Amazon Elastic MapReduce, https://aws.amazon.com/elasticmapreduce/

- BigInsights Enterprise Edition, http://www.ibm.com/

- Intel Distribution for Apache Hadoop, http://hadoop.intel.com/

- And more ...

Hadoop automated deployment tools⌘

- Cloudera Manager, https://www.cloudera.com/products/cloudera-manager.html

- Apache Ambari, https://ambari.apache.org/

- MapR Control System, http://doc.mapr.com/display/MapR/MapR+Control+System

- VMware Big Data Platform, https://www.vmware.com/products/big-data-extensions

- OpenStack Sahara, https://wiki.openstack.org/wiki/Sahara

- Amazon Elastic MapReduce, https://aws.amazon.com/elasticmapreduce/

- HDInsight, https://azure.microsoft.com/en-us/services/hdinsight/

- Hadoop on Google Cloud Platform, https://cloud.google.com/hadoop/

Who uses Hadoop?⌘

Where is it all going?⌘

- At this point we have all the tools to start changing the future:

- capability of storing and processing any amount of data

- tools for storing and processing both structured and unstructured data

- tools for batch and interactive data processing

- Big Data paradigm has matured

- BigData future:

- search engines

- scientific analysis

- trends prediction

- business intelligence

- artificial intelligence

References⌘

- Books:

- E. Sammer. "Hadoop Operations". O'Reilly, 1st edition

- T. White. "Hadoop: The Definitive Guide". O'Reilly, 4th edition

- Publications:

- Websites:

- Apache Hadoop project website: http://hadoop.apache.org/

- Cloudera website: http://www.cloudera.com/content/cloudera/en/home.html

- Hortonworks website: http://hortonworks.com/

- MapR website: http://www.mapr.com/

- Internet

Introduction to the lab⌘

Lab components:

- Laptop with Windows 8

- Virtual Machine with CentOS 7.2 on VirtualBox:

- Hadoop instance in a pseudo-distributed mode

- Hadoop ecosystem tools

- terminal for connecting to the GCE

- GCE (Google Compute Engine) cloud-based IaaS platform

- Hadoop cluster

VirtualBox⌘

- VirtualBox 64-bit version

- VM name: Hadoop Administration

- Credentials: terminal / terminal (use root account)

- Snapshots (top right corner)

- Press right "Control" key to release

GCE⌘

- GUI: https://console.cloud.google.com/compute/

- Project ID: check after logging in, in the "My First Project" tab

- Connect the VM to the GCE:

- sudo /opt/google-cloud-sdk/bin/gcloud auth login

- follow on-screen instructions

- sudo /opt/google-cloud-sdk/bin/gcloud config set project [Project ID]

- sudo /opt/google-cloud-sdk/bin/gcloud components update

- sudo /opt/google-cloud-sdk/bin/gcloud config list

First Day - Session II⌘

Goals and motivation⌘

- Very large files:

- file sizes larger than tens of terabytes

- filesystem sizes larger than tens of petabytes

- Streaming data access:

- write-once, read-many-times approach

- optimized for batch processing

- Fault tolerance:

- data replication

- metadata high availability

- Scalability:

- commodity hardware

- horizontal scalability

- Resilience:

- component failures are common

- auto-healing feature

- Support for MapReduce data processing paradigm

Design⌘

- FUSE (Filesystem in USErspace)

- Non POSIX-compliant filesystem (http://standards.ieee.org/findstds/standard/1003.1-2008.html)

- Block abstraction:

- block size: 64 MB

- block replication factor: 3

- Benefits:

- support for file sizes larger than disk sizes

- segregation of data and metadata

- data durability

- Lab Exercise 1.2.1
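The block abstraction above can be illustrated with a little arithmetic. The following is a minimal sketch, assuming the 64 MB block size and replication factor of 3 quoted on this slide; the function names and the 1000 MB file are hypothetical.

```python
import math

BLOCK_SIZE_MB = 64        # block size quoted on this slide
REPLICATION_FACTOR = 3    # replication factor quoted on this slide


def block_count(file_size_mb: float, block_size_mb: int = BLOCK_SIZE_MB) -> int:
    """Number of blocks needed to hold a file of the given size."""
    return math.ceil(file_size_mb / block_size_mb)


def raw_storage_mb(file_size_mb: float, replication: int = REPLICATION_FACTOR) -> float:
    """Disk space used across the cluster once every block is replicated."""
    return file_size_mb * replication


if __name__ == "__main__":
    size_mb = 1000  # hypothetical file size
    print(block_count(size_mb), "blocks")
    print(raw_storage_mb(size_mb), "MB of raw disk space")
```

Because a file is split into blocks that can live on different datanodes, its size is not bounded by the capacity of any single disk.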

Daemons⌘

- Datanode:

- responsible for storing and retrieving data

- many per cluster

- Namenode:

- responsible for storing and retrieving metadata

- responsible for maintaining a database of data locations

- 1 active per cluster

- Secondary namenode:

- responsible for checkpointing metadata

- 1 active per cluster

Data⌘

- Stored in the form of blocks on datanodes

- Blocks are files on datanodes' local filesystem

- Reported periodically to the namenode in the form of block reports

- Durability and parallel processing thanks to replication

Metadata⌘

- Stored in the form of files on the namenode:

- fsimage_[transaction ID] - complete snapshot of the filesystem metadata up to specified transaction

- edits_inprogress_[transaction ID] - incremental modifications made to the metadata since specified transaction

- Contain information on HDFS filesystem's structure and properties

- Copied and served from the namenode's RAM

- Don't confuse metadata with the database of data locations!

- Lab Exercise 1.2.2

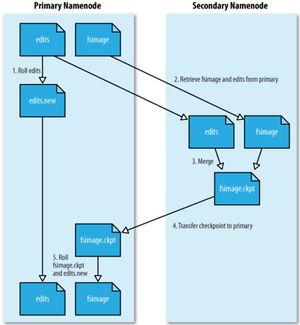

Metadata checkpointing⌘

- When does it occur?

- every hour

- edits file reaches 64 MB

- How does it work?

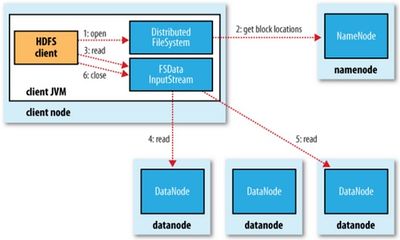
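The two checkpoint triggers listed on this slide can be expressed as a single condition. This is only a sketch of the decision rule, assuming the one-hour period and 64 MB threshold quoted above; the function and constant names are hypothetical and do not correspond to Hadoop configuration properties.

```python
CHECKPOINT_PERIOD_S = 3600               # "every hour"
EDITS_SIZE_LIMIT_BYTES = 64 * 1024 ** 2  # "edits file reaches 64 MB"


def checkpoint_due(seconds_since_last: float, edits_size_bytes: int) -> bool:
    """Return True when either checkpoint trigger has fired."""
    return (seconds_since_last >= CHECKPOINT_PERIOD_S
            or edits_size_bytes >= EDITS_SIZE_LIMIT_BYTES)
```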

Read path⌘

1. A client opens a file by calling the "open()" method on the "FileSystem" object

2. The client asks the namenode for a sorted list of datanodes for the first batch of blocks in the file

3. The client connects to the first datanode from the list

4. The client streams the data block from the datanode

5. The client closes the connection to the datanode

6. The client repeats steps 3-5 for the next block or steps 2-5 for the next batch of blocks, or closes the file when copied

Read path #2⌘

Data read preferences⌘

- Determines the closest datanode to the client

- The distance is based on the theoretical network bandwidth and latency:

- 0 - the same node

- 2 - the same rack

- 4 - the same data center

- 6 - different data centers

- Rack Topology feature has to be configured prior

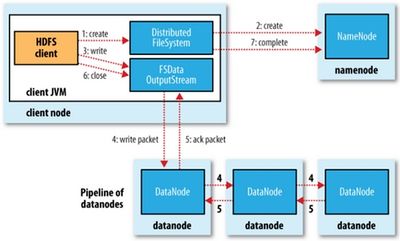
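The distance values above follow from counting hops to the closest common ancestor in the topology tree. A minimal sketch, assuming each node is identified by a hypothetical "/datacenter/rack/node" path:

```python
def network_distance(a: str, b: str) -> int:
    """Hops from each node up to their closest common ancestor, summed."""
    path_a = a.strip("/").split("/")
    path_b = b.strip("/").split("/")
    common = 0
    for x, y in zip(path_a, path_b):
        if x != y:
            break
        common += 1
    return (len(path_a) - common) + (len(path_b) - common)


def sort_by_distance(client: str, replicas: list) -> list:
    """Order replica locations so the closest one to the client comes first."""
    return sorted(replicas, key=lambda node: network_distance(client, node))
```

With this ordering, a client reading a block would try the replica on its own node first, then one in its rack, and only then a remote one.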

Write path⌘

1. A client creates a file by calling the "create()" method on the "DistributedFileSystem" object

2. The client calls the namenode to create the file with no blocks in the filesystem namespace

3. The client asks the namenode for a list of datanodes to store replicas of a batch of data blocks

4. The client connects to the first datanode from the list

5. The client streams the data block to the datanode

6. The datanode connects to the second datanode from the list, streams the data block, and so on

7. The datanodes acknowledge receipt of the data block

8. The client repeats steps 4-5 for the next blocks or steps 3-5 for the next batch of blocks, or closes the file when written

9. The client notifies the namenode that the file is written

Write path #2⌘

Data write preferences⌘

- 1st copy - on the client (if running datanode daemon) or on a randomly selected non-overloaded datanode

- 2nd copy - on a datanode in a different rack in the same data center

- 3rd copy - on a randomly selected non-overloaded datanode in the same rack as the 2nd copy

- 4th and further copies - on randomly selected non-overloaded datanodes

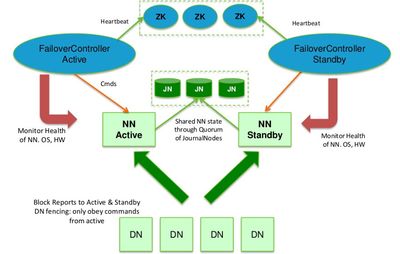
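The placement rules for the first three copies can be sketched as follows. This is a simplified illustration, not the namenode's actual placement code: it ignores datanode load and data centers, and it assumes the cluster has at least two racks and at least two datanodes in the rack chosen for the 2nd copy. The `nodes` mapping and the function name are hypothetical.

```python
import random


def place_replicas(nodes: dict, client: str = None, rng: random.Random = None) -> list:
    """Pick three datanodes; `nodes` maps a datanode name to its rack name."""
    rng = rng or random.Random()
    names = sorted(nodes)
    # 1st copy: the client itself if it runs a datanode, otherwise a random node.
    first = client if client in nodes else rng.choice(names)
    # 2nd copy: a node in a different rack than the 1st copy.
    second = rng.choice([n for n in names if nodes[n] != nodes[first]])
    # 3rd copy: a different node in the same rack as the 2nd copy.
    third = rng.choice([n for n in names
                        if nodes[n] == nodes[second] and n != second])
    return [first, second, third]
```

Spreading the copies over two racks protects against the loss of a whole rack, while keeping two copies in one rack limits cross-rack write traffic.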

Namenode High Availability⌘

- Namenode - SPOF (Single Point Of Failure)

- Manual namenode recovery process may take up to 30 minutes

- Namenode HA feature available since 0.23 release

- High Availability type: active / standby

- Based on: QJM (Quorum Journal Manager) and ZooKeeper

- Failover type: manual or automatic with ZKFC (ZooKeeper Failover Controller)

- STONITH (Shoot The Other Node In The Head)

- Fencing methods

- Secondary namenode functionality moved to the standby namenode

Namenode High Availability #2⌘

Journal Node⌘

- Relatively lightweight

- Usually collocated on the machines running namenode or resource manager daemons

- Changes to the "edits" file must be written to a majority of journal nodes

- Usually deployed in 3, 5, 7, 9, etc.

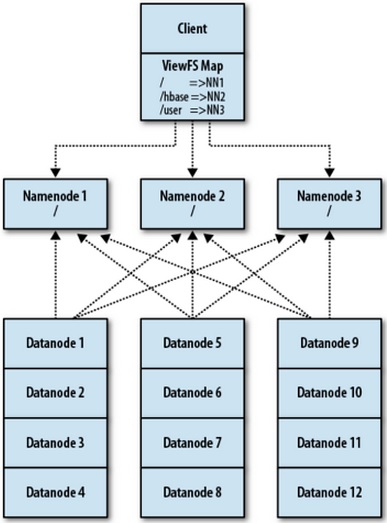
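The majority rule explains why journal nodes are usually deployed in odd numbers. A short sketch of the arithmetic, with hypothetical function names:

```python
def quorum_size(journal_nodes: int) -> int:
    """Smallest number of journal nodes that forms a majority."""
    return journal_nodes // 2 + 1


def tolerated_failures(journal_nodes: int) -> int:
    """How many journal nodes can fail while a majority stays reachable."""
    return journal_nodes - quorum_size(journal_nodes)


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, quorum_size(n), tolerated_failures(n))
```

Going from 3 to 4 journal nodes raises the quorum size without raising the number of tolerated failures, so the extra node adds cost but no resilience.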

Namenode Federation⌘

- Namenode memory limitations

- Namenode federation feature available since 0.23 release

- Horizontal scalability

- Filesystem partitioning

- ViewFS

- Federation of HA pairs

- Lab Exercise 1.2.3

- Lab Exercise 1.2.4

Namenode Federation #2⌘

Interfaces⌘

- Underlying interface:

- Java API - native Hadoop access method

- Additional interfaces:

- CLI

- C interface

- HTTP interface

- FUSE

URIs⌘

- [path] - default filesystem (HDFS when fs.defaultFS points to the namenode)

- file://[path] - local filesystem

- hdfs://[namenode]/[path] - remote HDFS filesystem

- http://[namenode]/[path] - HTTP

- viewfs://[path] - ViewFS
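The URI forms above share the usual scheme, authority and path layout, so a generic URI parser is enough to tell them apart. A minimal sketch using Python's standard library; the host name and paths in the usage example are hypothetical.

```python
from urllib.parse import urlparse


def split_uri(uri: str) -> tuple:
    """Return (scheme, authority, path); missing parts are None."""
    parsed = urlparse(uri)
    return (parsed.scheme or None, parsed.netloc or None, parsed.path)


if __name__ == "__main__":
    for uri in ("/user/data", "file:///tmp/data", "hdfs://namenode/user/data"):
        print(split_uri(uri))
```

A path without a scheme carries no filesystem information of its own, which is why the client's configuration decides where it resolves.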

Java interface⌘

- Reminder: Hadoop is written in Java

- Intended for Hadoop developers rather than Hadoop administrators

- Based on the "FileSystem" class

- Reading data:

- by streaming from Hadoop URIs

- by using Hadoop API

- Writing data:

- by streaming to Hadoop URIs

- by appending to files

Command-line interface⌘

- Shell-like commands

- All begin with the "hdfs" keyword

- No current working directory

- Example: Overriding the replication factor:

hdfs dfs -setrep [RF] [file]

Base commands⌘

- hdfs dfs -mkdir [URI] - creates a directory

- hdfs dfs -rm [URI] - removes the file

- hdfs dfs -rm -r [URI] - removes the directory recursively (replaces the deprecated -rmr)

- hdfs dfs -cat [URI] - displays the content of the file

- hdfs dfs -ls [URI] - displays the content of the directory

- hdfs dfs -cp [source URI] [destination URI] - copies the file or the directory

- hdfs dfs -mv [source URI] [destination URI] - moves the file or the directory

- hdfs dfs -du [URI] - displays the size of the file or the directory

Base commands #2⌘

- hdfs dfs -chmod [mode] [URI] - changes permissions of the file or the directory

- hdfs dfs -chown [owner] [URI] - changes the owner of the file or the directory

- hdfs dfs -chgrp [group] [URI] - changes the owner group of the file or the directory

- hdfs dfs -put [source path] [destination URI] - uploads the data into HDFS

- hdfs dfs -getmerge [source directory] [destination file] - merges files from directory into a single file

- hadoop archive -archiveName [name] [source URI]* [destination URI] - creates a Hadoop archive

- hadoop fsck [URI] - performs an HDFS filesystem check

- hadoop version - displays the Hadoop version

HTTP interface⌘

- HDFS HTTP ports:

- 50070 - namenode

- 50075 - datanode

- 50090 - secondary namenode

- Access methods:

- direct

- via proxy

FUSE⌘

- Reminder:

- HDFS is a user-space filesystem

- HDFS is a non-POSIX-compliant filesystem

- Fuse-DFS module

- Allows HDFS to be mounted under the Linux FHS (Filesystem Hierarchy Standard)

- Allows Unix tools to be used against the HDFS

- Documentation: src/contrib/fuse-dfs

Quotas⌘

- Count quotas vs space quotas:

- count quotas - limit the number of files and directories inside of the HDFS directory

- space quotas - limit the post-replication disk space utilization of the HDFS directory

- Setting quotas:

hadoop dfsadmin -setQuota [count] [path]

hadoop dfsadmin -setSpaceQuota [size] [path]

- Removing quotas:

hadoop dfsadmin -clrQuota [path]

hadoop dfsadmin -clrSpaceQuota [path]

- Viewing quotas:

hadoop fs -count -q [path]
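Because space quotas are counted after replication, a file consumes its size multiplied by the replication factor. The following is a simplified model of that accounting, with hypothetical function names and a replication factor of 3 assumed as the default; it does not reproduce how HDFS enforces quotas while a file is being written.

```python
MIB = 1024 ** 2


def space_consumed(file_size_bytes: int, replication: int = 3) -> int:
    """Post-replication disk space charged against a space quota."""
    return file_size_bytes * replication


def fits_space_quota(used_bytes: int, quota_bytes: int,
                     file_size_bytes: int, replication: int = 3) -> bool:
    """Check whether a new file still fits under the directory's space quota."""
    return used_bytes + space_consumed(file_size_bytes, replication) <= quota_bytes
```

For example, with a 1024 MiB space quota, a 300 MiB file fits (900 MiB after replication) while a 400 MiB file does not (1200 MiB).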

Limitations⌘

- Low-latency data access:

- tens of milliseconds

- alternatives: HBase, Cassandra

- Lots of small files

- limited by namenode's physical memory

- inefficient storage utilization due to large block size

- Multiple writers, arbitrary file modifications:

- single writer

- append operations only

First Day - Session III⌘

Legacy architecture⌘

- Historically, there had been only one data processing paradigm for Hadoop - MapReduce

- Hadoop with MRv1 architecture consisted of two core components: HDFS and MapReduce

- MapReduce component was responsible for cluster resources management and MapReduce jobs execution

- As other data processing paradigms have become available, Hadoop with MRv2 (YARN) was developed

Goals and motivation⌘

- Segregation of services:

- single MapReduce service for resource and job management purposes

- separate YARN services for resource and job management purposes

- Scalability:

- MapReduce framework hits scalability limitations in clusters consisting of 5000 nodes

- YARN framework doesn’t hit scalability limitations in clusters consisting of 40000 nodes

- Flexibility:

- MapReduce framework is capable of executing MapReduce jobs only

- YARN framework is capable of executing any jobs

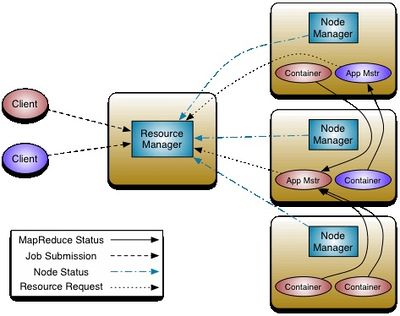

Daemons⌘

- ResourceManager:

- responsible for cluster resources management

- consists of a scheduler and an application manager component

- 1 active per cluster

- NodeManager:

- responsible for node resources management, jobs execution and tasks execution

- provides containers abstraction

- many per cluster

- JobHistoryServer:

- responsible for serving information about completed jobs

- 1 active per cluster

Design⌘

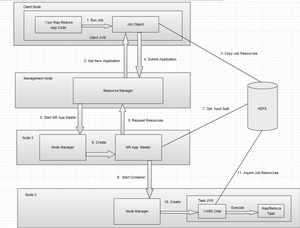

YARN job stages⌘

- A client retrieves an application ID from the resource manager

- The client calculates input splits and writes the job resources (e.g. jar file) into HDFS

- The client submits the job by calling the submitApplication() method on the resource manager

- The resource manager allocates a container for the job execution purpose and launches the application master process inside the container

- The application master process initializes the job and retrieves job resources from HDFS

- The application master process requests the resource manager for containers allocation

- The resource manager allocates containers for tasks execution purpose

- The application master process requests node managers to launch JVMs inside the allocated containers

- Containers retrieve job resources and data from HDFS

- Containers periodically report progress and status updates to the application master process

- The client periodically polls the application master process for progress and status updates

YARN job stages #2⌘

ResourceManager high availability⌘

- ResourceManager - SPOF

- High Availability type: active / passive

- Based on: ZooKeeper

- Failover type: manual or automatic with ZKFC

- ZKFC daemon embedded in the ResourceManager code

- STONITH

- Fencing methods

Resources configuration⌘

- Allocated resources:

- physical memory

- virtual cores

- Resources configuration (yarn-site.xml):

- yarn.nodemanager.resource.memory-mb - available memory in megabytes

- yarn.nodemanager.resource.cpu-vcores - available number of virtual cores

- yarn.scheduler.minimum-allocation-mb - minimum allocated memory in megabytes

- yarn.scheduler.minimum-allocation-vcores - minimum number of allocated virtual cores

- yarn.scheduler.increment-allocation-mb - increment memory in megabytes into which the request is rounded up

- yarn.scheduler.increment-allocation-vcores - increment number of virtual cores into which the request is rounded up

- Lab Exercise 1.3.1
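The minimum and increment settings above determine how a container request is rounded. A minimal sketch of that rounding for memory, assuming the request is first raised to the minimum and then rounded up to a multiple of the increment; the function names and the values in the usage example are hypothetical, and the exact behaviour depends on the scheduler in use.

```python
import math


def normalize_request(requested_mb: int, minimum_mb: int, increment_mb: int) -> int:
    """Raise the request to the minimum, then round up to the next increment."""
    granted = max(requested_mb, minimum_mb)
    return math.ceil(granted / increment_mb) * increment_mb


def containers_per_node(node_memory_mb: int, container_mb: int) -> int:
    """How many containers of a given size fit into a node's available memory."""
    return node_memory_mb // container_mb


if __name__ == "__main__":
    size = normalize_request(1100, minimum_mb=1024, increment_mb=512)
    print(size, containers_per_node(8192, size))
```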

First Day - Session IV⌘

Goals and motivation⌘

- Automatic parallelism and distribution of work:

- developers are required to only write simple map and reduce functions

- distribution and parallelism are handled by the MapReduce framework

- Data locality:

- computation operations are performed on data local to the computing node

- data transfer over the network is reduced to an absolute minimum

- Fault tolerance:

- jobs high availability

- tasks distribution

- Scalability:

- horizontal scalability

Design⌘

- Fully automated parallel data processing paradigm

- Jobs abstraction as an atomic unit

- Jobs operate on key-value pairs

- Job components:

- map tasks

- reduce tasks

- Benefits:

- simplicity of development

- fast data processing times

MapReduce by analogy⌘

- Lab Exercise 1.4.1

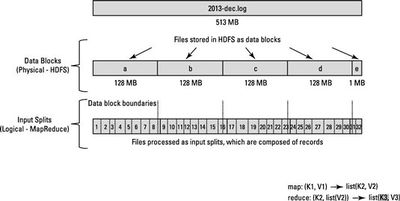

Input splits vs HDFS blocks⌘

Sample data⌘

- http://academic.udayton.edu/kissock/http/Weather/gsod95-current/allsites.zip

    1 1 1995 82.4
    1 2 1995 75.1
    1 3 1995 73.7
    1 4 1995 77.1
    1 5 1995 79.5
    1 6 1995 71.3
    1 7 1995 71.4
    1 8 1995 75.2
    1 9 1995 66.3
    1 10 1995 61.8

Sample map function⌘

- Function:

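    #!/usr/bin/perl
    # Map function: groups temperature readings by year.
    use strict;
    use warnings;

    my %results;
    while (my $line = <>)
    {
        # Capture the 4-digit year and the decimal temperature; skip lines that do not match.
        next unless $line =~ m/.*(\d{4}).*\s(\d+\.\d+)/;
        push(@{$results{$1}}, $2);
    }
    # Emit one "year: temperature1 temperature2 ..." line per year.
    foreach my $year (sort keys %results)
    {
        print "$year: ";
        foreach my $temperature (@{$results{$year}})
        {
            print "$temperature ";
        }
        print "\n";
    }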

- Function output:

year: temperature1 temperature2 ...

Sample reduce function⌘

- Function:

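    #!/usr/bin/perl
    # Reduce function: prints the maximum temperature for each year.
    use strict;
    use warnings;
    use List::Util qw(max);

    my %results;
    while (my $line = <>)
    {
        # Expect "year: temperature1 temperature2 ..."; skip lines that do not match.
        next unless $line =~ m/(\d{4}):\s(.*)/;
        # Append rather than overwrite, so a year emitted by several map tasks keeps all its values.
        push(@{$results{$1}}, split(' ', $2));
    }
    foreach my $year (sort keys %results)
    {
        my $temperature = max(@{$results{$year}});
        print "$year: $temperature\n";
    }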

- Function output:

year: temperature
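The same maximum-temperature job can be simulated in a single process to show how the map output is grouped before the reduce step. This is only an illustrative sketch in Python, not a translation of the Perl scripts above: the function names are hypothetical, and the input is the sample data shown earlier, one "month day year temperature" record per line.

```python
from collections import defaultdict

SAMPLE = """\
1 1 1995 82.4
1 2 1995 75.1
1 3 1995 73.7
1 4 1995 77.1
1 5 1995 79.5
1 6 1995 71.3
1 7 1995 71.4
1 8 1995 75.2
1 9 1995 66.3
1 10 1995 61.8
"""


def map_fn(line: str) -> tuple:
    """Emit a (year, temperature) pair for one input record."""
    _month, _day, year, temperature = line.split()
    return year, float(temperature)


def reduce_fn(year: str, temperatures: list) -> tuple:
    """Emit the maximum temperature recorded for a year."""
    return year, max(temperatures)


def run_job(lines) -> list:
    grouped = defaultdict(list)
    for line in lines:
        if line.strip():
            year, temperature = map_fn(line)
            grouped[year].append(temperature)  # stands in for shuffle and sort
    return [reduce_fn(year, grouped[year]) for year in sorted(grouped)]


if __name__ == "__main__":
    print(run_job(SAMPLE.splitlines()))
```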

Shuffle and sort phase⌘

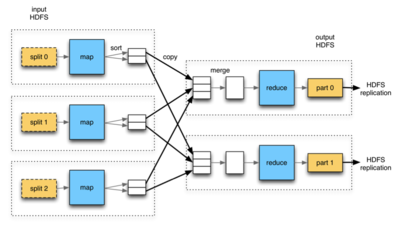

Map task in detail⌘

- Map function:

- takes key-value pair

- returns key-value pair

- Input key-value pair: file - input split

- Output key-value pair: defined by the map function

- Output key-value pairs are sorted by the key

- Partitioner assigns each key-value pair to a partition

Reduce task in detail⌘

- Reduce function:

- takes key-value pair

- returns key-value pair

- Input key-value pair: returned by the map function

- Output key-value pair: final result

- Input is received in the shuffle and sort phase

- Each reducer is assigned to one or more partitions

- Reducer starts copying the data from partitions as soon as they are written
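The interplay between the partitioner and the shuffle and sort phase can be sketched in a few lines. This is an illustration only: the CRC32 hash is an assumption standing in for whatever hash the framework applies to a key, and the function names are hypothetical.

```python
import zlib
from collections import defaultdict


def partition(key: str, num_reducers: int) -> int:
    """Assign a key to a partition; a stable hash keeps equal keys together."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle_and_sort(map_output, num_reducers: int) -> list:
    """Group map output by partition, then by key, with keys sorted per partition."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in map_output:
        partitions[partition(key, num_reducers)][key].append(value)
    return [sorted(p.items()) for p in partitions]
```

Each element of the returned list is the input of one reducer: every key appears in exactly one partition, together with all of its values.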

Combine function⌘

- Executed by the map task

- Executed just after the map function

- Most often the same as the reduce function

- Lab Exercise 1.4.2
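For the maximum-temperature example, a combine function can reuse the reduce logic because taking a maximum of partial maxima gives the same result as taking the maximum of all values. A minimal sketch with a hypothetical function name:

```python
def combine(map_output) -> list:
    """Collapse one map task's output to a single maximum per key."""
    best = {}
    for year, temperature in map_output:
        if year not in best or temperature > best[year]:
            best[year] = temperature
    return sorted(best.items())
```

Running this on the map side means each map task sends at most one pair per year over the network instead of one pair per record. The shortcut does not hold for every function; an average of partial averages, for instance, is generally not the overall average.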

Java MapReduce⌘

- MapReduce jobs are written in Java

- org.apache.hadoop.mapreduce namespace:

- Mapper class and map() function

- Reducer class and reduce() function

- MapReduce jobs are executed by the hadoop program

- Sample Java MapReduce job available here [[1]]

- Sample Java MapReduce job execution:

hadoop [MapReduce program path] [input data path] [output data path]

Streaming⌘

- Uses the hadoop-streaming.jar library

- Supports all languages capable of reading from the stdin and writing to the stdout

- Sample streaming MapReduce job execution:

    hadoop jar [hadoop-streaming.jar path] \
        -input [input data path] \
        -output [output data path] \
        -mapper [map function path] \
        -reducer [reduce function path] \
        -combiner [combiner function path] \
        -file [attached files path]

Pipes⌘

- Supports all languages capable of reading from and writing to network sockets

- Mostly applicable for C and C++ languages

- Sample piped MapReduce job execution:

    hadoop pipes \
        -D hadoop.pipes.java.recordreader=true \
        -D hadoop.pipes.java.recordwriter=true \
        -input [input data path] \
        -output [output data path] \
        -program [MapReduce program path] \
        -file [attached files path]

Limitations⌘

- Batch processing system:

- MapReduce jobs run on the order of minutes or hours

- no support for low-latency data access

- Simplicity:

- optimized for full table scan operations

- no support for data access optimization

- Too low-level:

- raw data processing functions

- alternatives: Pig, Hive

- Not all algorithms can be parallelised:

- algorithms with shared state

- algorithms with dependent variables

Second Day - Session I⌘

Hadoop installation in pseudo-distributed mode⌘

- Lab Exercise 2.1.1

- Lab Exercise 2.1.2

- Lab Exercise 2.1.3

- Lab Exercise 2.1.4

Second Day - Session II⌘

Running MapReduce jobs in pseudo-distributed mode⌘

- Lab Exercise 2.2.1

- Lab Exercise 2.2.2

- Lab Exercise 2.2.3

- Lab Exercise 2.2.4

- Lab Exercise 2.2.5

- Lab Exercise 2.2.6

Second Day - Session III⌘

Pig - Goals and motivation⌘

- Developed by Yahoo

- Simplicity of development:

- although MapReduce programs themselves are simple, designing complex regular expressions may be challenging and time-consuming

- Pig offers much richer data structures for pattern matching purposes

- Length and clarity of the source code:

- MapReduce programs are usually long and hard to comprehend

- Pig programs are usually short and understandable

- Simplicity of execution:

- MapReduce programs require compiling, packaging and submitting

- Pig programs can be executed ad-hoc from an interactive shell

Pig - Design⌘

- Pig components:

- Pig Latin - scripting language for data flows

- each program is made up of a series of transformations applied to the input data

- each transformation is made up of a series of MapReduce jobs run on the input data

- Pig Latin programs execution environment:

- launched from a single JVM

- execution distributed over the Hadoop cluster

Pig - relational operators⌘

- LOAD - loads the data from a filesystem or other storage into a relation

- STORE - saves the relation to a filesystem or other storage

- DUMP - prints the relation to the console

- FILTER - removes unwanted rows from the relation

- DISTINCT - removes duplicate rows from the relation

- SAMPLE - selects a random sample from the relation

- JOIN - joins two or more relations

- GROUP - groups the data in a single relation

- ORDER - sorts the relation by one or more fields

- LIMIT - limits the size of the relation to a maximum number of tuples

- and more

Pig - mathematical and logical operators⌘

- literal - constant / variable

- $n - n-th column

- x + y / x - y - addition / subtraction

- x * y / x / y - multiplication / division

- x == y / x != y - equals / not equals

- x > y / x < y - greater than / less than

- x >= y / x <= y - greater than or equal to / less than or equal to

- x matches y - pattern matching with regular expressions

- x is null - is null

- x is not null - is not null

- x or y - logical or

- x and y - logical and

- not x - logical negation

Pig - types⌘

- int - 32-bit signed integer

- long - 64-bit signed integer

- float - 32-bit floating-point number

- double - 64-bit floating-point number

- chararray - character array in UTF-16 format

- bytearray - byte array

- tuple - sequence of fields of any type

- map - set of key-value pairs:

- key - character array

- value - any type

Pig - Exercises⌘

- Lab Exercise 2.3.1

Hive - Goals and Motivation⌘

- Developed by Facebook

- Data structurization:

- HDFS stores data in an unstructured format

- Hive stores data in a structured format

- SQL interface:

- HDFS does not provide the SQL interface

- Hive provides an SQL-like interface

Hive - Design⌘

- Metastore:

- stores Hive metadata

- RDBMS database:

- embedded: Derby

- local: MySQL, PostgreSQL, etc.

- remote: MySQL, PostgreSQL, etc.

- HiveQL:

- used to read data by accepting queries and translating them to a series of MapReduce jobs

- used to write data by uploading them into HDFS and updating the Metastore

- Interfaces:

- CLI

- Web GUI

- ODBC

- JDBC

Hive - Data Types⌘

- TINYINT - 1-byte signed integer

- SMALLINT - 2-byte signed integer

- INT - 4-byte signed integer

- BIGINT - 8-byte signed integer

- FLOAT - 4-byte floating-point number

- DOUBLE - 8-byte floating-point number

- BOOLEAN - true/false value

- STRING - character string

- BINARY - byte array

- MAP - an unordered collection of key-value pairs

Hive - SQL vs HiveQL⌘

| Feature | SQL | HiveQL |
|---|---|---|
| Updates | UPDATE, INSERT, DELETE | INSERT OVERWRITE TABLE |
| Transactions | Supported | Not supported |
| Indexes | Supported | Not supported |
| Latency | Sub-second | Minutes |
| Multitable inserts | Partially supported | Supported |
| Create table as select | Partially supported | Supported |
| Views | Updatable | Read-only |

Hive - Exercises⌘

- Lab Exercise 2.3.2

Sqoop - Goals and Motivation⌘

- Cooperation with RDBMS:

- RDBMS are widely used for data storage

- need for importing / exporting data from RDBMS to HDFS and vice versa

- Data import / export flexibility:

- manual data import / export using HDFS CLI, Pig or Hive

- automatic data import / export using Sqoop

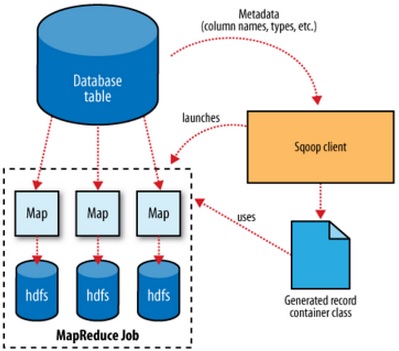

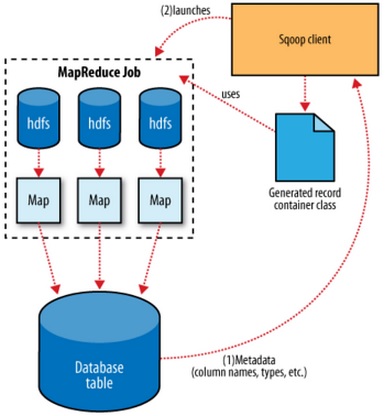

Sqoop - Design⌘

- JDBC - used to connect to the RDBMS

- SQL - used to retrieve / populate data from / to RDBMS

- MapReduce - used to retrieve / populate data from / to HDFS

- Sqoop Code Generator - used to create table-specific class to hold records extracted from the table

Sqoop - Import process⌘

Sqoop - Export process⌘

Sqoop - Exercises⌘

- Lab Exercise 2.3.3

HBase - Goals and Motivation⌘

- Designed by Google (BigTable)

- Developed by Apache

- Interactive queries:

- MapReduce, Pig and Hive are batch processing frameworks

- HBase is a real-time processing framework

- Data structurization:

- HDFS stores data in an unstructured format

- HBase stores data in a semi-structured format

HBase - Design⌘

- Runs on the top of HDFS

- Used to be considered a core Hadoop component

- Key-value NoSQL database

- Columnar storage

- Metadata stored in the -ROOT- and .META. tables

- Data can be accessed interactively or using MapReduce

HBase - Architecture⌘

- Tables are distributed across the cluster

- Tables are automatically partitioned horizontally into regions

- Tables consist of rows and columns

- Table cells are versioned (by a timestamp by default)

- Table cell type is an uninterpreted array of bytes

- Table rows are sorted by the row key which is the table's primary key

- Table columns are grouped into column families

- Table column families must be defined on the table creation stage

- Table columns can be added on the fly if the column family exists
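The table model described above can be pictured as a sorted, nested map: row key, then column, then timestamp. The following is a toy in-memory sketch of that model, not the HBase client API; the class, its methods and the "family:qualifier" column naming are used here for illustration only.

```python
from collections import defaultdict


class Table:
    """Toy model: row key -> "family:qualifier" -> {timestamp: value}."""

    def __init__(self, column_families):
        # Column families are fixed when the table is created.
        self.column_families = set(column_families)
        self.rows = defaultdict(lambda: defaultdict(dict))

    def put(self, row_key: bytes, column: str, value: bytes, timestamp: int) -> None:
        family = column.split(":", 1)[0]
        if family not in self.column_families:
            raise KeyError(f"unknown column family: {family}")
        # Columns inside an existing family can be added on the fly.
        self.rows[row_key][column][timestamp] = value

    def get(self, row_key: bytes, column: str) -> bytes:
        """Return the newest version of a cell."""
        versions = self.rows[row_key][column]
        return versions[max(versions)]

    def scan(self) -> list:
        """Row keys in sorted order, as rows are stored sorted by row key."""
        return sorted(self.rows)
```

Values and row keys are plain bytes in this sketch, mirroring the point that cell contents are uninterpreted byte arrays.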

HBase - Exercises⌘

- Lab Exercise 2.3.4

Flume - Goals and motivation⌘

- Streaming data:

- HDFS does not have any built-in mechanisms for handling streaming data flows

- Flume is designed to collect, aggregate and move streaming data flows into HDFS

- Data queueing:

- when writing directly to HDFS, data can be lost during spike periods

- Flume is designed to buffer data during spike periods

- Guarantee of data delivery:

- Flume is designed to guarantee delivery of the data by using single-hop message delivery semantics

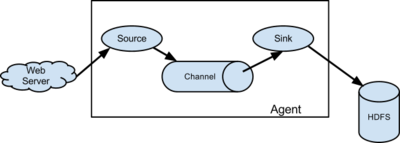

Flume - Design⌘

- Event - unit of data that Flume can transport

- Flow - movement of the events from a point of origin to their final destination

- Client - an interface implementation that operates at the point of origin of the events

- Agent - an independent process that hosts Flume components such as sources, channels and sinks

- Source - an interface implementation that can consume the events delivered to it and deliver them to channels

- Channel - a transient store for the events, which are delivered by the sources and removed by sinks

- Sink - an interface implementation that can remove the events from the channel and transmit them further
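The relationship between a source, a channel and a sink inside one agent can be sketched with a bounded queue. This is a conceptual illustration only, not Flume's API: the class and function names, the event layout and the capacity are all hypothetical.

```python
from collections import deque


class Channel:
    """Transient, bounded store that buffers events between a source and a sink."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.events = deque()

    def put(self, event: dict) -> None:
        if len(self.events) >= self.capacity:
            raise OverflowError("channel is full")
        self.events.append(event)

    def take(self):
        """Remove and return the oldest event, or None when the channel is empty."""
        return self.events.popleft() if self.events else None


def source(lines, channel: Channel) -> None:
    """Consume incoming data and deliver it to the channel as events."""
    for line in lines:
        channel.put({"body": line})


def sink(channel: Channel, destination: list) -> None:
    """Remove events from the channel and transmit them further."""
    while (event := channel.take()) is not None:
        destination.append(event["body"])
```

The channel decouples the arrival rate from the delivery rate, which is what lets an agent absorb short spikes.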

Flume - Design #2⌘

Flume - Closer look⌘

- Flume sources:

- Avro, HTTP, Syslog, etc.

- https://flume.apache.org/FlumeUserGuide.html#flume-sources

- Sink types:

- regular - transmit the event to another agent

- terminal - transmit the event to its final destination

- Flow pipeline:

- from one source to one destination

- from multiple sources to one destination

- from one source to multiple destinations

- from multiple sources to multiple destinations

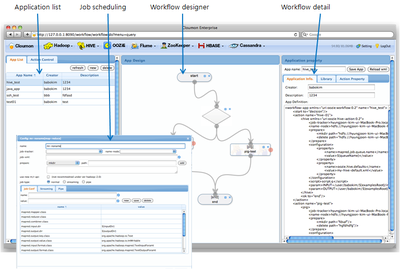

Oozie - Goals and motivation⌘

- Hadoop jobs execution:

- Hadoop clients execute Hadoop jobs from the CLI using the hadoop command

- Oozie provides a web-based GUI for Hadoop jobs definition and execution

- Hadoop jobs management:

- Hadoop doesn't provide any built-in mechanism for jobs management (e.g. forks, merges, decisions, etc.)

- Oozie provides Hadoop jobs management feature based on a control dependency DAG

Oozie - Design⌘

- Oozie - workflow scheduling system

- Oozie workflow

- control flow nodes:

- define the beginning and the end of the workflow

- provide a mechanism of controlling the workflow execution path

- action nodes:

- are mechanisms by which the workflow triggers an execution of Hadoop jobs

- supported job types include Java MapReduce, Pig, Hive, Sqoop and more
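A workflow built from control flow nodes and action nodes forms a DAG, and a valid execution order is any topological ordering of it. The sketch below uses Python's standard `graphlib` module (Python 3.9+) rather than Oozie itself; the node names are hypothetical, and each node maps to the set of nodes that must finish before it.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: node -> set of nodes it depends on.
WORKFLOW = {
    "start": set(),
    "sqoop-import": {"start"},
    "pig-clean": {"sqoop-import"},       # fork: two actions follow the import
    "hive-report": {"sqoop-import"},
    "join": {"pig-clean", "hive-report"},
    "end": {"join"},
}


def execution_order(workflow: dict) -> list:
    """Return one order in which every node runs after its dependencies."""
    return list(TopologicalSorter(workflow).static_order())


if __name__ == "__main__":
    print(execution_order(WORKFLOW))
```

The two actions after the fork have no dependency on each other, so a scheduler is free to run them in parallel.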

Oozie - Web GUI⌘

Second Day - Session IV⌘

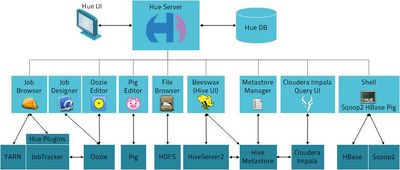

Hue - Goals and motivation⌘

- Hadoop cluster management:

- each Hadoop component is managed independently

- Hue provides a centralized Hadoop components management tool

- Hadoop components are mostly managed from the CLI

- Hue provides a web-based GUI for Hadoop components management

- Open Source:

- other cluster management tools (e.g. Cloudera Manager) are commercial and closed-source

- Hue is a free, open-source tool

Hue - Design⌘

- Hue components:

- Hue server - web application's container

- Hue database - web application's data

- Hue UI - web user interface application

- REST API - for communication with Hadoop components

- Supported browsers:

- Google Chrome

- Mozilla Firefox 17+

- Internet Explorer 9+

- Safari 5+

- Lab Exercise 2.4.1

Hue - Design #2⌘

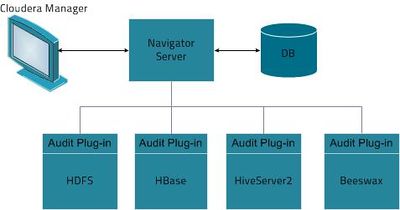

Cloudera Manager - Goals and motivation⌘

- Hadoop cluster deployment and configuration:

- Hadoop components need to be deployed and configured manually

- Cloudera Manager provides an automatic deployment and configuration of Hadoop components

- Hadoop cluster management:

- each Hadoop component is managed independently

- Cloudera Manager provides a centralized Hadoop components management tool

- Hadoop components are mostly managed from the CLI

- Cloudera Manager provides a web-based GUI for Hadoop components management

Cloudera Manager - Design⌘

- Cloudera Manager versions:

- Express - deployment, configuration, management, monitoring and diagnostics

- Enterprise - advanced management features and support

- Cloudera Manager components:

- Cloudera Manager Server - web application's container and core Cloudera Manager engine

- Cloudera Manager Database - web application's data and monitoring information

- Admin Console - web user interface application

- Agent - installed on every component in the cluster

- REST API - for communication with Agents

- Lab Exercise 2.4.2

- Lab Exercise 2.4.3

- Lab Exercise 2.4.4

Cloudera Manager - Design #2⌘

Impala - Goals and Motivation⌘

- Inspired by Google's Dremel

- Developed by Cloudera

- Interactive queries:

- MapReduce, Pig and Hive are batch processing frameworks

- Impala is a real-time processing framework

- Data structurization:

- HDFS stores data in an unstructured format

- HBase and Cassandra store data in a semi-structured format

- Impala stores data in a structured format

- SQL interface:

- HDFS does not provide the SQL interface

- HBase and Cassandra do not provide the SQL interface by default

- Impala provides the SQL interface

Impala - Design⌘

- Runs on top of HDFS or HBase

- Columnar storage

- Uses Hive metastore

- Implements SQL tables and queries

- Interfaces:

- CLI

- web GUI

- ODBC

- JDBC

Impala - Daemons⌘

- Impala Daemon:

- performs read and write operations

- accepts queries and returns query results

- parallelizes queries and distributes the work

- Impala Statestore:

- checks health of the Impala Daemons

- reports detected failures to other nodes in the cluster

- Impala Catalog Server:

- relays metadata changes to all nodes in the cluster

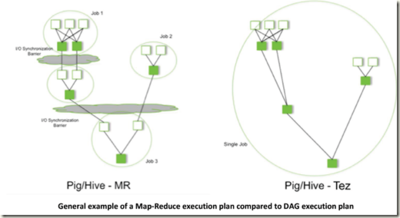

Tez - Goals and Motivation⌘

- Interactive queries:

- MapReduce is a batch processing framework

- Tez is a real-time processing framework

- Job types:

- MapReduce is intended for key-value-based data processing

- Tez is intended for DAG-based data processing

Tez - Design⌘

Spark⌘

- Fast and general engine for large-scale data processing.

- Runs programs up to 100x faster than Hadoop MapReduce in memory, or 10x faster on disk.

- Supports applications written in Java, Scala, Python, R.

- Combines SQL, streaming, and complex analytics.

- Runs on Hadoop, Mesos, standalone, or in the cloud.

- Can access diverse data sources including HDFS, Cassandra, HBase, and S3.

NoSQL⌘

- "Not only SQL" databases

- key-value store concept

- fast and easy

- examples:

- MongoDB, https://www.mongodb.org/

- Redis, http://redis.io/

- Couchbase, http://www.couchbase.com/

Third Day - Session I⌘

Picking up a distribution⌘

- Considerations:

- license cost

- open / closed source

- Hadoop version

- available features

- supported OS types

- ease of deployment

- integrity

- support

Hadoop releases⌘

- Release notes: http://hadoop.apache.org/releases.html

Why CDH?⌘

- Free

- Open source

- Stable (always based on stable Hadoop releases)

- Wide range of available features

- Support for RHEL, SUSE and Debian

- Easy to deploy

- Very well integrated

- Very well supported

Hardware selection⌘

- Considerations:

- CPU

- RAM

- storage IO

- storage capacity

- storage resilience

- network bandwidth

- network resilience

- hardware resilience

Hardware selection #2⌘

- Master nodes:

- namenodes

- resource managers

- secondary namenode

- Worker nodes

- Network devices

- Supporting nodes

Namenode hardware selection⌘

- CPU: quad-core 2.6 GHz

- RAM: 24-96 GB DDR3 (1 GB per 1 million blocks)

- Storage IO: SAS / SATA II

- Storage capacity: less than 1 TB

- Storage resilience: RAID

- Network bandwidth: 2 Gb/s

- Network resilience: LACP

- Hardware resilience: non-commodity hardware
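The RAM rule of thumb above (1 GB per 1 million blocks) can be turned into a rough sizing estimate. This is a minimal sketch, assuming every file fills its blocks completely and that the default block size is 64 MB; the function name is hypothetical, and clusters with many small files will need more memory than this suggests.

```python
import math

def estimate_namenode_heap_gb(data_bytes: int,
                              block_size_bytes: int = 64 * 1024**2) -> float:
    """Apply the slide's rule of thumb: 1 GB of RAM per 1 million blocks."""
    # Best case: files are large, so block count is simply data / block size.
    blocks = math.ceil(data_bytes / block_size_bytes)
    return blocks / 1_000_000

# 1 PiB of file data stored in 64 MiB blocks
print(estimate_namenode_heap_gb(1024**5))
```

Doubling the block size halves the block count, which is one reason larger block sizes are attractive on big clusters.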

ResourceManager hardware selection⌘

- CPU: quad-core 2.6 GHz

- RAM: 24-96 GB DDR3 (unpredictable)

- Storage IO: SAS / SATA II

- Storage capacity: less than 1 TB

- Storage resilience: RAID

- Network bandwidth: 2 Gb/s

- Network resilience: LACP

- Hardware resilience: non-commodity hardware

Secondary namenode hardware selection⌘

- Almost always identical to namenode

- The same requirements for RAM, storage capacity and desired resilience level

- Very often used as replacement hardware in case of namenode failure

Worker hardware selection⌘

- CPU: 2 * 6 core 2.9 GHz

- RAM: 64-96 GB DDR3

- Storage IO: SAS / SATA II

- Storage capacity: 24-36 TB JBOD

- Storage resilience: none

- Network bandwidth: 1-10 Gb/s

- Network resilience: none

- Hardware resilience: commodity hardware

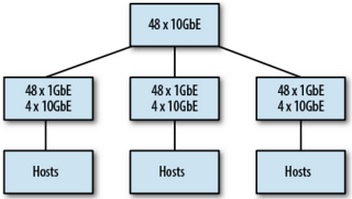

Network devices hardware selection⌘

- Downlink bandwidth: 1-10 Gb/s

- Uplink bandwidth: 10 Gb/s

- Computing resources: vendor-specific

- Resilience: by network topology

Supporting nodes hardware selection⌘

- Backup system

- Monitoring system

- Security system

- Logging system

Cluster growth planning⌘

- Considerations:

- replication factor: 3 by default

- temporary data: 20-30% of the worker storage space

- data growth: based on trends analysis

- the amount of data being analyzed: based on cluster requirements

- usual task RAM requirements: 2-4 GB

- Lab Exercise 3.1.1

- Lab Exercise 3.1.2
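The considerations above can be combined into a back-of-the-envelope capacity estimate. The sketch below assumes a replication factor of 3, 25% of worker disk set aside for temporary data (the middle of the 20-30% range) and 24 TB of disk per worker; the function names and the 100 TB input are illustrative only.

```python
import math

def raw_storage_needed_tb(data_tb: float, replication: int = 3,
                          temp_fraction: float = 0.25) -> float:
    """Raw disk required so that replicated data fits in the non-temporary share."""
    return data_tb * replication / (1.0 - temp_fraction)

def workers_needed(data_tb: float, disk_per_worker_tb: float = 24,
                   replication: int = 3, temp_fraction: float = 0.25) -> int:
    """Number of worker nodes, rounded up to whole machines."""
    raw = raw_storage_needed_tb(data_tb, replication, temp_fraction)
    return math.ceil(raw / disk_per_worker_tb)

print(raw_storage_needed_tb(100))  # raw TB for 100 TB of user data
print(workers_needed(100))         # workers with 24 TB of disk each
```

Data growth can be folded in by calling the functions with the projected data volume rather than the current one.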

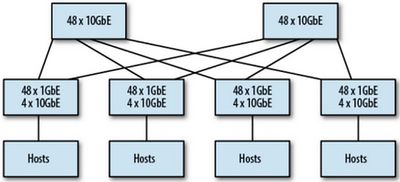

Network topologies⌘

- Hadoop East/West traffic flow design

- Network topologies:

- Leaf

- Spine

- Inter data center replication

- Lab Exercise 3.1.3

Leaf topology⌘

Spine topology⌘

Blades, SANs, RAIDs and virtualization⌘

- Blades considerations:

- Hadoop is designed to work on commodity hardware

- Hadoop cluster scales out rather than up

- SANs considerations:

- Hadoop is designed for processing local data

- HDFS is distributed and shared by design

- RAIDs considerations:

- Hadoop is designed to provide data durability

- RAID introduces additional limitations and overhead

- Virtualization considerations:

- Hadoop worker nodes don't benefit from virtualization

- Hadoop is a clustering solution, which is the opposite of virtualization

Hadoop directories⌘

| Directory | Linux path | Grows? |

|---|---|---|

| Hadoop install directory | /usr/* | no |

| Namenode metadata directory | defined by an administrator | yes |

| Secondary namenode metadata directory | defined by an administrator | yes |

| Datanode data directory | defined by an administrator | yes |

| MapReduce local directory | defined by an administrator | no |

| Hadoop log directory | /var/log/* | yes |

| Hadoop pid directory | /var/run/hadoop | no |

| Hadoop temp directory | /tmp | no |

Filesystems⌘

- LVM (Logical Volume Manager) - HDFS killer

- Considerations:

- extent-based design

- burn-in time

- Winners:

- EXT4

- XFS

- Lab Exercise 3.1.4

Third Day - Session II and III⌘

Underpinning software⌘

- Prerequisites:

- JDK (Java Development Kit)

- Additional software:

- Cron daemon

- NTP client

- SSH server

- MTA (Mail Transport Agent)

- RSYNC tool

- NMAP tool

Hadoop installation⌘

- Should be performed as the root user

- Installation instructions are distribution-dependent

- Installation types:

- manual installation

- automated installation

- Installation sources:

- packages

- tarballs

- source code

Hadoop package content⌘

- /etc/hadoop - configuration files

- /etc/rc.d/init.d - SYSV init scripts for Hadoop daemons

- /usr/bin - executables

- /usr/include/hadoop - C++ header files for Hadoop pipes

- /usr/lib - C libraries

- /usr/libexec - miscellaneous files used by various libraries and scripts

- /usr/sbin - administrator scripts

- /usr/share/doc/hadoop - License, NOTICE and README files

CDH-specific additions⌘

- Binaries for Hadoop Ecosystem

- Use of alternatives system for Hadoop configuration directory:

- /etc/alternatives/hadoop-conf

- Default nofile and nproc limits:

- /etc/security/limits.d

Hadoop configuration⌘

- hadoop-env.sh - environment variables

- core-site.xml - all daemons

- hdfs-site.xml - HDFS daemons

- mapred-site.xml - MapReduce daemons

- log4j.properties - logging information

- masters (optional) - list of servers which run secondary namenode daemon

- slaves (optional) - list of servers which run datanode and tasktracker daemons

- fair-scheduler.xml (optional) - fair scheduler configuration and properties

- capacity-scheduler.xml (optional) - capacity scheduler configuration and properties

- dfs.include (optional) - list of servers permitted to connect to the namenode

- dfs.exclude (optional) - list of servers not permitted to connect to the namenode

- hadoop-policy.xml - user / group permissions for RPC functions execution

- mapred-queue-acl.xml - user / group permissions for jobs submission

Hadoop XML files structure⌘

<?xml version="1.0"?>

<configuration>

<property>

<name>[name]</name>

<value>[value]</value>

(<final>{true | false}</final>)

</property>

</configuration>
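Because every *-site.xml file follows this structure, it can be read with a generic XML parser. The following is a minimal sketch using Python's standard library; the sample document and the function name are illustrative, not part of Hadoop.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode.hadooplab:8020</value>
    <final>true</final>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>65536</value>
  </property>
</configuration>
"""

def parse_hadoop_config(xml_text: str) -> dict:
    """Return {name: (value, is_final)} for every <property> element."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        # <final> is optional; a missing element is treated as false.
        is_final = (prop.findtext("final") or "false").strip().lower() == "true"
        props[name] = (value, is_final)
    return props

for name, (value, is_final) in parse_hadoop_config(SAMPLE).items():
    print(name, value, is_final)
```

A script like this can help compare configuration files across nodes before a rollout.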

Hadoop configuration⌘

- Parameter: fs.defaultFS

- Configuration file: core-site.xml

- Purpose: specifies the default filesystem used by clients

- Format: Hadoop URL

- Used by: NN, SNN, DN, RM, NM, clients

- Example:

<property>

<name>fs.defaultFS</name>

<value>hdfs://namenode.hadooplab:8020</value>

</property>

Hadoop configuration⌘

- Parameter: io.file.buffer.size

- Configuration file: core-site.xml

- Purpose: specifies the size of the IO buffer

- Guideline: 64 KB

- Format: integer

- Used by: NN, SNN, DN, RM, NM, clients

- Example:

<property>

<name>io.file.buffer.size</name>

<value>65536</value>

</property>

Hadoop configuration⌘

- Parameter: fs.trash.interval

- Configuration file: core-site.xml

- Purpose: specifies how long (in minutes) a deleted file is retained in the .Trash directory

- Format: integer

- Used by: NN, clients

- Example:

<property>

<name>fs.trash.interval</name>

<value>60</value>

</property>

Hadoop configuration⌘

- Parameter: ha.zookeeper.quorum

- Configuration file: core-site.xml

- Purpose: specifies the ZooKeeper quorum used by the ZKFCs for automatic jobtracker failover

- Format: comma separated list of hostname:port pairs

- Used by: JT

- Example:

<property>

<name>ha.zookeeper.quorum</name>

<value>jobtracker1.hadooplab:2181,jobtracker2.hadooplab:2181</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.name.dir

- Configuration file: hdfs-site.xml

- Purpose: specifies where the namenode should store a copy of the HDFS metadata

- Format: comma separated list of Hadoop URLs

- Used by: NN

- Example:

<property>

<name>dfs.name.dir</name>

<value>file:///namenodedir,file:///mnt/nfs/namenodedir</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.data.dir

- Configuration file: hdfs-site.xml

- Purpose: specifies where the datanode should store HDFS data

- Format: comma separated list of Hadoop URLs

- Used by: DN

- Example:

<property>

<name>dfs.data.dir</name>

<value>file:///datanodedir1,file:///datanodedir2</value>

</property>

Hadoop configuration⌘

- Parameter: fs.checkpoint.dir

- Configuration file: hdfs-site.xml

- Purpose: specifies where the secondary namenode should store HDFS metadata

- Format: comma separated list of Hadoop URLs

- Used by: SNN

- Example:

<property>

<name>fs.checkpoint.dir</name>

<value>file:///secondarynamenodedir</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.permissions.supergroup

- Configuration file: hdfs-site.xml

- Purpose: specifies a group permitted to perform all HDFS filesystem operations

- Format: string

- Used by: NN, clients

- Example:

<property>

<name>dfs.permissions.supergroup</name>

<value>supergroup</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.balance.bandwidthPerSec

- Configuration file: hdfs-site.xml

- Purpose: specifies the maximum allowed bandwidth for HDFS balancing operations

- Format: integer

- Used by: DN

- Example:

<property>

<name>dfs.balance.bandwidthPerSec</name>

<value>100000000</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.blocksize

- Configuration file: hdfs-site.xml

- Purpose: specifies the HDFS block size of newly created files

- Guideline: 64 MB

- Format: integer

- Used by: clients

- Example:

<property>

<name>dfs.blocksize</name>

<value>67108864</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.datanode.du.reserved

- Configuration file: hdfs-site.xml

- Purpose: specifies the disk space reserved for MapReduce temporary data

- Guideline: 20-30% of the datanode disk space

- Format: integer

- Used by: DN

- Example:

<property>

<name>dfs.datanode.du.reserved</name>

<value>10737418240</value>

</property>
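The guideline above can be converted into a byte value for the configuration file. This is a minimal sketch, assuming the reservation is applied per datanode volume and that 25% is chosen from the 20-30% range; the function name and the 40 GiB volume are hypothetical.

```python
def du_reserved_bytes(volume_bytes: int, fraction: float = 0.25) -> int:
    """Bytes to keep free on one datanode volume for non-HDFS (temporary) data."""
    return int(volume_bytes * fraction)

# A 40 GiB volume with 25% reserved yields the value used in the example above.
print(du_reserved_bytes(40 * 1024**3))
```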

Hadoop configuration⌘

- Parameter: dfs.namenode.handler.count

- Configuration file: hdfs-site.xml

- Purpose: specifies the number of concurrent namenode handlers

- Guideline: natural logarithm of the number of cluster nodes, times 20, as a whole number

- Format: integer

- Used by: NN

- Example:

<property>

<name>dfs.namenode.handler.count</name>

<value>105</value>

</property>
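The guideline above is easy to evaluate for a given cluster size. A minimal sketch follows; the function name is hypothetical, and the result is truncated to a whole number as the guideline suggests.

```python
import math

def namenode_handler_count(cluster_nodes: int) -> int:
    """Natural logarithm of the node count, times 20, truncated to an integer."""
    return int(math.log(cluster_nodes) * 20)

# A 200-node cluster gives the value used in the example above.
print(namenode_handler_count(200))
```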

Hadoop configuration⌘

- Parameter: dfs.datanode.failed.volumes.tolerated

- Configuration file: hdfs-site.xml

- Purpose: specifies the number of datanode disks that are permitted to die before failing the entire datanode

- Format: integer

- Used by: DN

- Example:

<property>

<name>dfs.datanode.failed.volumes.tolerated</name>

<value>0</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.hosts

- Configuration file: hdfs-site.xml

- Purpose: specifies the location of dfs.hosts file

- Format: Linux path

- Used by: NN

- Example:

<property>

<name>dfs.hosts</name>

<value>/etc/hadoop/conf/dfs.hosts</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.hosts.exclude

- Configuration file: hdfs-site.xml

- Purpose: specifies the location of dfs.hosts.exclude file

- Format: Linux path

- Used by: NN

- Example:

<property>

<name>dfs.hosts.exclude</name>

<value>/etc/hadoop/conf/dfs.hosts.exclude</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.nameservices

- Configuration file: hdfs-site.xml

- Purpose: specifies virtual namenode names used for the namenode HA and federation purpose

- Format: comma separated strings

- Used by: NN, clients

- Example:

<property>

<name>dfs.nameservices</name>

<value>HAcluster1,HAcluster2</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.ha.namenodes.[nameservice]

- Configuration file: hdfs-site.xml

- Purpose: specifies logical namenode names used for the namenode HA purpose

- Format: comma separated strings

- Used by: DN, NN, clients

- Example:

<property>

<name>dfs.ha.namenodes.HAcluster1</name>

<value>namenode1,namenode2</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.namenode.rpc-address.[nameservice].[namenode]

- Configuration file: hdfs-site.xml

- Purpose: specifies namenode RPC address used for the namenode HA purpose

- Format: hostname:port

- Used by: DN, NN, clients

- Example:

<property>

<name>dfs.namenode.rpc-address.HAcluster1.namenode1</name>

<value>namenode1.hadooplab:8020</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.namenode.http-address.[nameservice].[namenode]

- Configuration file: hdfs-site.xml

- Purpose: specifies namenode HTTP address used for the namenode HA purpose

- Format: hostname:port

- Used by: DN, NN, clients

- Example:

<property>

<name>dfs.namenode.http-address.HAcluster1.namenode1</name>

<value>namenode1.hadooplab:50070</value>

</property>

Hadoop configuration⌘

- Parameter: topology.script.file.name

- Configuration file: core-site.xml

- Purpose: specifies the location of a script for rack-awareness purpose

- Format: Linux path

- Used by: NN

- Example:

<property>

<name>topology.script.file.name</name>

<value>/etc/hadoop/conf/topology.sh</value>

</property>
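A rack-awareness script receives one or more host names or IP addresses as command-line arguments and prints one rack path per argument. The sketch below shows what such a script might look like in Python; the address-to-rack table is entirely hypothetical, and the script referenced in the example above (topology.sh) could equally be a shell script.

```python
#!/usr/bin/env python3
import sys

# Hypothetical mapping of worker addresses to rack paths.
RACKS = {
    "10.0.1.11": "/dc1/rack1",
    "10.0.1.12": "/dc1/rack1",
    "10.0.2.11": "/dc1/rack2",
}
DEFAULT_RACK = "/default-rack"

def resolve(hosts):
    """Return one rack path per host, falling back to the default rack."""
    return [RACKS.get(host, DEFAULT_RACK) for host in hosts]

if __name__ == "__main__":
    # Print one rack per argument, in the same order as the arguments.
    for rack in resolve(sys.argv[1:]):
        print(rack)
```

Unknown hosts fall back to a default rack so that a new node with no table entry does not break the lookup.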

Hadoop configuration⌘

- Parameter: dfs.namenode.shared.edits.dir

- Configuration file: hdfs-site.xml

- Purpose: specifies the location of shared directory on QJM with namenode metadata for namenode HA purpose

- Format: Hadoop URL

- Used by: NN, clients

- Example:

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://namenode1.hadooplab.com:8485/hadoop/hdfs/HAcluster</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.client.failover.proxy.provider.[nameservice]

- Configuration file: hdfs-site.xml

- Purpose: specifies the class used for locating active namenode for namenode HA purpose

- Format: Java class

- Used by: DN, clients

- Example:

<property>

<name>dfs.client.failover.proxy.provider.HAcluster1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.ha.fencing.methods

- Configuration file: hdfs-site.xml

- Purpose: specifies the fencing methods for namenode HA purpose

- Format: newline-separated list of fencing methods (for example sshfence or shell(/path/to/script))

- Used by: NN

- Example:

<property>

<name>dfs.ha.fencing.methods</name>

<value>shell(/usr/local/bin/STONITH.sh)</value>

</property>

Hadoop configuration⌘

- Parameter: dfs.ha.automatic-failover.enabled

- Configuration file: hdfs-site.xml

- Purpose: specifies whether the automatic namenode failover is enabled or not

- Format: 'true' / 'false'

- Used by: NN, clients

- Example:

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

Hadoop configuration⌘

- Parameter: ha.zookeeper.quorum

- Configuration file: core-site.xml

- Purpose: specifies the ZooKeeper quorum used by the ZKFCs for automatic namenode failover

- Format: comma separated list of hostname:port pairs

- Used by: NN

- Example:

<property>

<name>ha.zookeeper.quorum</name>

<value>namenode1.hadooplab:2181,namenode2.hadooplab:2181</value>

</property>

Hadoop configuration⌘

- Parameter: fs.viewfs.mounttable.default.link.[path]

- Configuration file: hdfs-site.xml

- Purpose: specifies the mount point of the namenode or namenode cluster for namenode federation purpose

- Format: Hadoop URL or namenode cluster virtual name

- Used by: NN

- Example:

<property>

<name>fs.viewfs.mounttable.default.link./federation1</name>

<value>hdfs://namenode.hadooplab:8020</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.hostname

- Configuration file: yarn-site.xml

- Purpose: specifies the location of resource manager

- Format: hostname

- Used by: RM, NM, clients

- Example:

<property>

<name>yarn.resourcemanager.hostname</name>

<value>resourcemanager.hadooplab</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.nodemanager.local-dirs

- Configuration file: yarn-site.xml

- Purpose: specifies where the resource manager and node manager should store application temporary data

- Format: comma separated list of Hadoop URLs

- Used by: RM, NM

- Example:

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>file:///applocaldir</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.nodemanager.resource.memory-mb

- Configuration file: yarn-site.xml

- Purpose: specifies memory in megabytes available for allocation for the applications

- Format: integer

- Used by: NM

- Example:

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>40960</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.nodemanager.resource.cpu-vcores

- Configuration file: yarn-site.xml

- Purpose: specifies virtual cores available for allocation for the applications

- Format: integer

- Used by: NM

- Example:

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>8</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.scheduler.minimum-allocation-mb

- Configuration file: yarn-site.xml

- Purpose: specifies minimum amount of memory in megabytes allocated for the applications

- Format: integer

- Used by: NM

- Example:

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.scheduler.minimum-allocation-vcores

- Configuration file: yarn-site.xml

- Purpose: specifies minimum number of virtual cores allocated for the applications

- Format: integer

- Used by: NM

- Example:

<property>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>2</value>

</property>
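Taken together, the node manager resources and the scheduler minimums bound how many containers can run on one worker. The sketch below uses the example values from the preceding slides (40960 MB, 8 vcores, 2048 MB and 2 vcores per container) and assumes that both memory and vcores are enforced, which depends on the scheduler configuration; the function name is hypothetical.

```python
def max_containers_per_node(node_memory_mb: int, node_vcores: int,
                            min_alloc_mb: int, min_alloc_vcores: int) -> int:
    """Upper bound on minimum-size containers that fit on one node manager."""
    by_memory = node_memory_mb // min_alloc_mb
    by_vcores = node_vcores // min_alloc_vcores
    # The scarcer resource determines the limit.
    return min(by_memory, by_vcores)

print(max_containers_per_node(40960, 8, 2048, 2))
```

With these values memory would allow 20 containers but vcores allow only 4, so the CPU setting is the bottleneck.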

Hadoop configuration⌘

- Parameter: yarn.scheduler.increment-allocation-mb

- Configuration file: yarn-site.xml

- Purpose: specifies increment amount of memory in megabytes for allocation for the applications

- Format: integer

- Used by: NM

- Example:

<property>

<name>yarn.scheduler.increment-allocation-mb</name>

<value>512</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.scheduler.increment-allocation-vcores

- Configuration file: yarn-site.xml

- Purpose: specifies incremental number of virtual cores for allocation for the applications

- Format: integer

- Used by: NM

- Example:

<property>

<name>yarn.scheduler.increment-allocation-vcores</name>

<value>1</value>

</property>
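The minimum and increment settings determine the container size an application actually receives. The following is a simplified model, not the scheduler's real code: it assumes a request is rounded up to the next multiple of the increment and never falls below the minimum, and it ignores the maximum allocation limit.

```python
import math

def normalize_request_mb(requested_mb: int, minimum_mb: int = 2048,
                         increment_mb: int = 512) -> int:
    """Round a memory request up to the next increment, with a minimum floor."""
    rounded = math.ceil(requested_mb / increment_mb) * increment_mb
    return max(minimum_mb, rounded)

for request in (1000, 2048, 3000):
    print(request, "->", normalize_request_mb(request))
```

The defaults mirror the example values above (2048 MB minimum, 512 MB increment).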

Hadoop configuration⌘

- Parameter: mapreduce.task.io.sort.mb

- Configuration file: mapred-site.xml

- Purpose: specifies the size of the circular in-memory buffer used to store MapReduce temporary data

- Guideline: ~10% of the JVM heap size

- Format: integer (MB)

- Used by: NM

- Example:

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>128</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.task.io.sort.factor

- Configuration file: mapred-site.xml

- Purpose: specifies the number of files to merge at once

- Guideline: 64 for 1 GB JVM heap size

- Format: integer

- Used by: NM

- Example:

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>64</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.map.output.compress

- Configuration file: mapred-site.xml

- Purpose: specifies whether the output of the map tasks should be compressed or not

- Format: 'true' / 'false'

- Used by: NM

- Example:

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.map.output.compress.codec

- Configuration file: mapred-site.xml

- Purpose: specifies the codec used for map tasks output compression

- Format: Java class

- Used by: NM

- Example:

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.output.fileoutputformat.compress.type

- Configuration file: mapred-site.xml

- Purpose: specifies whether the compression should be applied to the values only, key-value pairs or not at all

- Format: 'RECORD' / 'BLOCK' / 'NONE'

- Used by: NM

- Example:

<property>

<name>mapreduce.output.fileoutputformat.compress.type</name>

<value>BLOCK</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.reduce.shuffle.parallelcopies

- Configuration file: mapred-site.xml

- Purpose: specifies the number of threads started in parallel to fetch the output from map tasks

- Guideline: natural logarithm of the size of the cluster, times four, as a whole number

- Format: integer

- Used by: NM

- Example:

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>5</value>

</property>
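To see how the guideline above scales, it can be tabulated for a few cluster sizes. This is a minimal sketch with a hypothetical function name; the cluster sizes are arbitrary examples.

```python
import math

def shuffle_parallel_copies(cluster_nodes: int) -> int:
    """Natural logarithm of the cluster size, times four, truncated to an integer."""
    return int(math.log(cluster_nodes) * 4)

for nodes in (4, 10, 100, 1000):
    print(f"{nodes:>5} nodes -> {shuffle_parallel_copies(nodes)} parallel copies")
```

The value grows slowly: a cluster 250 times larger needs only about five times as many fetch threads.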

Hadoop configuration⌘

- Parameter: mapreduce.job.reduces

- Configuration file: mapred-site.xml

- Purpose: specifies the number of reduce tasks to use for the MapReduce jobs

- Format: integer

- Used by: RM

- Example:

<property>

<name>mapreduce.job.reduces</name>

<value>1</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.shuffle.max.threads

- Configuration file: mapred-site.xml

- Purpose: specifies the number of threads started in parallel to serve the output of map tasks

- Format: integer

- Used by: RM

- Example:

<property>

<name>mapreduce.shuffle.max.threads</name>

<value>1</value>

</property>

Hadoop configuration⌘

- Parameter: mapreduce.job.reduce.slowstart.completedmaps

- Configuration file: mapred-site.xml

- Purpose: specifies when to start allocating reducers as the number of complete map tasks grows

- Format: float

- Used by: RM

- Example:

<property>

<name>mapreduce.job.reduce.slowstart.completedmaps</name>

<value>0.5</value>

</property>
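The slow-start value is a fraction of the job's map tasks. As a rough illustration, assuming the threshold is rounded up to a whole task, the number of maps that must finish before reducers are scheduled can be computed as follows; the function name and the 200-map job are hypothetical.

```python
import math

def maps_before_reducers(total_maps: int, slowstart: float = 0.5) -> int:
    """Completed map tasks required before reducers may be scheduled."""
    return math.ceil(slowstart * total_maps)

# With the example value 0.5, a 200-map job waits for 100 completed maps.
print(maps_before_reducers(200))
```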

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.ha.rm-ids

- Configuration file: yarn-site.xml

- Purpose: specifies logical resource manager names used for resource manager HA purpose

- Format: comma separated strings

- Used by: RM, NM, clients

- Example:

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>resourcemanager1,resourcemanager2</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.ha.id

- Configuration file: yarn-site.xml

- Purpose: specifies preferred resource manager for an active unit

- Format: string

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>resourcemanager1</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.address.[resourcemanager]

- Configuration file: yarn-site.xml

- Purpose: specifies resource manager RPC address

- Format: hostname:port

- Used by: RM, clients

- Example:

<property>

<name>yarn.resourcemanager.address.resourcemanager1</name>

<value>resourcemanager1.hadooplab:8032</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.scheduler.address.[resourcemanager]

- Configuration file: yarn-site.xml

- Purpose: specifies resource manager RPC address for Scheduler service

- Format: hostname:port

- Used by: RM, clients

- Example:

<property>

<name>yarn.resourcemanager.scheduler.address.resourcemanager1</name>

<value>resourcemanager1.hadooplab:8030</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.admin.address.[resourcemanager]

- Configuration file: yarn-site.xml

- Purpose: specifies resource manager RPC address used for the resource manager HA purpose

- Format: hostname:port

- Used by: RM, clients

- Example:

<property>

<name>yarn.resourcemanager.admin.address.resourcemanager1</name>

<value>resourcemanager1.hadooplab:8033</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.resource-tracker.address.[resourcemanager]

- Configuration file: yarn-site.xml

- Purpose: specifies resource manager RPC address for Resource Tracker service

- Format: hostname:port

- Used by: RM, NM

- Example:

<property>

<name>yarn.resourcemanager.resource-tracker.address.resourcemanager1</name>

<value>resourcemanager1.hadooplab:8031</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.webapp.address.[resourcemanager]

- Configuration file: yarn-site.xml

- Purpose: specifies resource manager address for web UI and REST API services

- Format: hostname:port

- Used by: RM, clients

- Example:

<property>

<name>yarn.resourcemanager.webapp.address.resourcemanager1</name>

<value>resourcemanager1.hadooplab:8088</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.ha.fencer

- Configuration file: yarn-site.xml

- Purpose: specifies the fencing methods for resourcemanager HA purpose

- Format: new-line-separated list of Linux paths

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.ha.fencer</name>

<value>/usr/local/bin/STONITH.sh</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.ha.auto-failover.enabled

- Configuration file: yarn-site.xml

- Purpose: enables automatic failover in resource manager HA pair

- Format: boolean

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.ha.auto-failover.enabled</name>

<value>true</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.recovery.enabled

- Configuration file: yarn-site.xml

- Purpose: enables job recovery on resource manager restart or during the failover

- Format: boolean

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.ha.auto-failover.port

- Configuration file: yarn-site.xml

- Purpose: specifies ZKFC port

- Format: integer

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.ha.auto-failover.port</name>

<value>8018</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.ha.enabled

- Configuration file: yarn-site.xml

- Purpose: enables resource manager HA feature

- Format: boolean value

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.store.class

- Configuration file: yarn-site.xml

- Purpose: specifies storage for resource manager internal state

- Format: string

- Used by: RM

- Example:

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

Hadoop configuration⌘

- Parameter: yarn.resourcemanager.cluster-id

- Configuration file: yarn-site.xml

- Purpose: specifies the logical name of YARN cluster

- Format: string

- Used by: RM, NM, clients

- Example:

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>HAcluster</value>

</property>

Hadoop configuration - exercises⌘

- Lab Exercise 3.2.1

- Lab Exercise 3.2.2

- Lab Exercise 3.2.3

- Lab Exercise 3.3.1

- Lab Exercise 3.3.2

- Lab Exercise 3.3.3

- Lab Exercise 3.3.4

- Lab Exercise 3.3.5

- Lab Exercise 3.3.6

- Lab Exercise 3.3.7

- Lab Exercise 3.3.8

- Lab Exercise 3.3.9

Third Day - Session IV⌘

Certification and Surveys⌘

- Congratulations on completing the course!

- Official Cloudera certification authority:

- Visit http://www.nobleprog.com for other courses

- Surveys: http://www.nobleprog.pl/te